Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

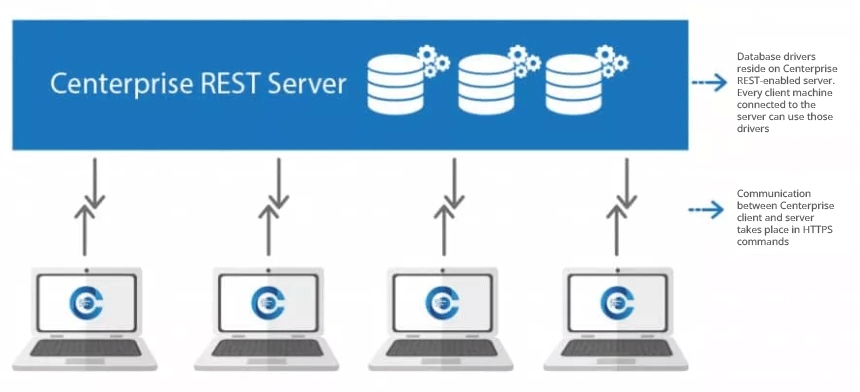

Astera Data Stack is built on a client-server architecture. The client is the part of the application which a user can run locally on their machine, whereas the server performs processing and querying requested by the client. In simple words, the client sends a request to the server, and the server, in turn, responds to the request. Therefore, database drivers are installed only on the Astera Data Stack server. This enables horizontal scaling by adding multiple clients to an existing cluster of servers and eliminating the need to install drivers on every machine.

The Astera client and server applications communicate on REST architecture. REST-compliant systems, often called RESTful systems, are characterized by statelessness and separate concerns of the client and server, which means that the implementation of both can be done independently if each side knows what format of messages to send to the other. The server communicates with the client using HTTPS commands, which are encrypted using a certified key/certificate signed by an authority. This saves the data from being intercepted by an attacker as the plaintext is encrypted as a random string of characters.

After you have successfully installed Express, open the application and you will see the User Logon screen as pictured below.

You can skip the log on or proceed with your preferred log on method.

Astera offers two methods to create your account:

Sign Up with Microsoft – Useful for users who have an active Microsoft account. No separate sign-up is required, since your Microsoft account already exists and can be used directly for login.

Sign Up with Email – Suitable for any other email domains. In this case, you will have to Create an account to complete the account creation process. This step is necessary because Email Authentication requires registration in our Azure tenant. Once created, your email is added both to our database and to the Azure directory.

Select your preferred method and go through the MFA steps.

Once you have signed up, the designer will launch. You can now start preparing your data.

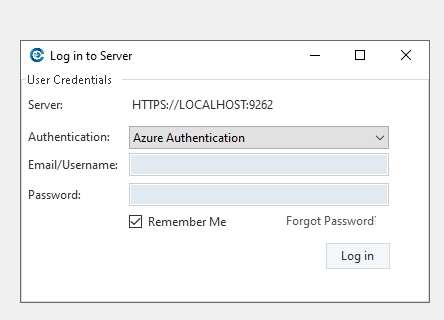

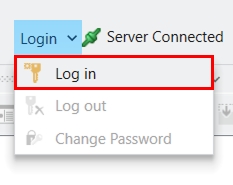

To log in from the designer, click the profile dropdown and select Log in.

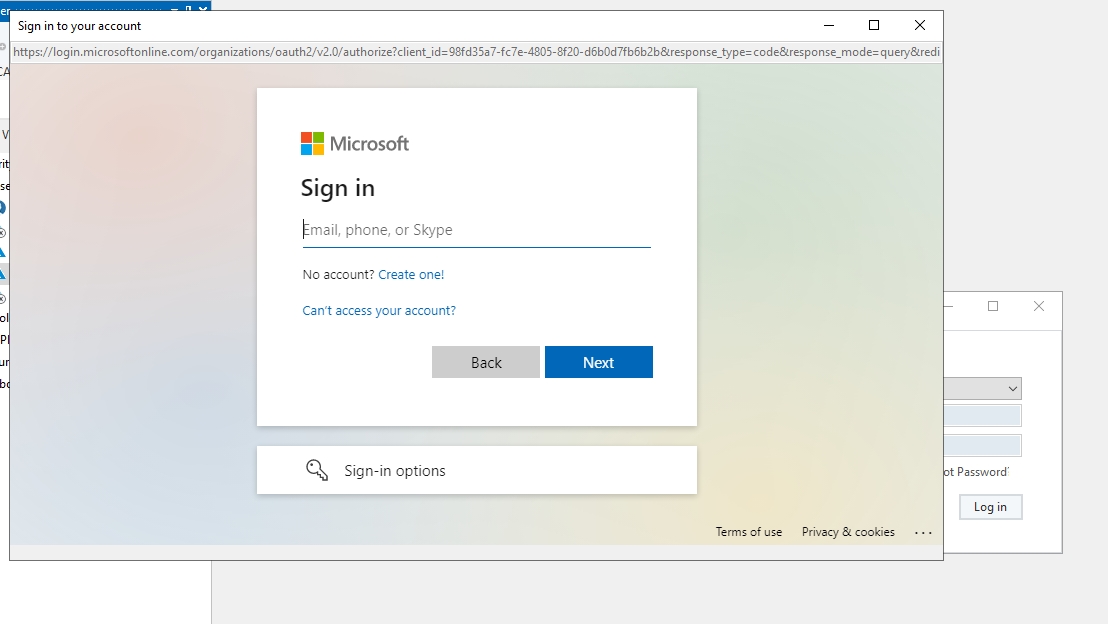

This will direct you to a login screen where you can provide your user credentials.

After you log in, you can click the Create New Chat button in the Dataprep AI Agent panel to begin chatting with the agent.

This release is a major milestone for Astera, introducing:

Astera Cloud - A comprehensive cloud-native platform that combines our different product offerings into a unified web-based portal. The portal serves as a single hub enabling users to sign up for a personalized experience with our products, download and get started using the right product in the configuration that best fits their needs, and handle administrative tasks such as adding or managing other users. The platform now supports both Dataprep and ReportMiner with scalable cloud infrastructure, dedicated storage, and seamless asset management.

Astera Express Editions - Lightweight versions of our core products designed for faster onboarding and streamlined usage scenarios. These editions remove complexity barriers while maintaining the essential functionality organizations need to get started quickly with data integration and processing tasks.

Astera Dataprep - Our first AI-powered self-service data preparation tool that empowers business users to clean, transform, and prepare their data without requiring technical expertise. This intelligent solution automates complex data preparation tasks while providing an intuitive interface for users at any skill level.

Together, these offerings make it easier than ever for organizations to access, manage, and prepare their data without worrying about infrastructure or complex technical processes. With cloud-native deployments and intuitive data preparation capabilities, this release democratizes data management and processing for organizations of all sizes.

Astera Cloud extends our platform beyond traditional on-premise deployments, allowing teams to leverage the full power of Astera without managing infrastructure. Preconfigured and optimized cloud servers let users focus on designing and running data flows instead of handling server resources.

Lightweight Client Installation: Install the client designer locally and continue working with the familiar drag-and-drop interface to build data flows, workflows, and more.

Server-Side Execution: All execution, scheduling, and processing runs on managed cloud infrastructure, minimizing local resource usage.

Scalable Infrastructure: Cloud servers automatically adjust to workload demands, ensuring reliable performance without capacity planning.

With this release, Astera Cloud now supports both Dataprep and ReportMiner, giving users the flexibility to prepare, extract, and integrate data seamlessly in the cloud.

The Astera Cloud experience is managed through a unified web-based portal that streamlines deployment, administration, and subscription management without the need for lengthy procurement cycles. This single interface provides:

User Configuration: Create and manage accounts with role-based permissions and access controls

Client Downloads: Access and download client applications

The portal provides a seamless experience in managing your complete Astera Cloud experience from one centralized location.

To simplify onboarding and accelerate adoption, Express editions are now available for both Dataprep and ReportMiner. These lightweight versions are designed for faster setup, simplified usage, and entry-level scenarios.

Dataprep Express: Quick access to AI-powered data preparation for smaller datasets and business use cases.

ReportMiner Express: Streamlined data extraction for simpler scenarios, without the overhead of advanced template management.

By default, the Express editions use local storage, with an option to connect to the cloud

We are proud to introduce Astera Dataprep, the fastest and simplest way to prepare data for analysis through an AI-powered, chat-based interface. Available on Astera Cloud as well as in the lightweight Dataprep Express edition, it enables both business and technical users to clean, transform, and prepare data by interacting with the AI agent using natural language instructions.

AI-Powered Chat Interface: Prepare data effortlessly with natural language instructions

Preview-Centric Tabular View: See real-time data changes with every action.

Flexible Import and Export Options: Work with Excel, CSV/TXT, and major databases (SQL Server, Oracle, PostgreSQL).

Instant Data Profiling: Gain insights into data quality, structure, and patterns instantly with real-time graphical profiles and chat-based analysis.

This concludes the Astera 12.0 Release Notes.

The Astera Cloud Portal provides secure access for managing your data integration projects in the cloud. In this document, we will walk through the steps to create an account on the Cloud Portal.

Go to cloudastera.com.

Click Create an account.

Astera offers two methods to create your account:

Sign Up with Microsoft – Useful for users who have an active Microsoft account.

Sign Up with Email – Suitable for any other email domains.

Select your preferred method and go through the MFA steps.

Once you have signed up, Step 2: Profile will appear. Enter your First Name and Last Name here.

Click Start Trial, you will be directed to the portal home page

You can now launch your designer by clicking Launch Designer to start designing data integration flows in Astera.

In this section we will discuss how to install and configure Astera Dataprep Express.

Run ‘DataprepExpress.exe’ from the installation package to start the express installation setup.

Astera Software License Agreement window will appear; check I agree to the license terms and conditions checkbox, then click Install.

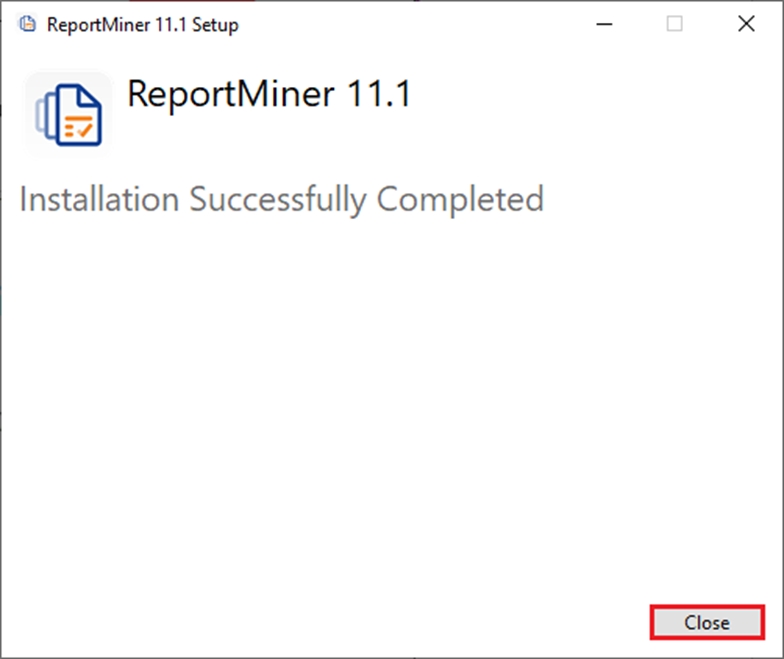

When the installation is successfully completed, click Close.

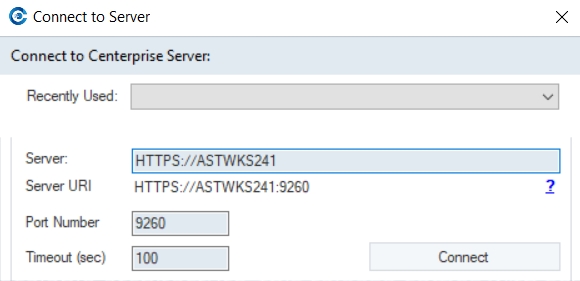

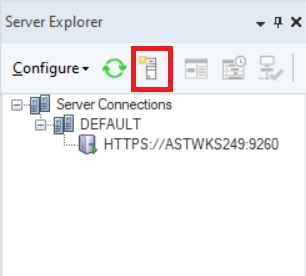

You can connect to different servers right from the Server Explorer window in the Client. Go to the Server Explorer window and click on the Connect to Server icon.

A prompt will appear that will confirm if you want to disconnect from the current Server and establish connection to a different server. Click Yes to proceed.

You will be directed to the Server Connection screen. Enter the required server information (Server URI and Port Number) to connect to the server and click Connect.

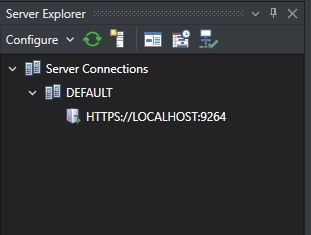

If the connection is successfully established, you should be able to see the connected server in the Server Explorer window.

Data pipelines in Astera, are designed in the Designer. The designer is a desktop based interface where users can design and test their pipelines before they run them on the cloud server.

To get started, you need to launch your designer from the portal by clicking on Launch Designer.

The launch designer screen will appear, which will automatically open the designer if previously downloaded. If not, download it by clicking the Download button.

The download button will download an executable, run the executable.

Astera Software License Agreement window will appear; check I agree to the license terms and conditions checkbox, then click Install.

When the installation is successfully completed, click Close.

The designer will now launch automatically.

The designer has successfully launched; you can now start preparing your data.

Application Processor

Dual Core or greater (recommended); 2.0 GHz or greater

Operating System

Windows 10 or newer

Memory

8GB or greater (recommended)

Hard Disk Space

2 GB – (including .NET Desktop Runtime installed)

AI Subscription Requirements

OpenAI API (provided as part of the package)

LLAMA API

Together AI

Other

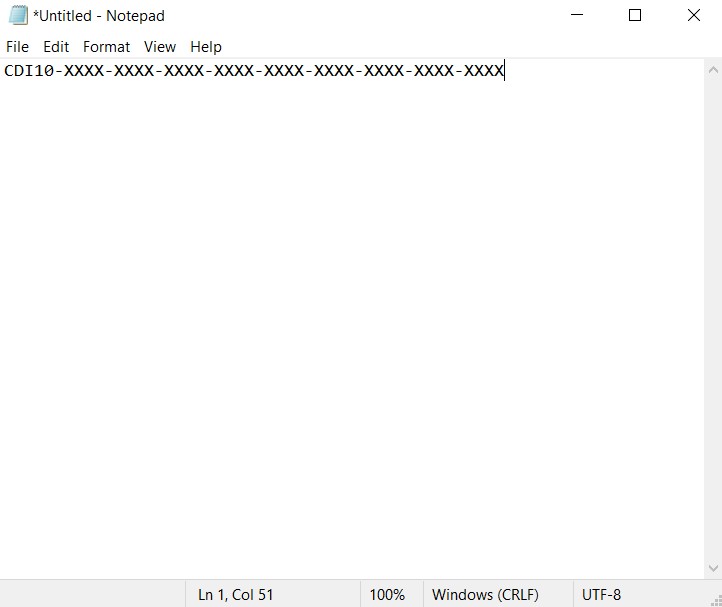

In some cases, it may be necessary to supply a license key without prompting the end user to do so. For example, in a scenario where the end user does not have access to install software, a systems administrator may do this as part of a script.

One possible solution is to place the license key in a text file. This way, the administrator can easily license each machine without having to go through the licensing prompt for each user.

Here’s a step-by-step guide to supplying a license key without prompting the user:

To get started, create a new text document that will hold the license key required to access the application.

In the text document, enter a valid license key. The key must be the only thing in the document, and it must be on the very first line. Make sure there are no unnecessary leading or trailing spaces, lines, or any characters other than those of the license key.

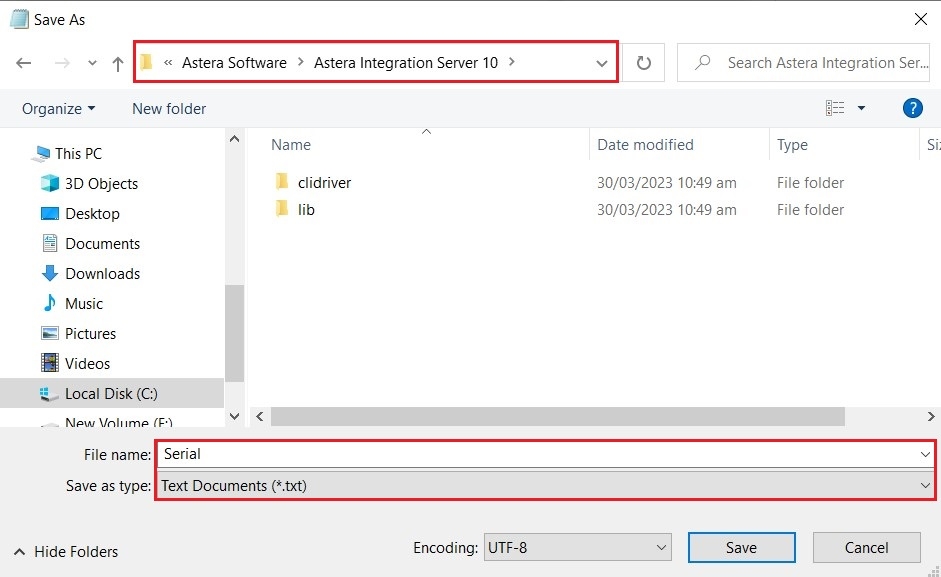

Name the text document “Serial” and save it in the Integration Server Folder of the application located in Program Files on your PC. For instance, if the application is Astera, save the Text Document in the “Astera Integration Server 10” folder. This folder contains the files and settings for the server application. The directory path would be as follows:

C:\Program Files\Astera Software\Astera Integration Server 10.

Finally, restart the Astera Integration Server 10 service to complete the process. This step ensures that, from now on, when the user launches Astera or any other application by Astera, they will not be prompted to enter a license key.

Also, please keep in mind that all license restrictions are still in effect, and this process only bypasses the user prompt for the key.

In conclusion, by following these simple steps, system administrators can easily supply a license key without prompting the end user. This approach is particularly useful when installing software remotely or when licensing multiple machines.

The Python server is embedded in the Astera server and is required to use the Text Converter object in the tool. It is disabled by default, and this document will guide us through the process of enabling it.

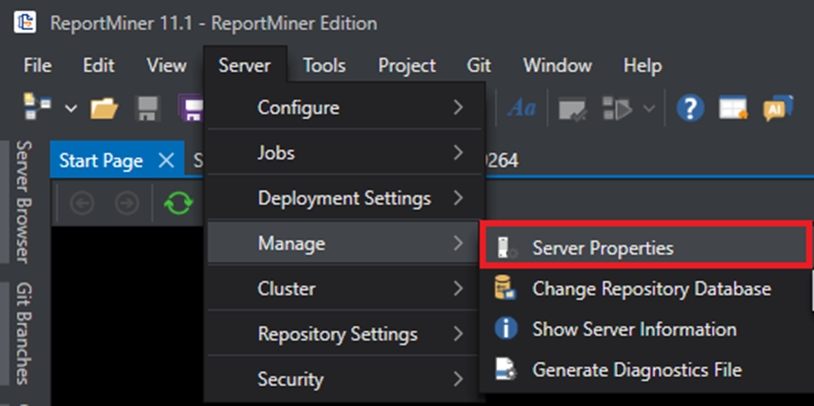

Launch the client and navigate to Server > Manage > Server Properties.

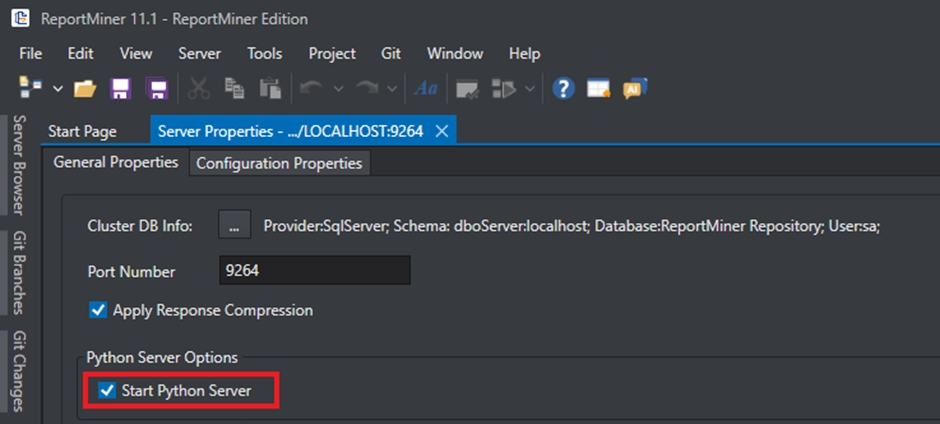

In the Server Properties, check the Start Python Server checkbox and press Ctrl + S to save your changes.

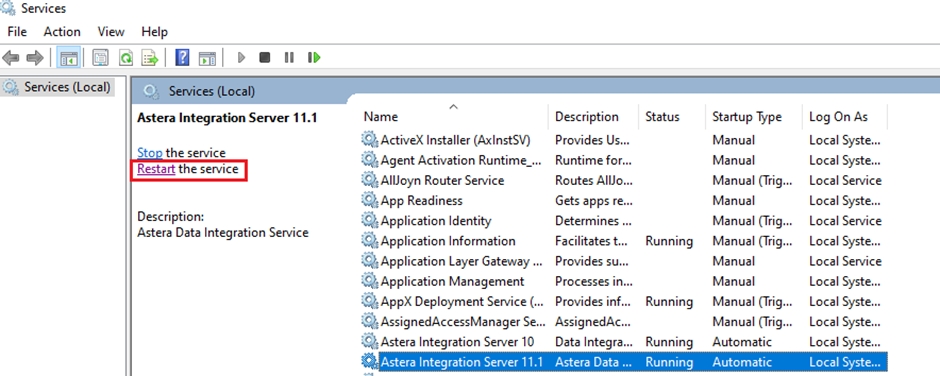

Now open the Start Menu > Services and restart the service of Astera Integration Server 11.1.

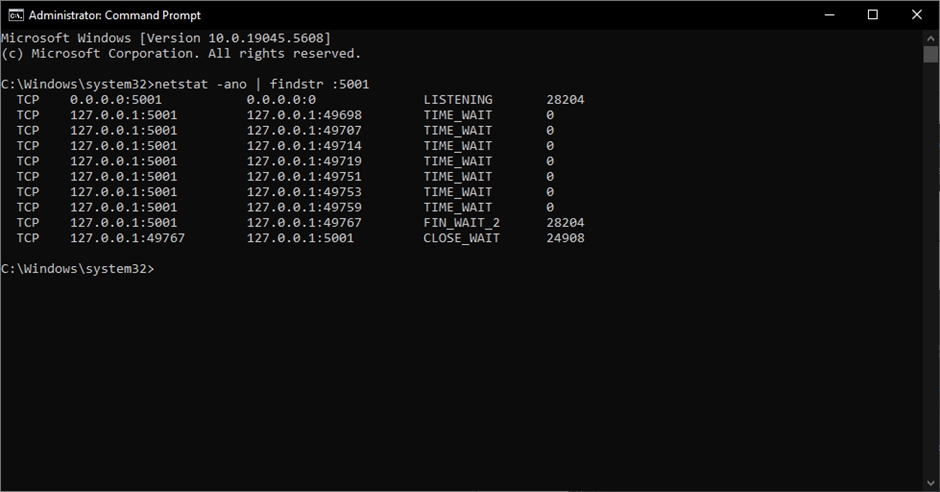

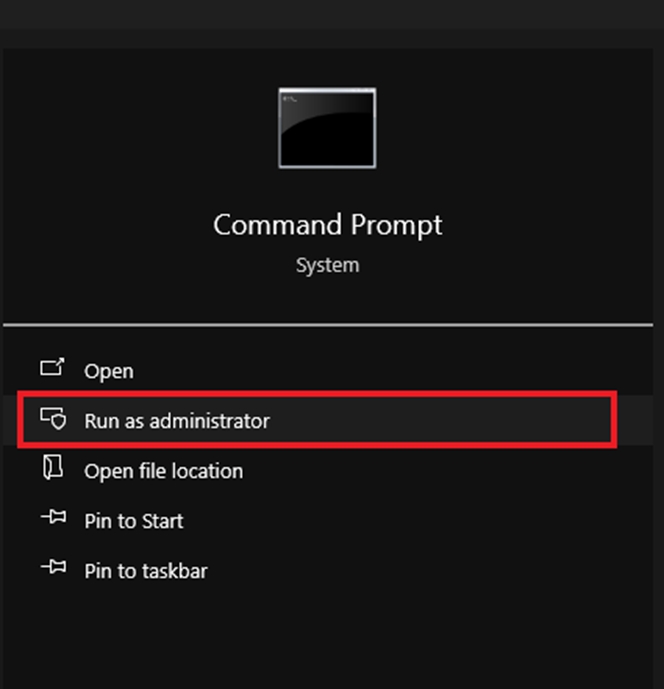

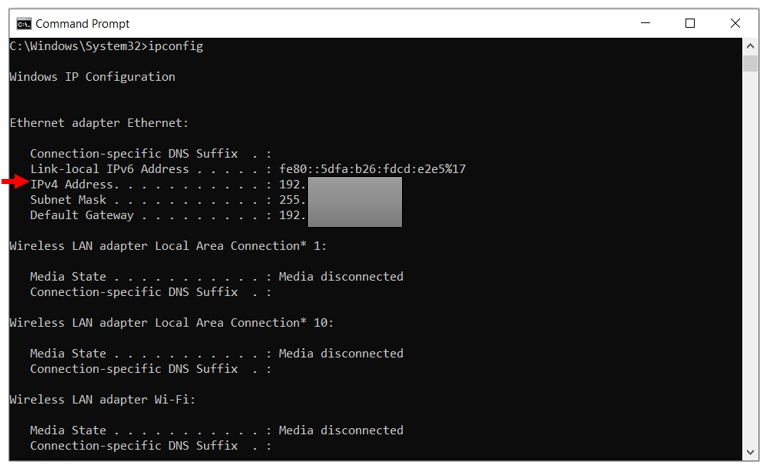

After restarting the service, wait for a few minutes and run cmd as administrator.

Typenetstat -ano | findstr :5001 command in the command prompt to check if your python server is running.

We’ve successfully enabled the Python server. You can now close this window and use any python server dependent features in the Client.

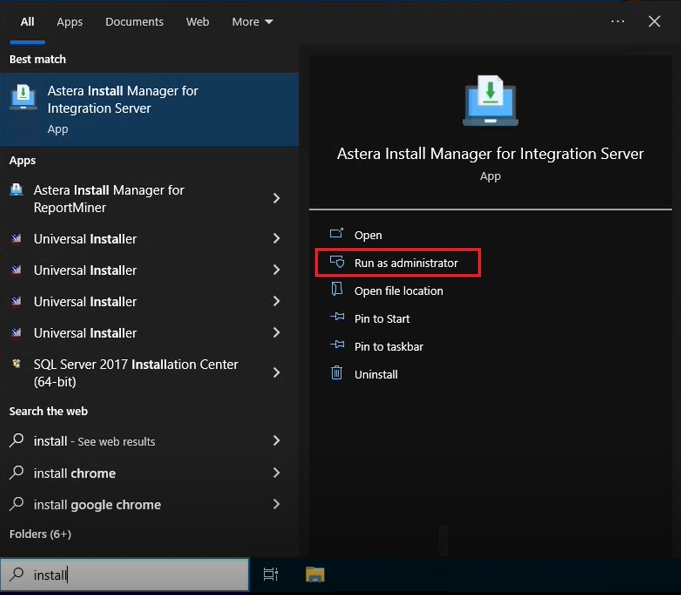

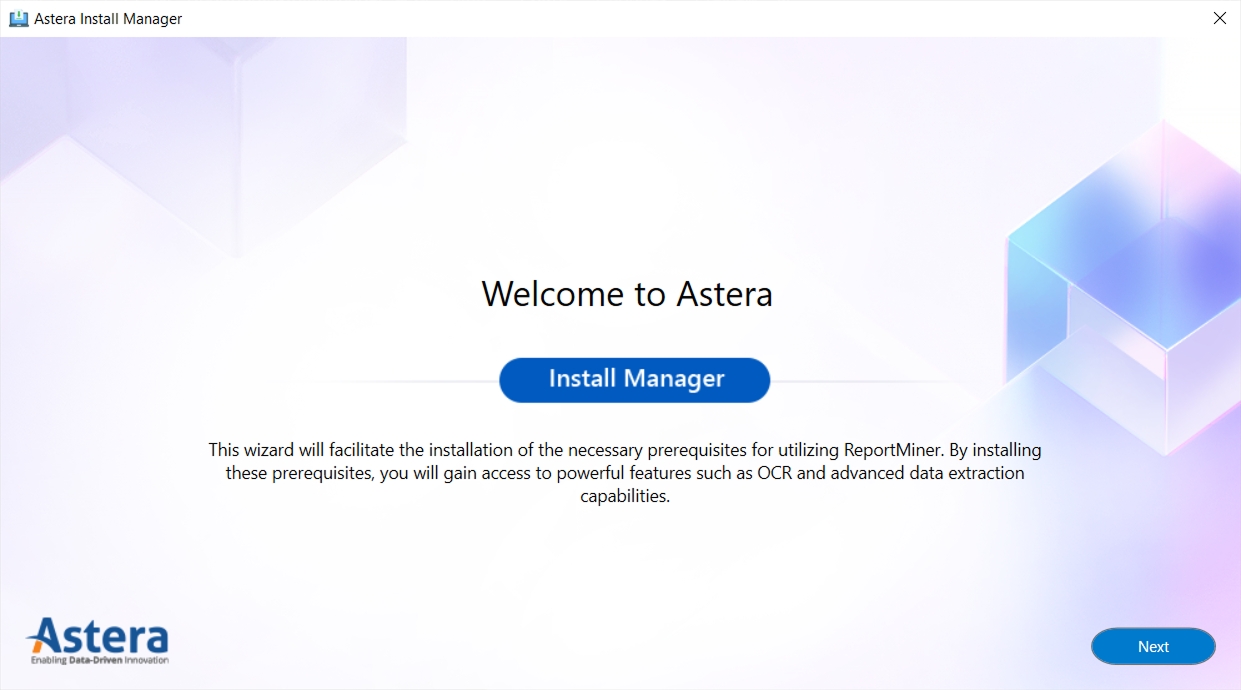

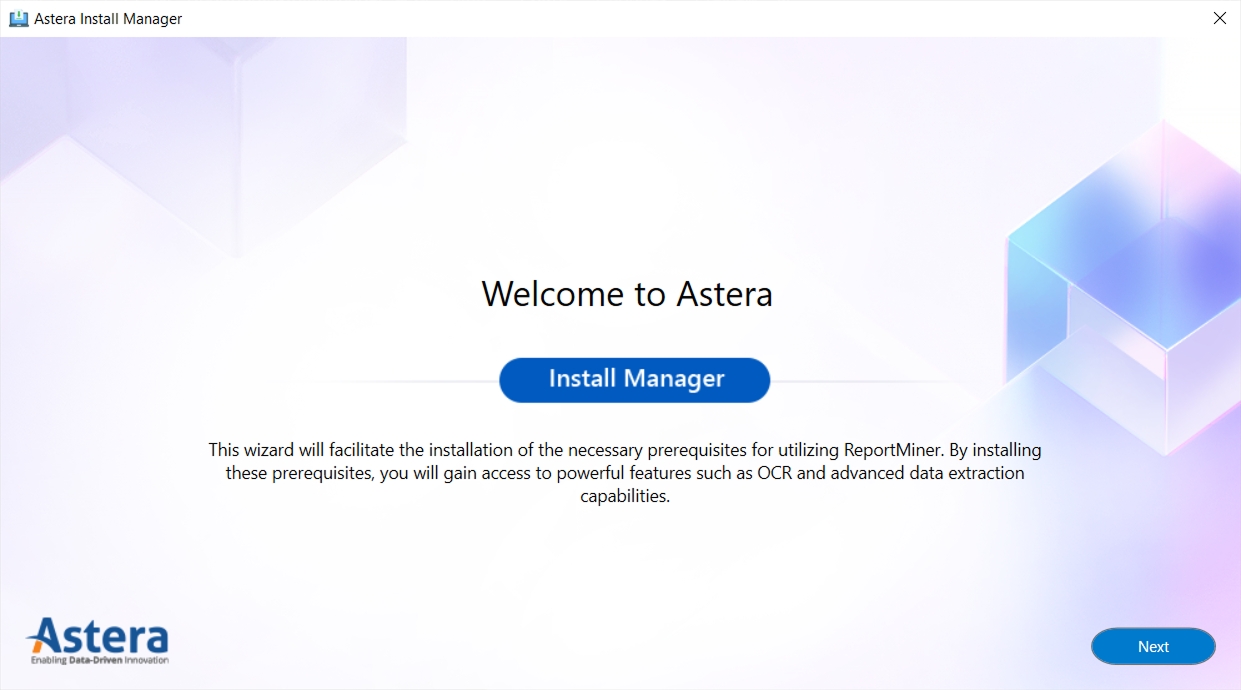

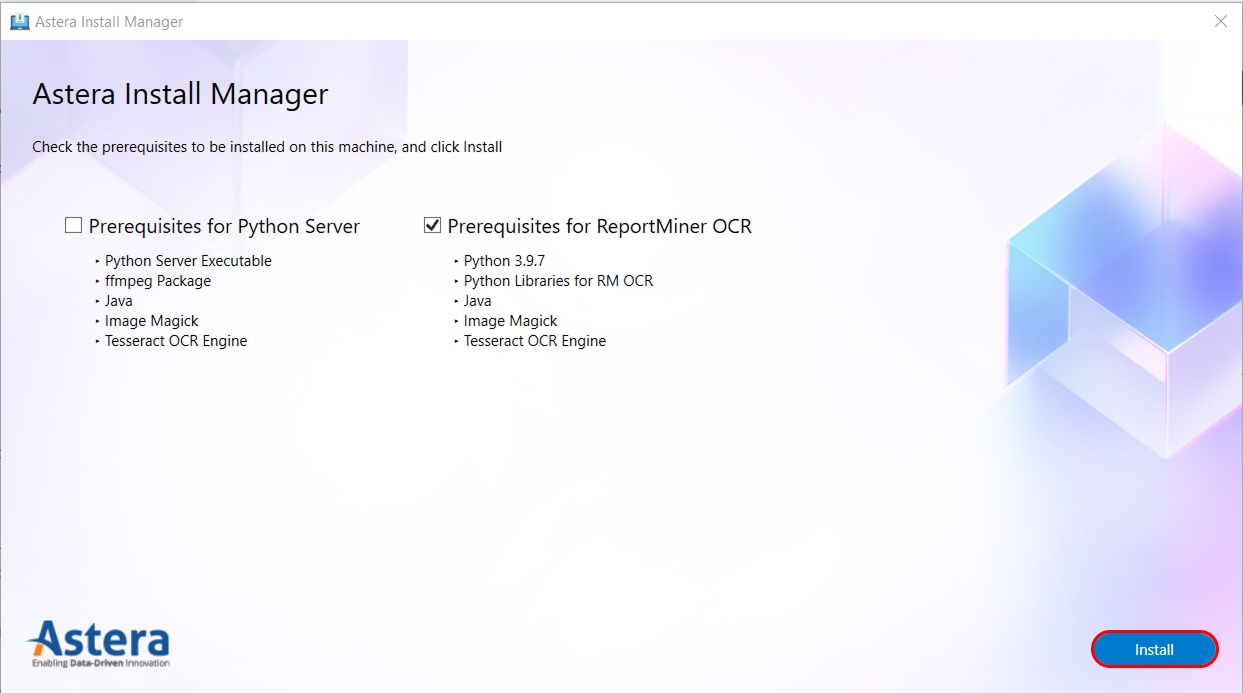

In order to access the install manager on the sever machine, open start and search for “Install Manager for Integration Server”.

Run this Install Manager as admin.

The Install Manager welcome window will appear. Click on Next.

If the prerequisite packages are already installed, the Install Manager will inform you about them and give you the option to uninstall or update them as needed.

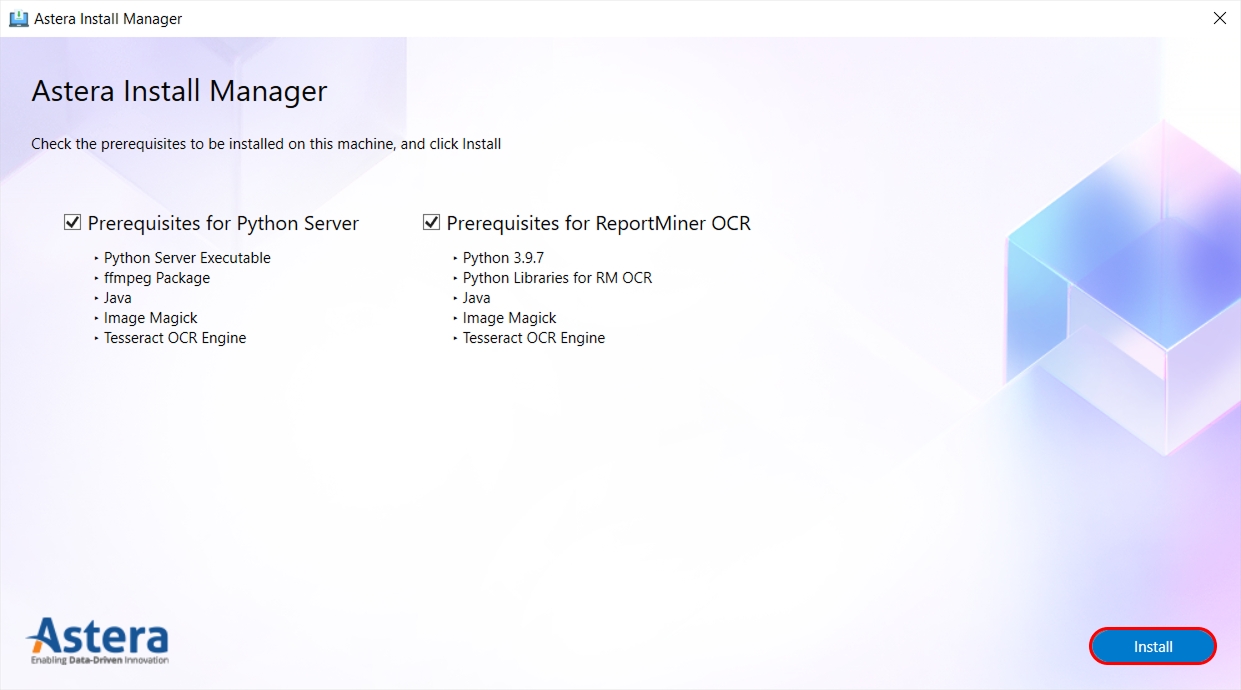

If the prerequisite packages are not installed, then the Install Manager will present you with the option to install them. Check the box next to the pre-requisite package, and then click on Install.

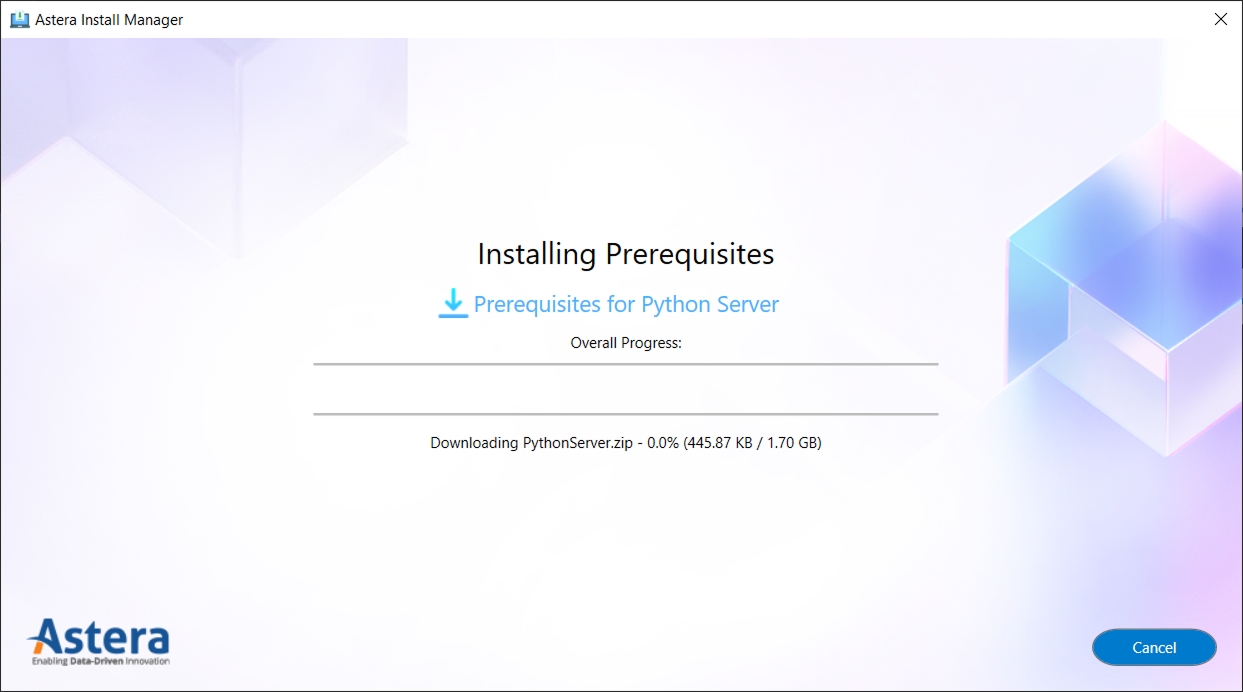

During the installation, the Install Manager window will display a progress bar showing the installation progress.

You can also cancel the installation at any point if necessary.

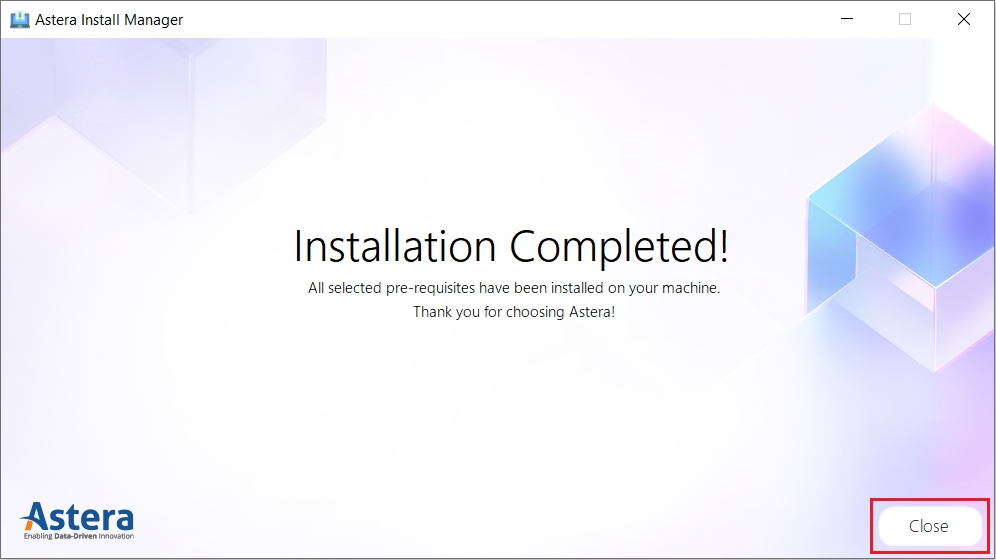

Once the installation is complete, the Install Manager will prompt you. Click on Close to exit out of the Install Manager.

This concludes our discussion on how to use the install manager for Astera.

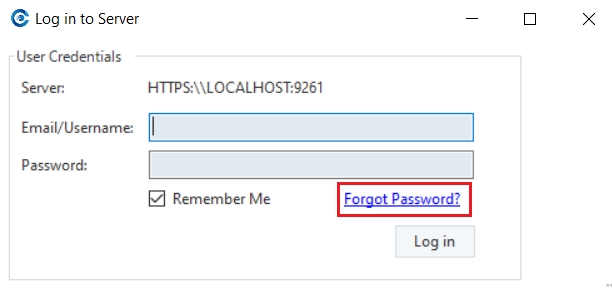

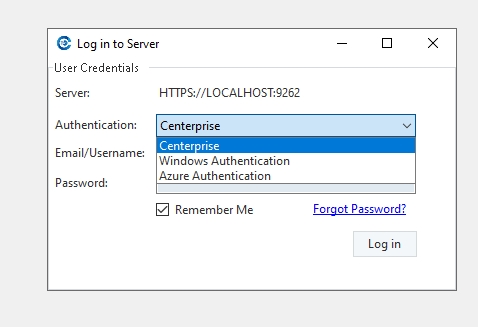

Once you have created the repository and configured the server, the next step is to login using your Astera account credentials.

You will not be able to design any dataflows or workflows on the client if you haven’t logged in to your Astera account. The options will be disabled.

Go to Server > Configure > Step 2: Login as admin.

This will direct you to a login screen where you can provide your user credentials.

If you are using Astera 10 for the first time, you can login using the default credentials as follows:

Username: admin Password: Admin123

After you log in, you will see that the options in the Astera Client are enabled.

You can use these options until your trial period is active. For fully activating the options and the product, you’ll have to enter your license.

If you don’t want Astera to show you the server connection screen every time you run the client application, you can skip that by modifying the settings.

To do that go to Tools > Options > Client Startup and select the Auto Connect to Server option. On enabling the option, Astera will store the server details you entered previously and will use those details to automatically reconnect to the server every time you run the application.

The next step after logging in is to unlock Astera using the License key.

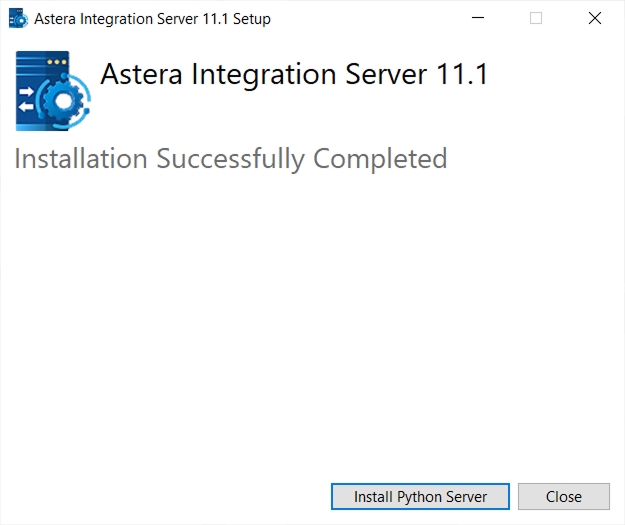

In this section we will discuss how to install and configure Astera Server and Client applications.

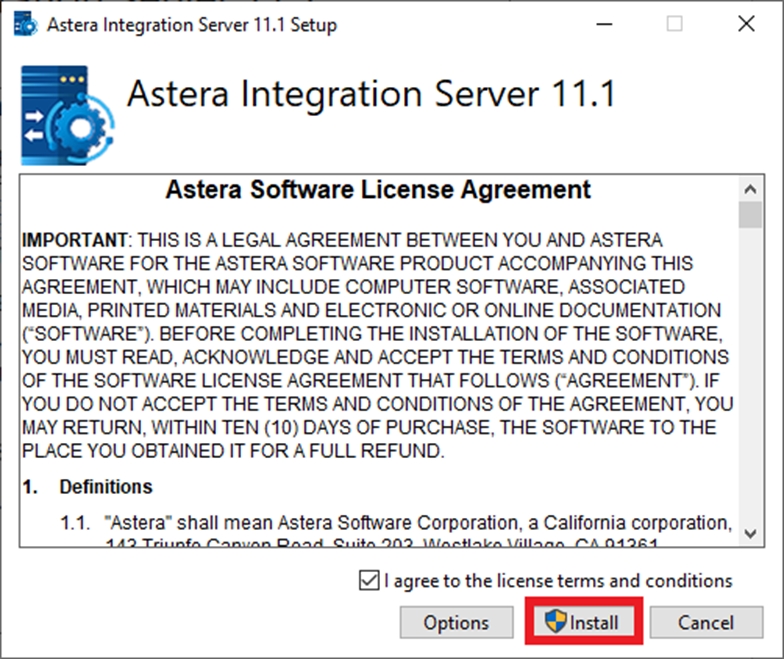

Run ‘IntegrationServer.exe’ from the installation package to start the server installation setup.

Astera Software License Agreement window will appear; check I agree to the license terms and conditions checkbox, then click Install.

Your server installation has been completed. If you want to use advanced features such as OCR, Text Converter, etc, click on Install Python Server and follow the steps .

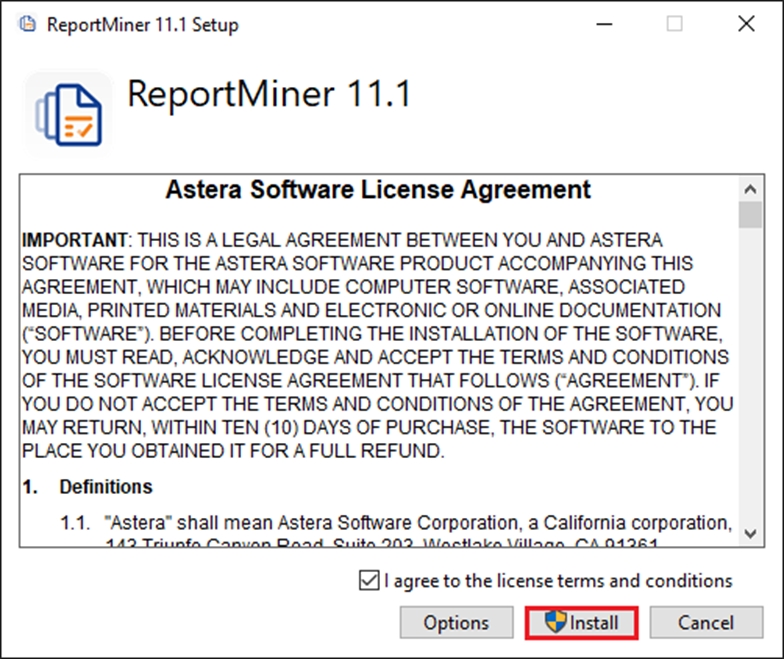

Run the ‘ReportMiner’ application from the installation package to start the client installation setup.

Astera Software License Agreement window will appear; check I agree to the license terms and conditions checkbox, then click Install.

When the installation is successfully completed, click Close.

Application Processor

Dual Core or greater (recommended); 2.0 GHz or greater

Operating System

Windows 10 or newer

Memory

8GB or greater (recommended)

Hard Disk Space

2 GB – (including .NET Desktop Runtime installed)

AI Subscription Requirements

OpenAI API (provided as part of the package)

LLAMA API

Together AI

Other

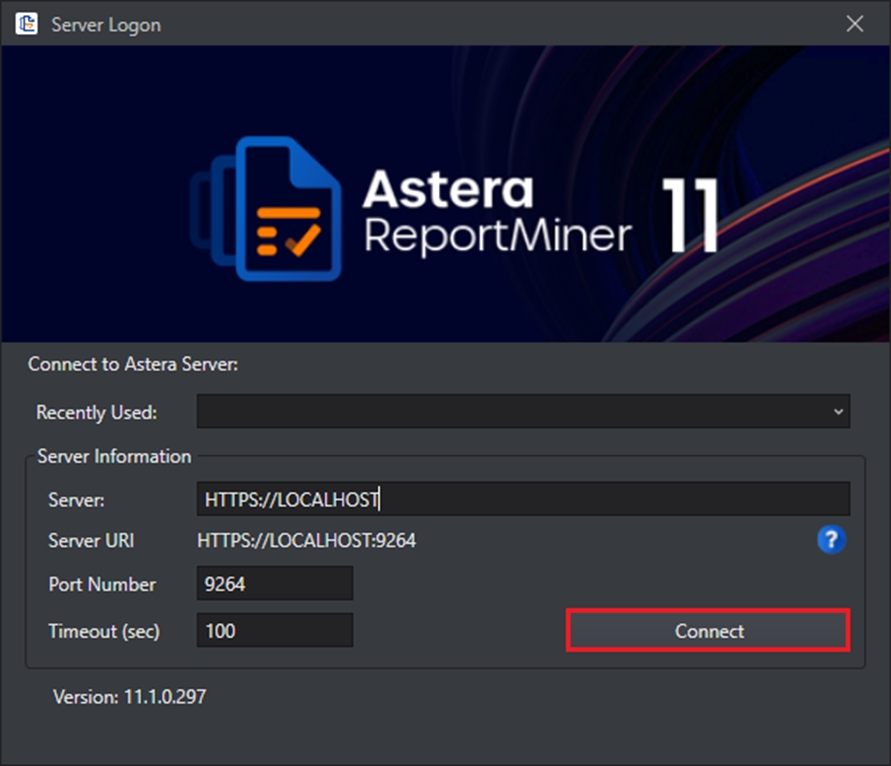

After you have successfully installed Astera client and server applications, open the client application and you will see the Server Connection screen as pictured below.

Enter the Server URI and Port Number to establish the connection.

The server URI will be the IP address of the machine where Astera Integration server is installed.

Server URI: (HTTPS://IP_address)

The default port for the secure connection between the client and the Astera Integration server is 9264.

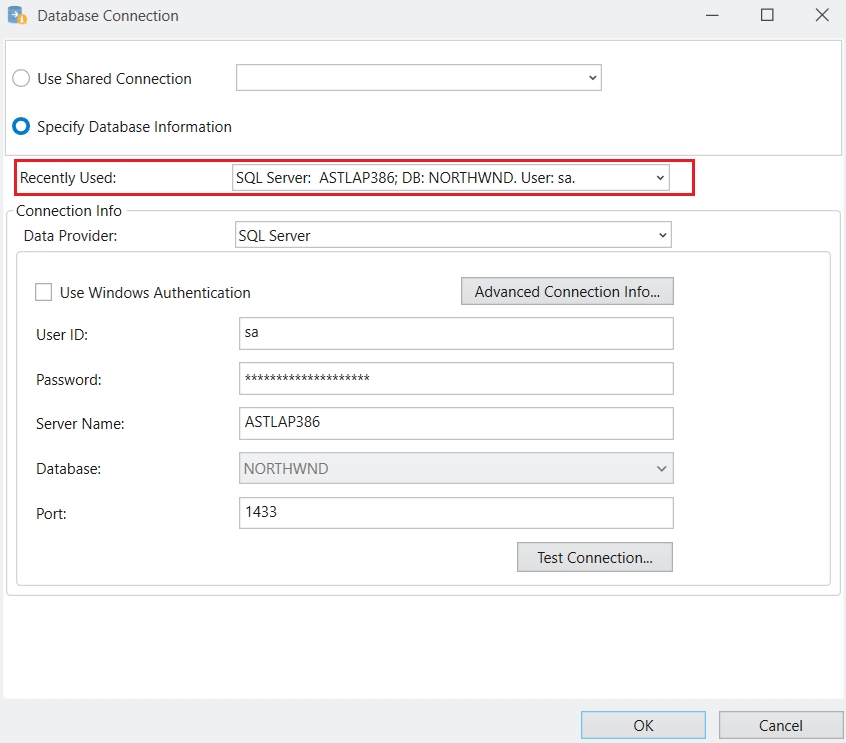

If you have connected to any server recently, you can automatically connect to that server by selecting that server from the Recently Used drop-down list.

Click Connect after you have filled out the information required.

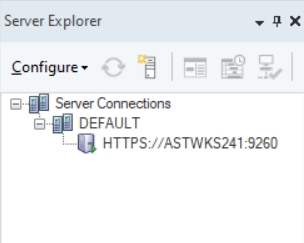

The client will now connect to the selected server. You should be able to see the server listed in the Server Explorer tree when the client application opens.

To open Server Explorer go to Server > Server Explorer or use the keyboard shortcut Ctrl + Alt + E.

Before you can start working with the Astera client, you will have to create a repository and configure the server.

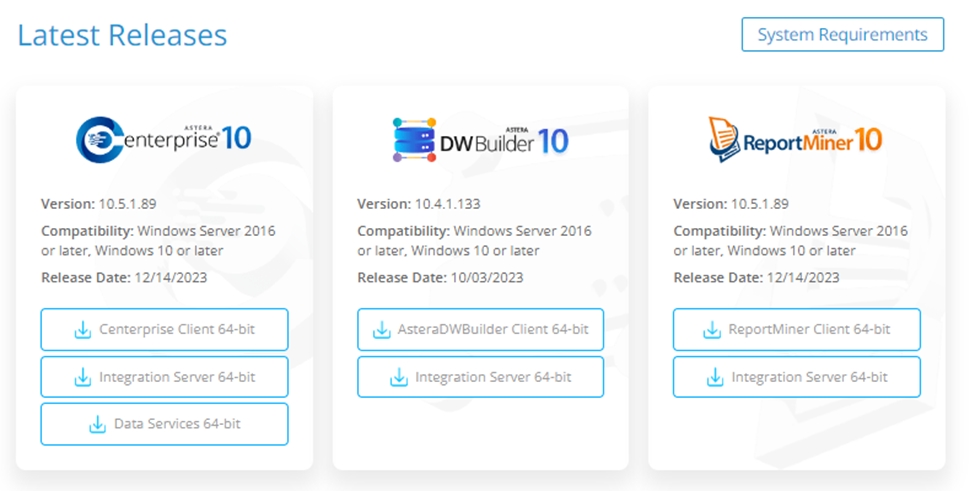

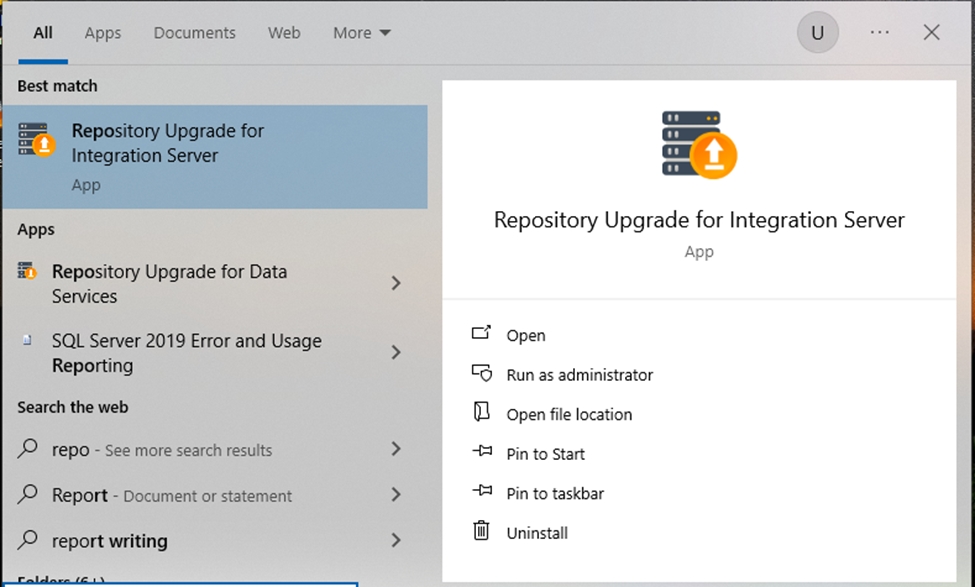

Existing Astera customers can upgrade to the latest version of Astera Data Stack by executing an exe. script, which automates the repository update to the latest release. This streamlined approach enhances the efficiency and effectiveness of the upgrade process, ensuring a smoother transition for users.

To start, download and run the latest server and client installers to upgrade the build.

Run the Repository Upgrade Utility to upgrade the repository.

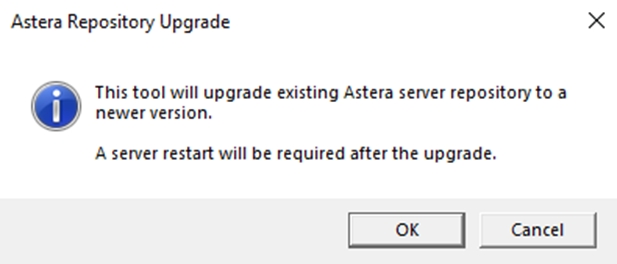

Once run, you will be faced with the following prompt.

Click OK and the repository will be upgraded.

Once done, you will be able to view all jobs, schedules, and deployments that you previously worked with in the Job Monitor, Scheduler, and Deployment windows.

This concludes the working of the Repository Upgrade Utility in Astera Data Stack.

Before you start using the Astera server, a repository must be set up. Astera supports SQL Server and PostgreSQL for building cluster databases, which can then be used for maintaining the repository. The repository is where job logs, job queues, and schedules are kept.

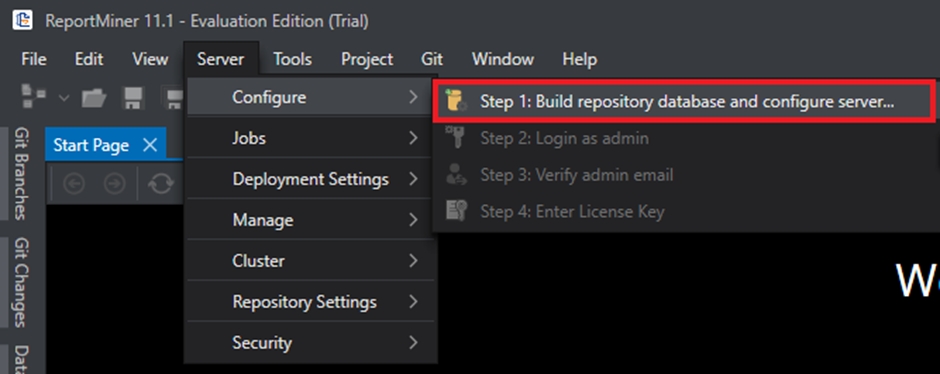

To see these options, go to Server > Configure > Step 1: Build repository database and configure server.

The first step is to point to the SQL Server or PostgreSQL instance where you want to build the repository and provide the credentials to establish the connection.

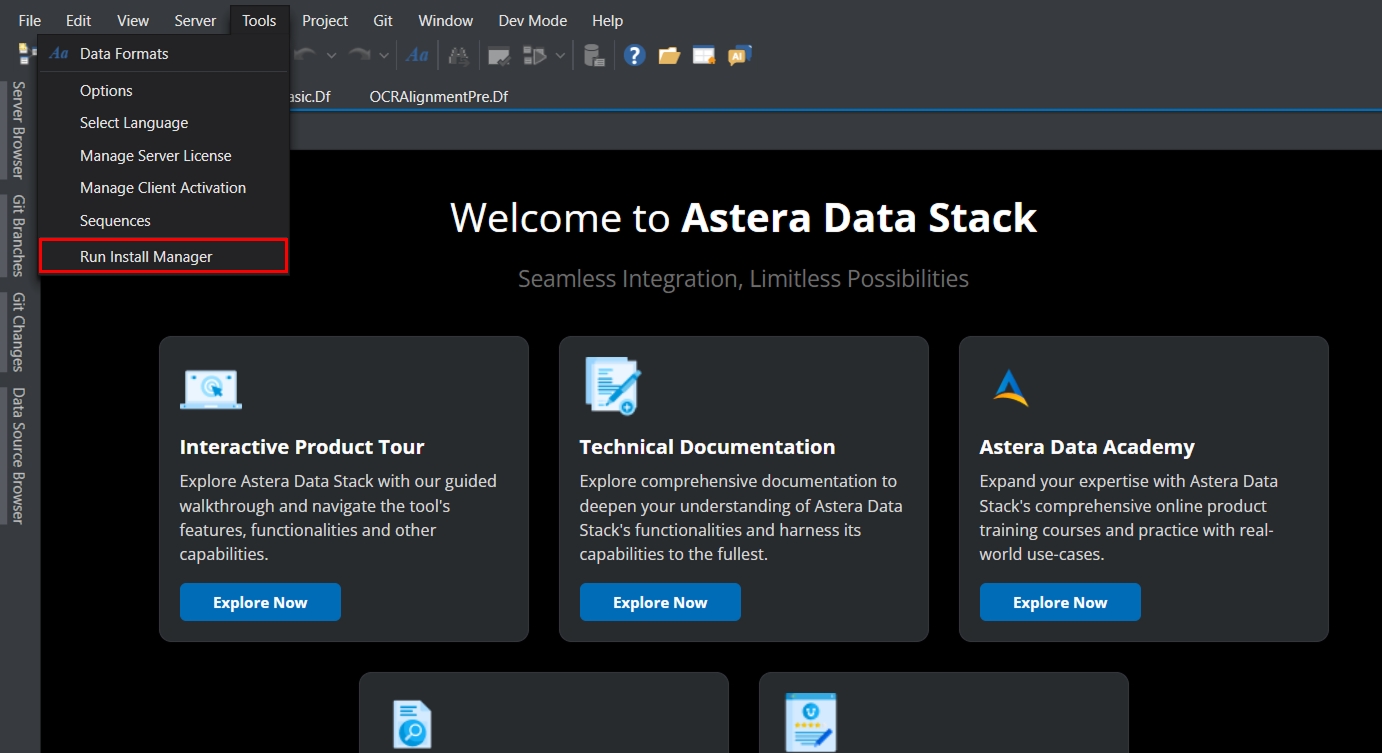

Open Astera as an administrator.

Once Astera is open, go to the Tools > Run Install Manager.

The Install Manager welcome window will appear. Click on Next.

Astera Cloud allows you to seamlessly transfer content between your local machine and the cloud environment. Whether bringing local files into Astera Designer or saving work back to your system, the Designer offers intuitive tools for efficient content management. This document explains how to perform both upload and download operations.

Requires ASP .NET Core 8.0.x Windows and Desktop Runtime 8.0.x

Requires ASP .NET Core 8.0.x Windows and Desktop Runtime 8.0.x

Seamless Migration: Easily migrate existing on-premise flows to the cloud while maintaining compatibility.

Comprehensive Data Operations: Handle missing values, remove duplicates, fix formatting, and apply transformations through simple natural language instructions.

Recipe Mode: View your data manipulation actions as step-by-step English instructions for clarity and reuse.

Workflow Automation (available in Cloud and on-prem client/server): Automate preparation processes with scheduled runs and real-time job monitoring.

Data Privacy Protection: Your data remains secure within the Astera platform, no data is ever sent to external LLMs, with the AI used solely to interpret natural language instructions.

Server: 8GB or greater (recommended)

32GB or greater for large data processing

32GB or greater for AI processing

Hard Disk Space

Client: 2GB– (if .NET Framework is pre-installed)

Server: 2GB – (if .NET Framework is pre-installed)

Additional 300 MB if .NET Framework is not installed

AI Subscription Requirements

OpenAI API (provided as part of the package)

LLAMA API

Together AI

Postgres Db (if knowledgebase is needed)

Other

Requires ASP.NET Core 8.0.x Windows and Desktop Runtime 8.0.x for the client, .NET Core Runtime 8.0.x for the server

Client Application Processor

Dual Core or greater (recommended); 2.0 GHz or greater

Server Application Processor

8 Cores or greater (recommended)

Repository Database

MS SQL Server 2008R2 or newer, or PostgreSQL v15 or newer for hosting repository database

Operating System - Client

Windows 10 or newer

Operating System - Server

Windows: Windows 10 or Windows Server 2019 or newer

Memory

Client: 8GB or greater (recommended)

Go to Server > Configure > Step 1: Build repository database and configure server.

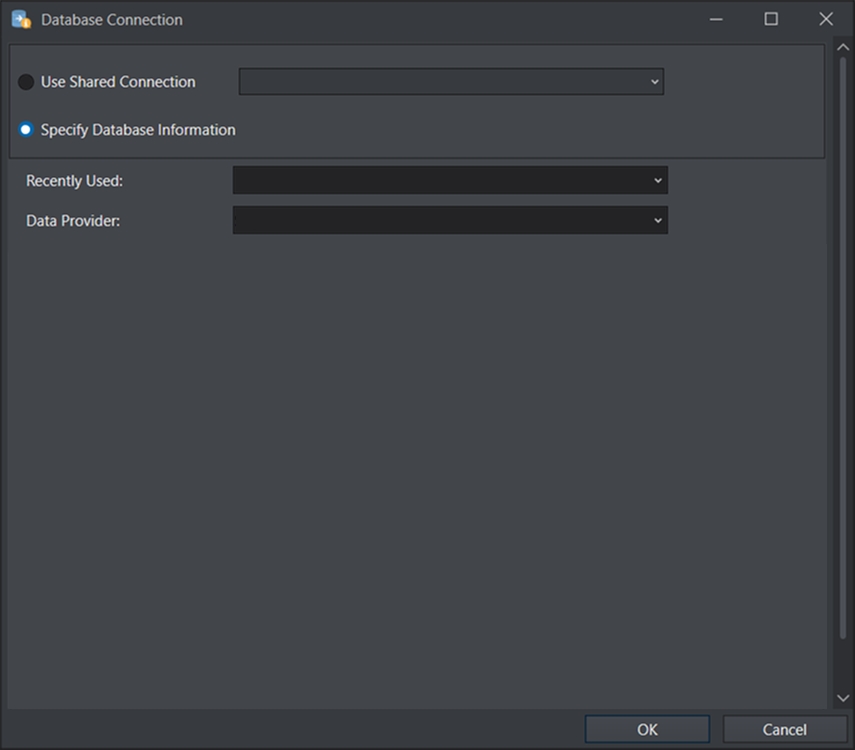

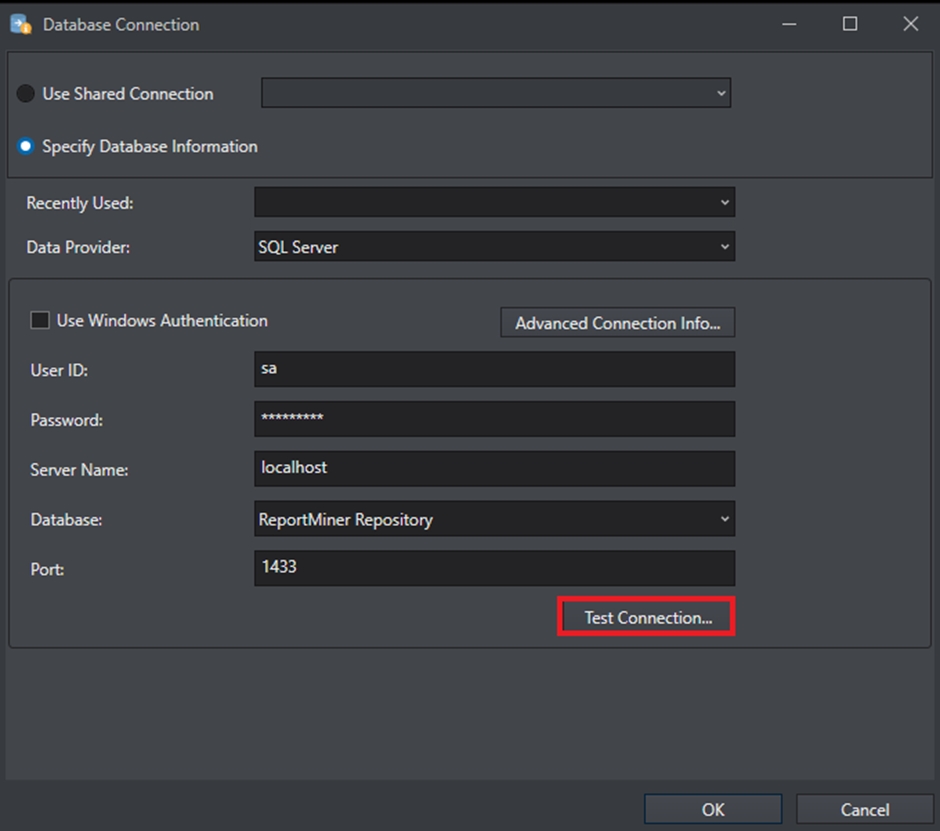

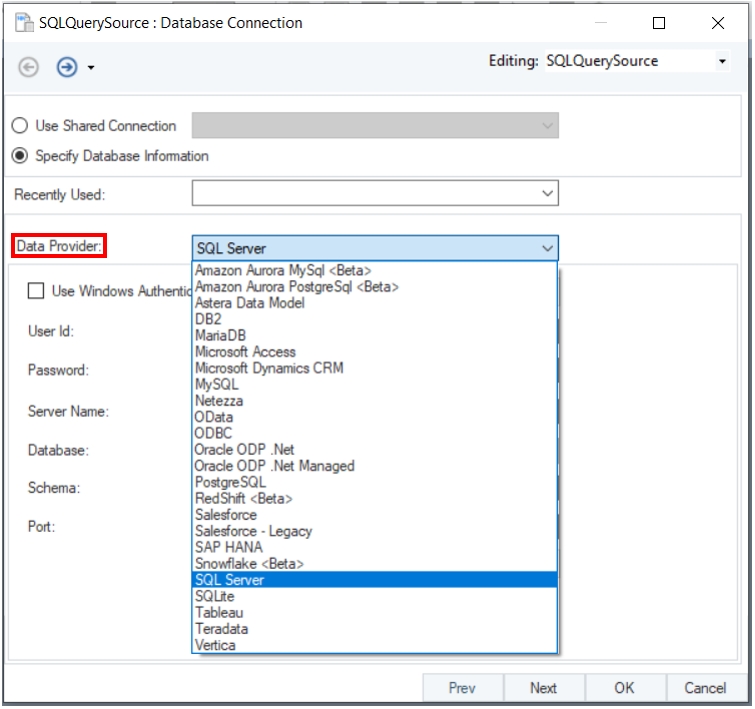

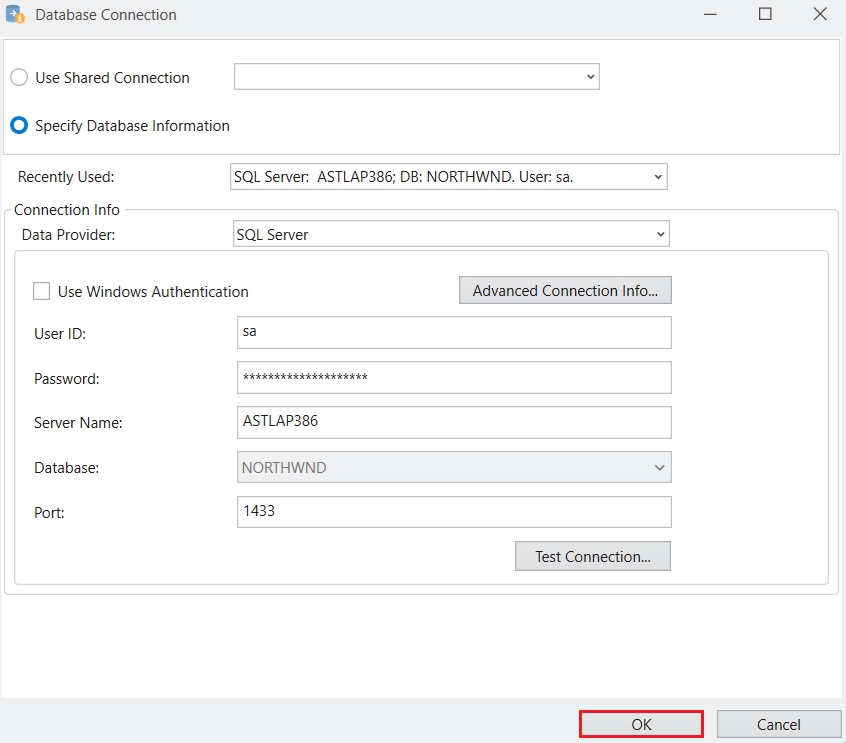

Select SQL Server from the Data Provider drop-down list and provide the credentials for establishing the connection.

From the drop-down list next to the Database option, select the database on the SQL instance where you want to host the repository.

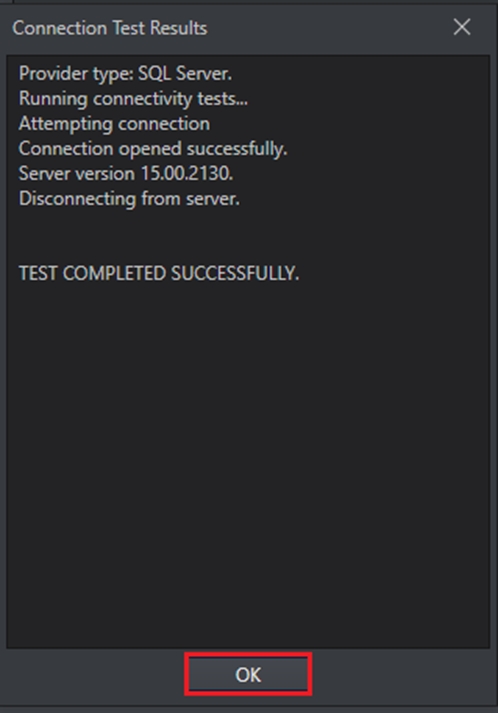

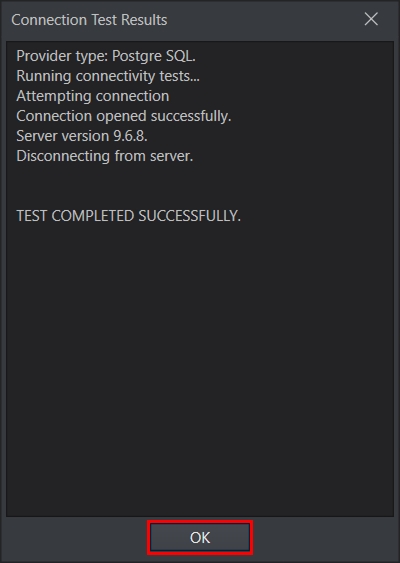

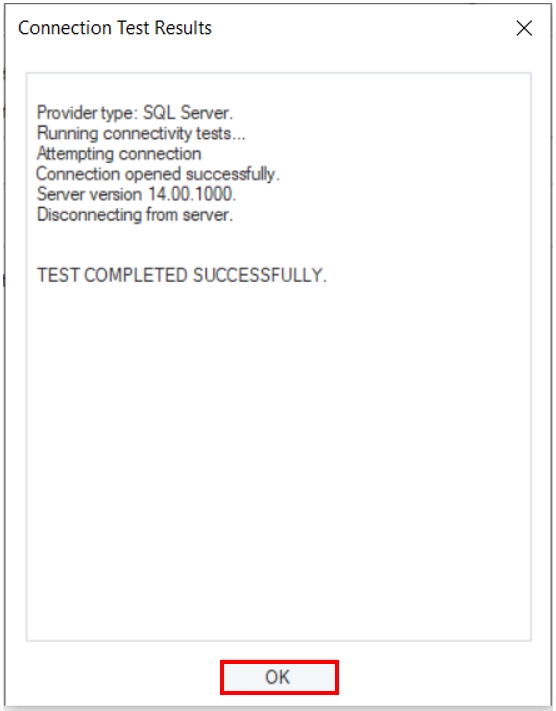

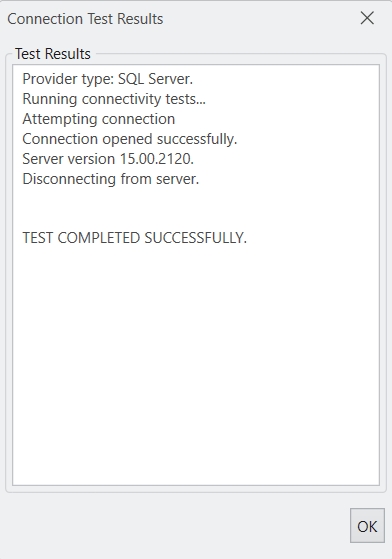

Click Test Connection to test whether the connection is successfully established or not. You should be able to see the following message if the connection is successfully established.

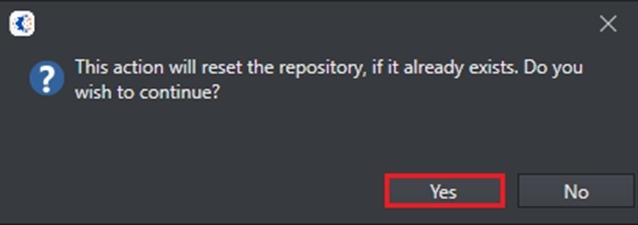

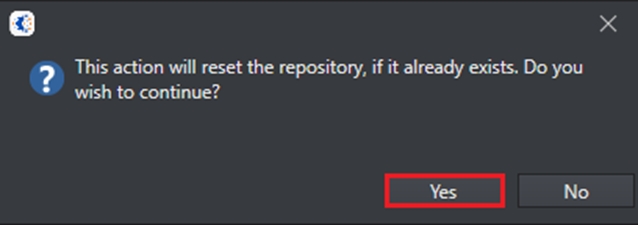

Click OK to exit out of the test connection window and again click OK, the following message will appear. Select Yes to proceed.

The repository is now set up and configured with the server to be used.

The next step is to log in using your credentials.

Go to Server > Configure > Step 1: Build repository database and configure server.

Select PostgreSQL from the Data Provider drop-down list and provide the credentials for establishing the connection.

From the drop-down list next to the Database option, select the database on the PostgreSQL instance where you want to host the repository.

Click Test Connection to test whether the connection is successfully established or not. You should be able to see the following message if the connection is successfully established.

Click OK and the following message will appear. Select Yes to proceed.

The repository is now set up and configured with the server to be used.

The next step is to log in using your credentials.

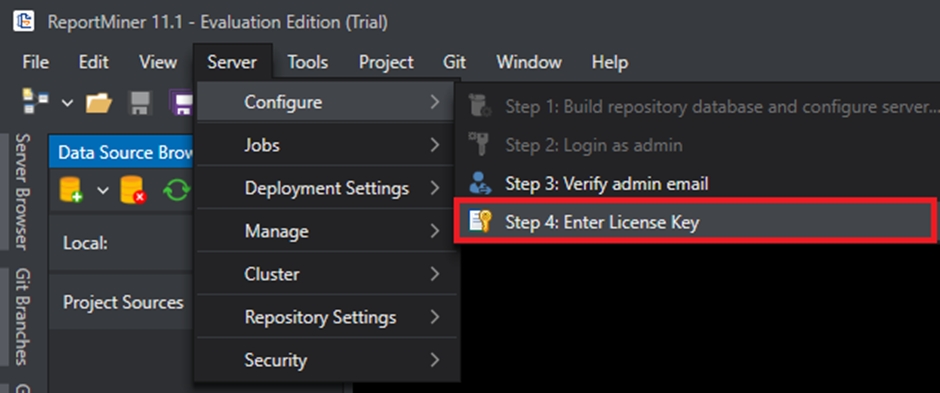

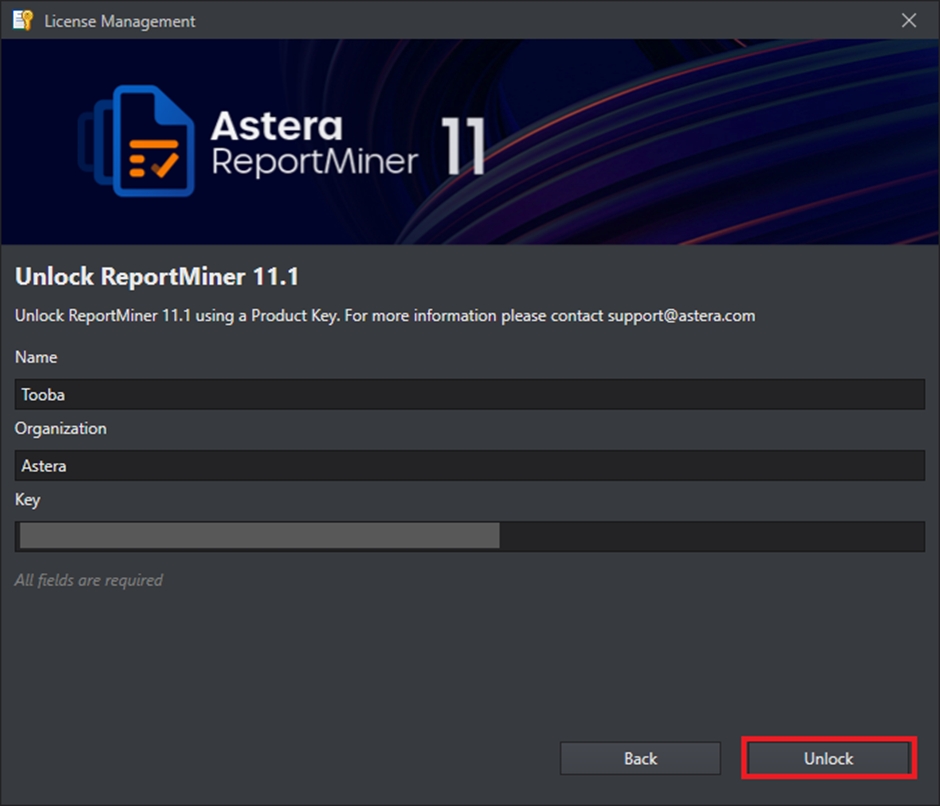

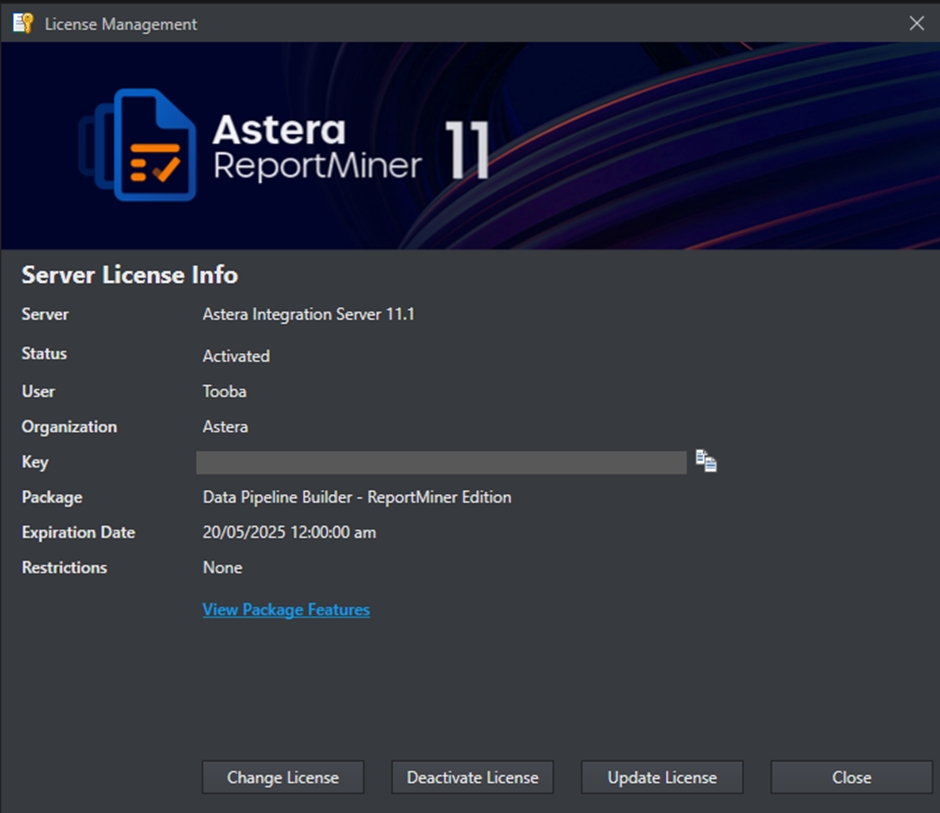

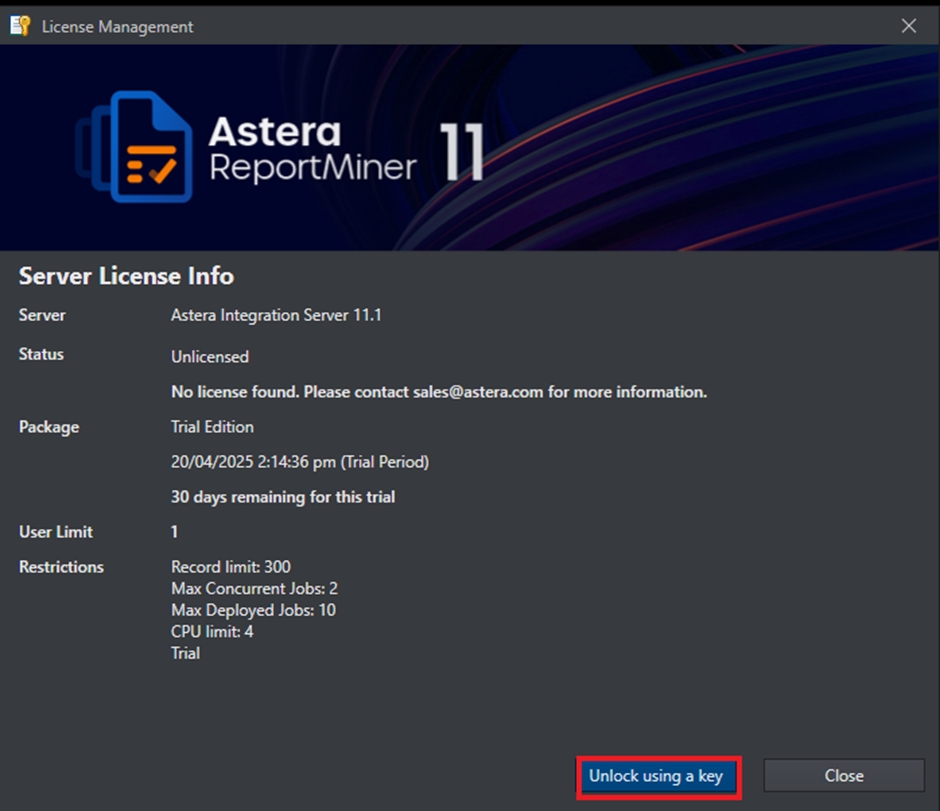

After you have configured the server, and logged in with the admin credentials, the last step is to insert your license key.

Go to Server > Configure > Step 4: Enter License Key.

On the License Management window, click on Unlock using a key.

Enter the details to unlock Astera – Name, Organization, and Product Key and select Unlock.

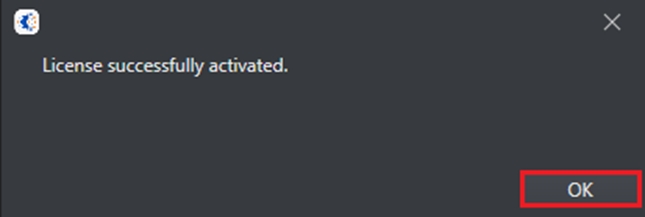

You’ll be shown the message that your license has been successfully activated.

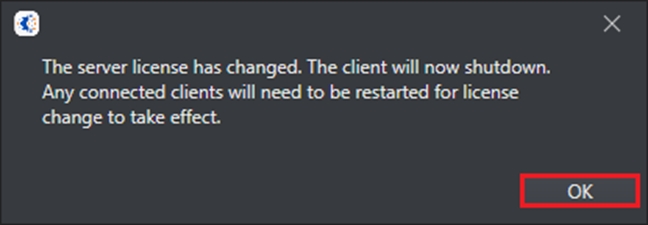

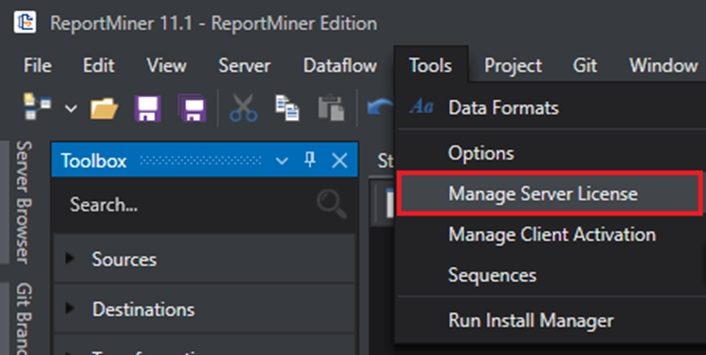

Your client is now activated. To check your license status, you can go to Tools > Manage Server License.

This opens a window containing information about your license.

This concludes unlocking Astera client and server applications using a single licensing key.

If the prerequisite packages are already installed, the Install Manager will inform you about them and give you the option to uninstall or update them as needed.

If the prerequisite packages are not installed, then the Install Manager will present you with the option to install them. Check the box next to the pre-requisite package, and then click on Install.

During the installation, the Install Manager window will display a progress bar showing the installation progress.

You can also cancel the installation at any point if necessary.

Once the installation is complete, the Install Manager will prompt you. Click on Close to exit out of the Install Manager.

The packages for AGL and OCR usage are now installed, and the features are ready to use.

In case the Integration Server is installed on a separate machine, we will need to install the packages for AGL and OCR there as well.

Your local file explorer will open. Here you can select the files (or folder if using the dropdown option) you want to upload and click Open.

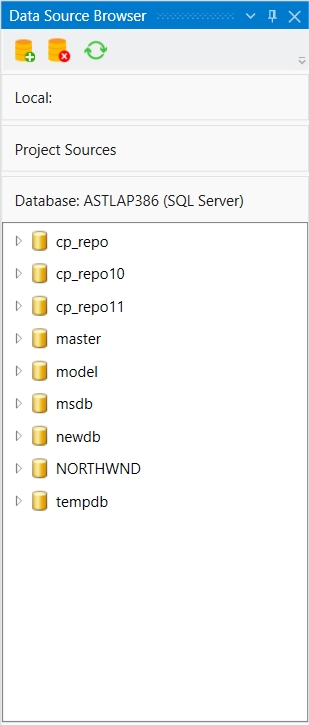

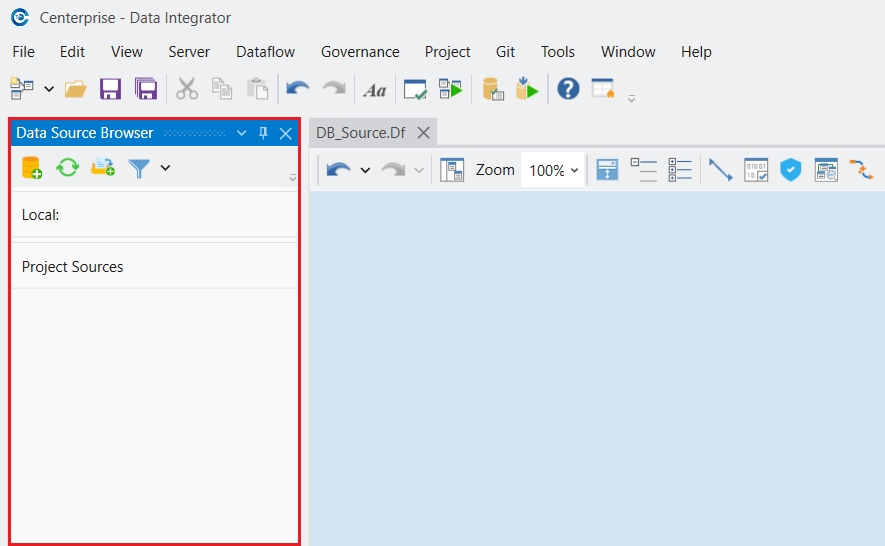

A success message will appear and the Data Source Browser panel will open with the uploaded content in a Default Uploads folder.

The Data Source Browser panel also has a similar upload button . This option will only be enabled when a user has selected a folder, as this button is designed to upload files or folders to the selected folder rather than the Default Folder. The rest of the steps remain the same as before.

To save files or folders from Astera Cloud to your local machine, you can use the download functionality available in the Designer.

In this document, we will learn how to download files and folders from the Designer.

You can download content by first selecting a file or folder in the Data Source Browser panel, this will enable the Download button.

Click the Download button.

Your local file explorer will open, allowing you to choose where you want to save the downloaded content. Select your preferred download location and click OK. The selected content will be downloaded to your chosen location.

Once the download is complete, a success message will appear confirming that the download was successful.

Managing user access in Astera Cloud Portal is user-centric and allows you to efficiently control who can access your cloud resources. This guide will walk you through the process of managing users in your Organization.

Let's begin by navigating to the User Management section in your Astera Cloud Portal. Here, you'll see the User Management interface displaying existing users and their roles

To add a new user to your portal, click on the Invite User button.

The Add User pop-up will appear. Here, you'll need to provide the following information:

Email Address: Enter the email address of the user you want to invite

Role Assignment: You can assign one of two roles to the new user:

Admin: Provides full administrative access to the portal, including the ability to manage other users, access all resources, and modify settings

User: Provides standard user access with limited administrative privileges

Click Send Invite to send an invitation email to the new user. A success message will appear notifying if the email was sent successfully.

The user will now be able to accept the invitation and login to this organization.

After sending an invitation, you can monitor the status of your invites in the Invited section.

The interface displays several important details about each user:

Email: The user's email address

Roles: Displays the assigned role (Admin or User)

Status: Shows whether the user is Active, Accepted or Cancelled

Active: The invitation has been sent but not yet accepted

Once users have accepted their invitations and are part of your organization, an Admin can manage their access and roles as needed in the Users section.

In the Actions column for each active user, you'll find a menu with the following options:

Delete: Permanently removes the user from the organization

Deactivate: Temporarily disables the user's access without removing them

Make Admin: Promotes a regular user to admin role

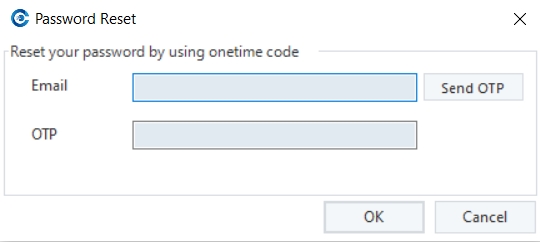

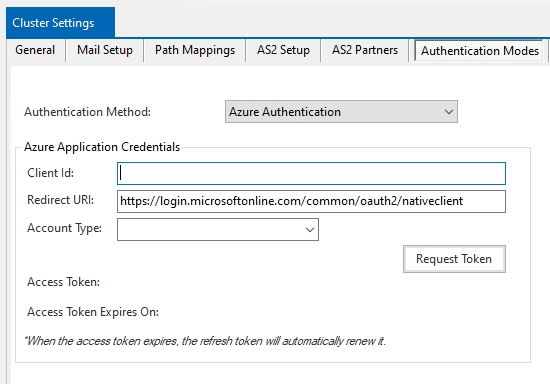

Once you have logged into the Astera client, you can set up an admin email to access the Astera server. This will also allow you to be able to use the “Forgot Password” option at the time of log in.

In this document, we will discuss how to verify admin email in Astera.

1. Once logged in, we will now proceed to enter an email address to associate with the admin user by verifying the email address.

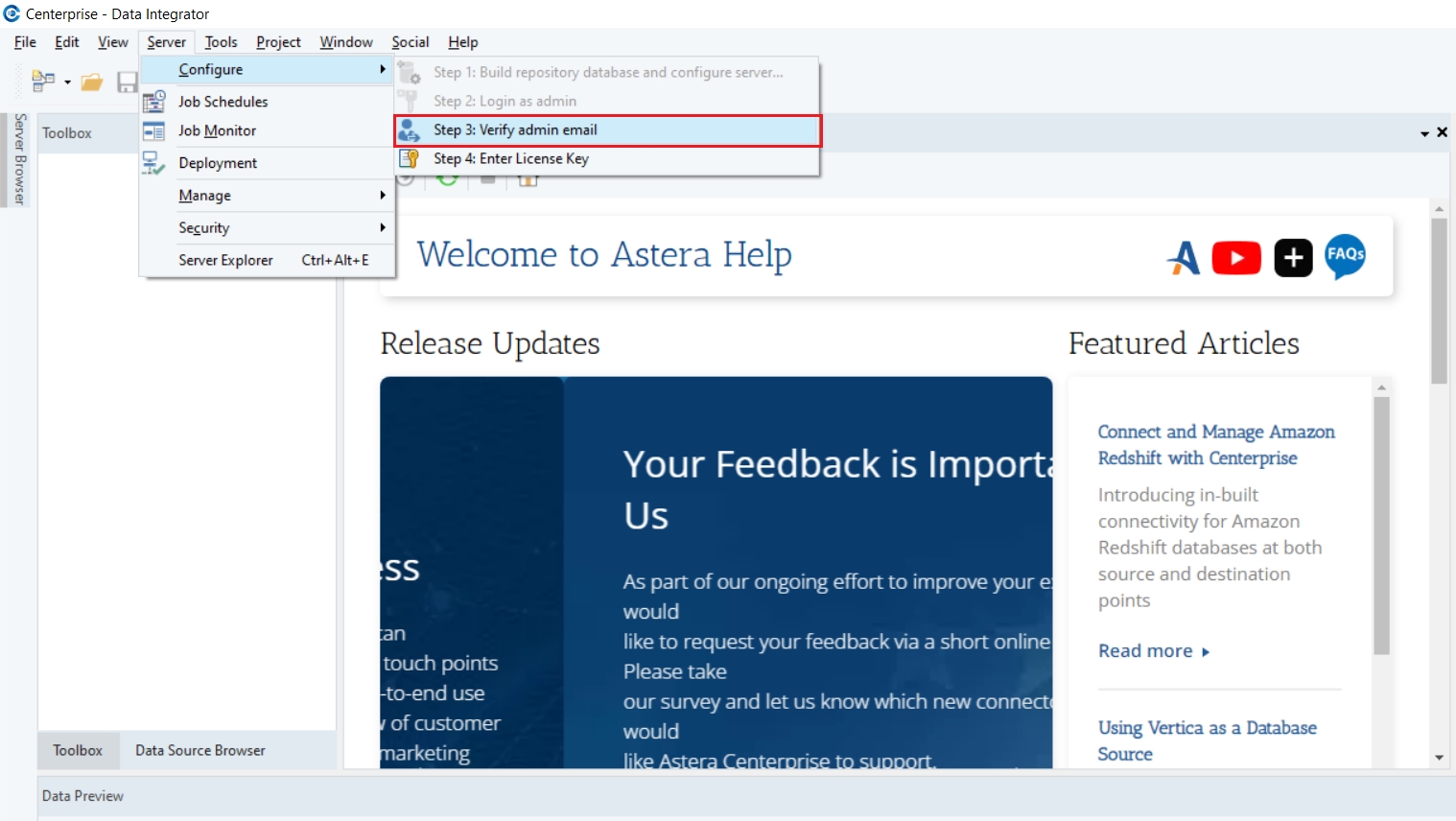

Go to Server > Configure > Step 3: Verify Admin Email

In this document, you’ll learn how to use the Distinct transformation in Astera Dataprep to remove duplicate rows based on selected columns, ensuring your dataset contains only unique records.

To apply Distinct in Astera Dataprep, click on the Transform option in the toolbar and select Distinct from the drop-down.

Once selected, the Recipe Configuration – Distinct panel will open.

Accepted: The user has accepted the invitation and can access the portal

Cancelled: The invitation for the user has been cancelled

Actions:

Cancel Invitation: Cancel an invitation here.

Resend Invitation: Resend an invitation here

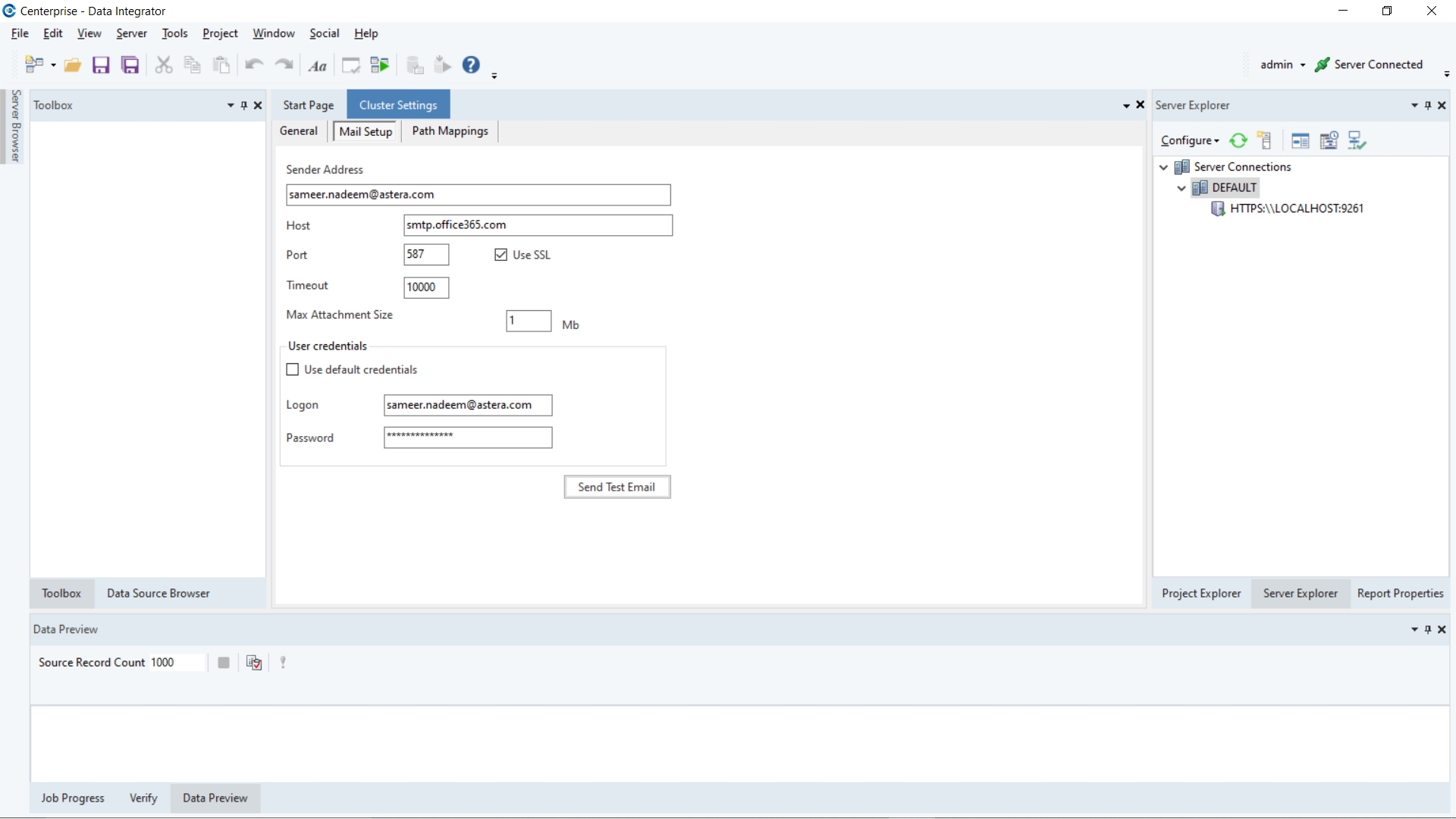

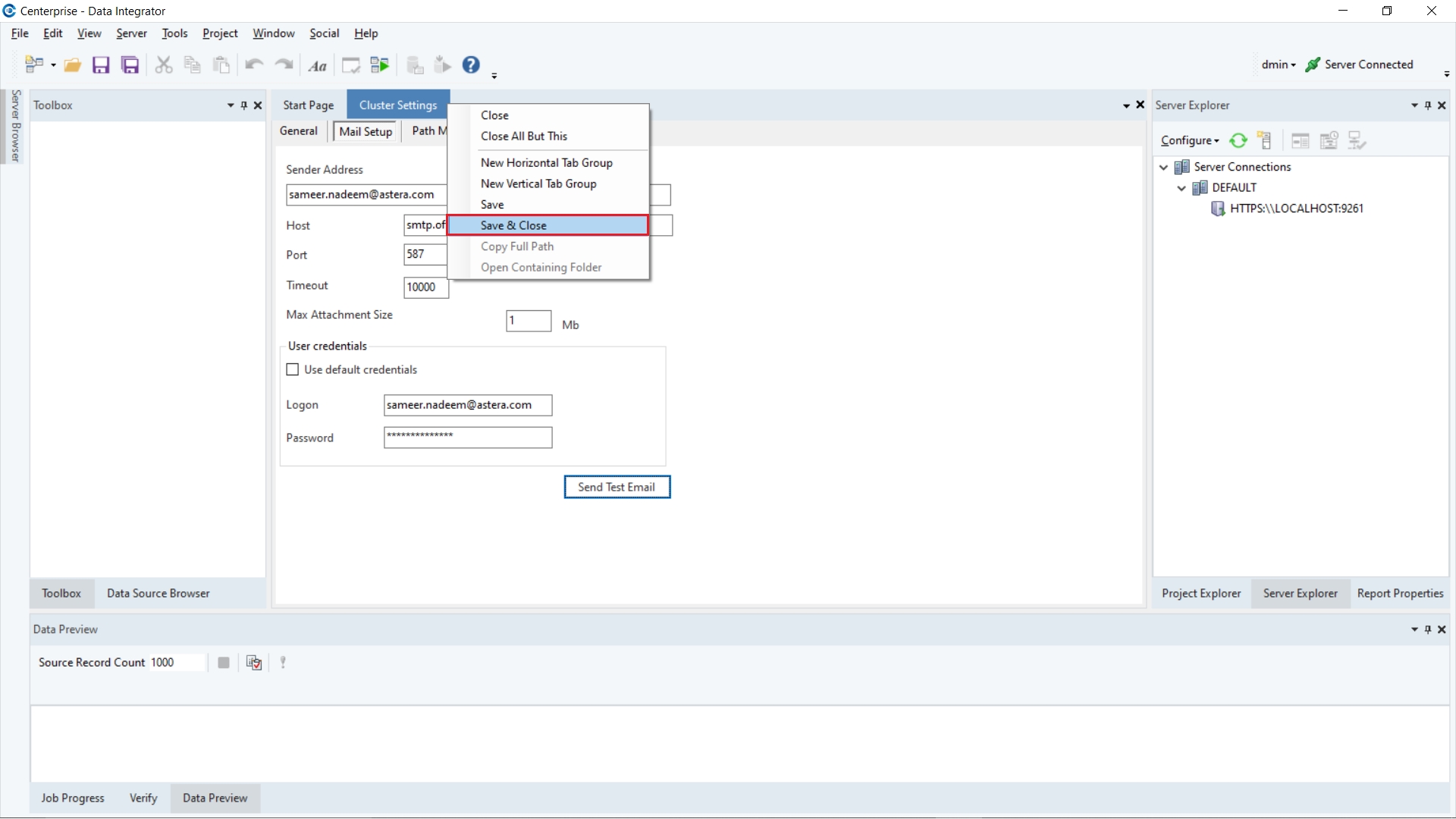

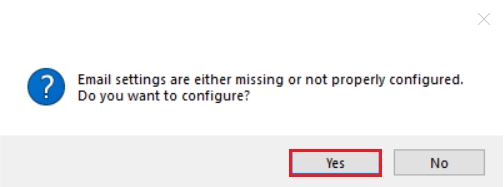

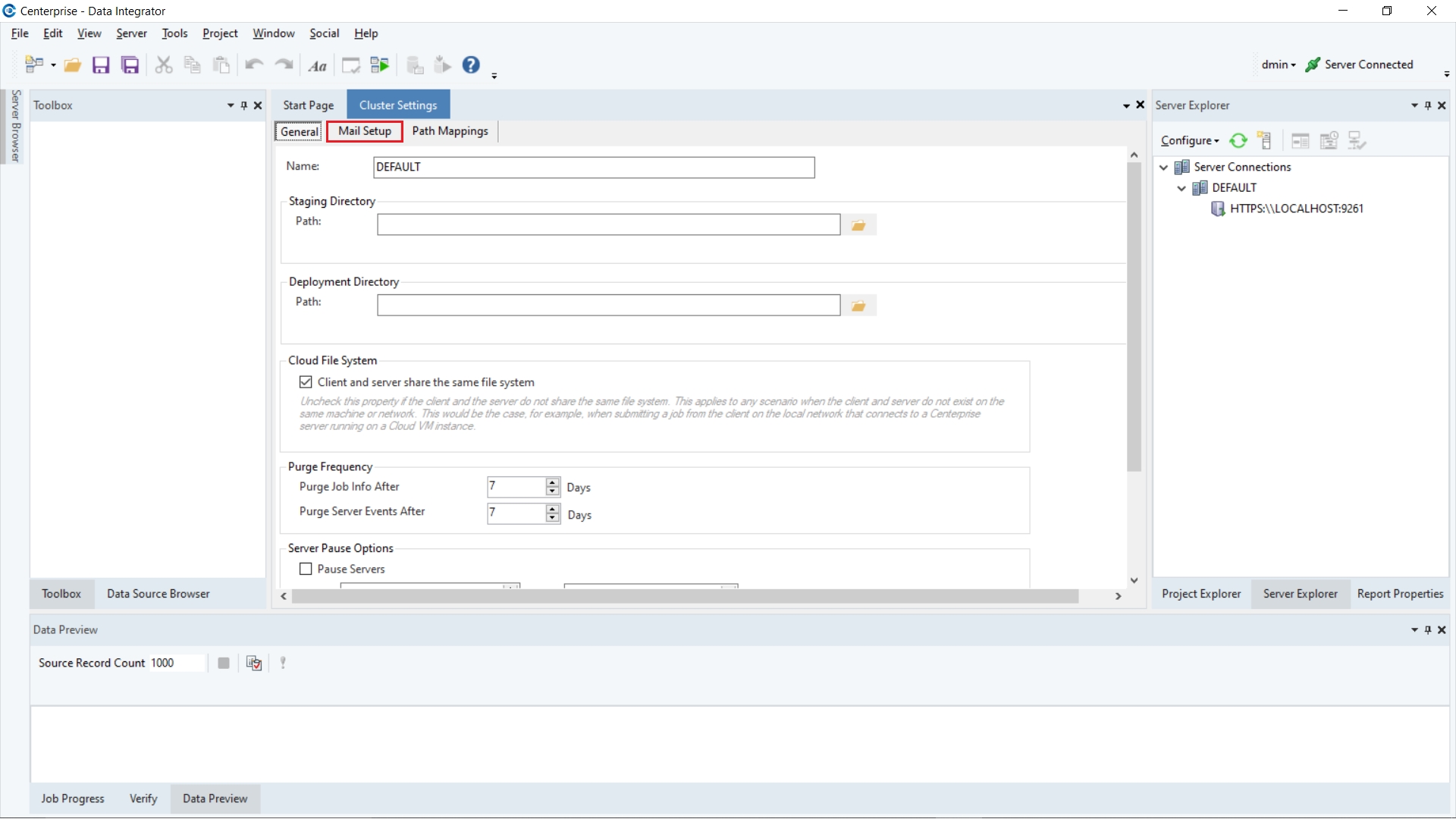

2. Unless you have already set up an email address in the Mail Setup section of Cluster settings, the following dialogue box will pop up asking you to configure your email settings.

Click on Yes to open your cluster settings.

Click on the Mail Setup tab.

3. Enter your email server settings.

4. Now, right-click on the Cluster Settings active tab and click on Save & Close in order to save the mail setup.

5. Re-visit the Verify Admin Email step by going to Server > Configure > Step 3: Verify Admin Email.

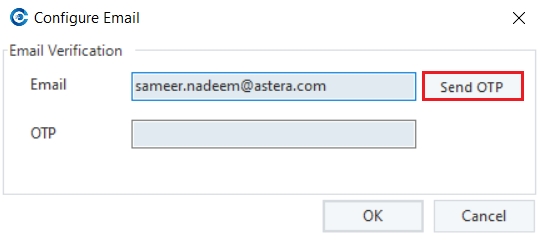

This time, the Configure Email dialogue box will open.

6. Enter the email address you previously set up and click on Send OTP.

7. Use the OTP from the email you received and enter it in the Configure Email dialogue and proceed.

On correct entry of the OTP, an email successfully configured dialogue will appear.

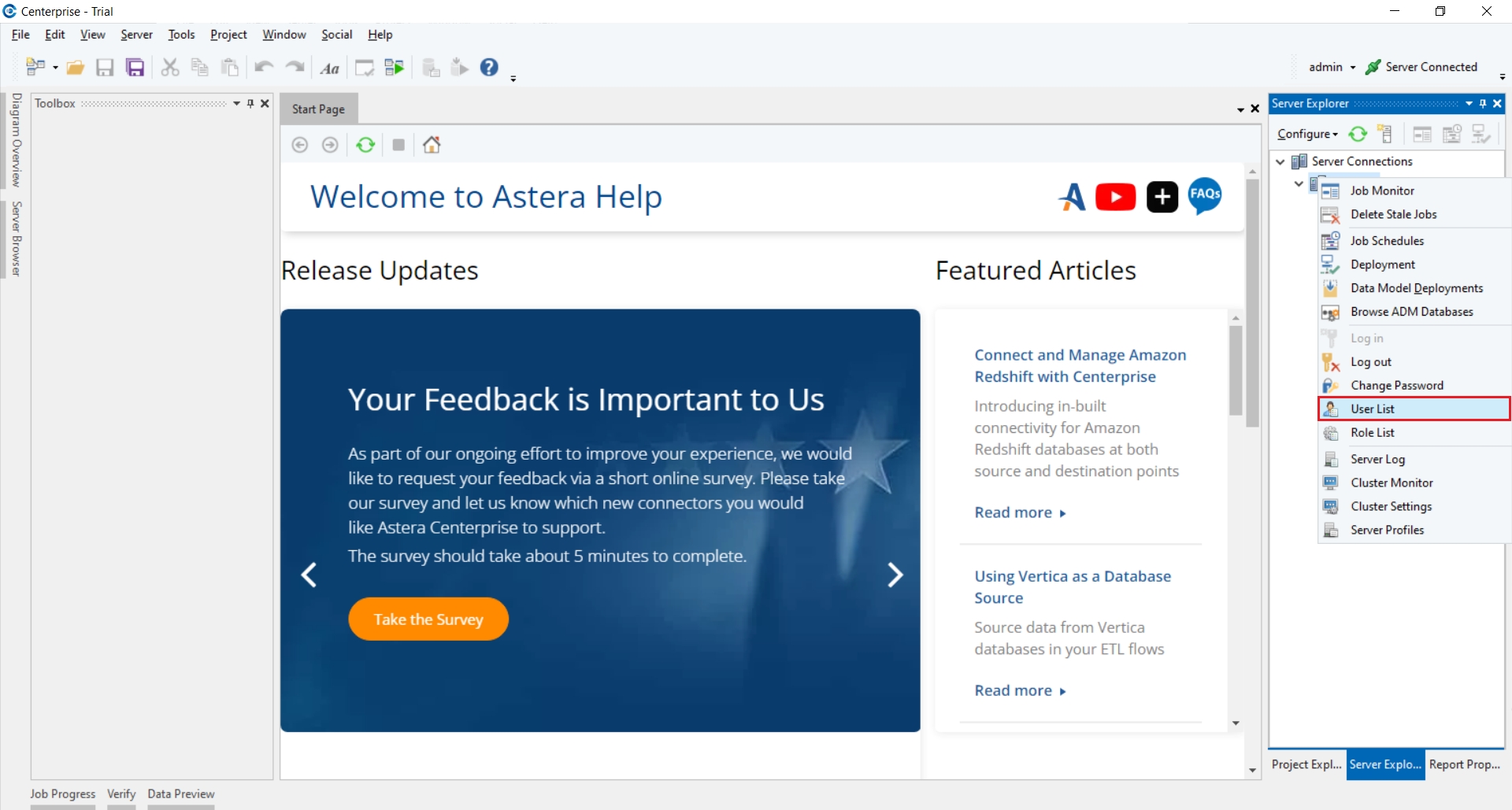

8. Click OK to exit it. We can confirm our email configuration by going to the User List.

Right click on DEFAULT under Server Connections in the Server Explorer and go to User List.

9. This opens the User List where you can confirm that the email address has been configured with the admin user.

The feature is now configured and can be utilized when needed by clicking on Forgot Password in the log in window.

This opens the Password Reset window, where you can enter the OTP sent to the specified e-mail for the user and proceed to reset your password.

This concludes our discussion on verifying admin email in Astera.

Column Names: Here, you can select one or more columns from the drop-down. The uniqueness check will be applied based on the selected columns.

After selecting the columns, click Apply.

Now, in the grid, you can see that only unique records, based on your selected columns, remain in the dataset.

In order for advanced features such as AGL, OCR and Text Converter to work in Astera, different packages are required to be installed, such as Python, Java etc. To avoid the tedious process of separately installing these, Astera provides a built in Install Manager in your tool.

There are two types of packages which are required to be installed:

Prerequisites for Python Server: This package is required to be installed on server machine only.

Prerequisites for ReportMiner: This package is required to be installed on client and server machine.

The packages being installed for AGL are listed as follows:

is installed

with the following packages:

The packages being installed for OCR are listed as follows:

Python packages

The packages being installed for Python Server are listed as follows:

Python Server executable is installed (comes with all packages necessary for Python Server)

In the following documents, we will look at how to use the install manager to install these packages on client and server machines.

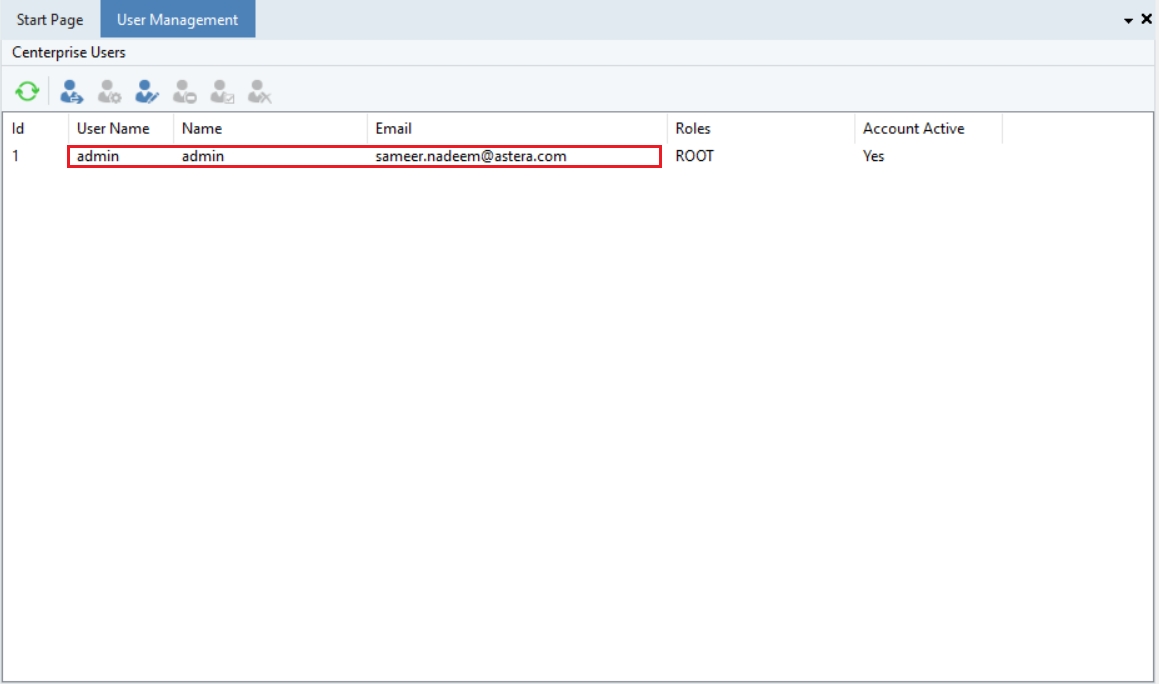

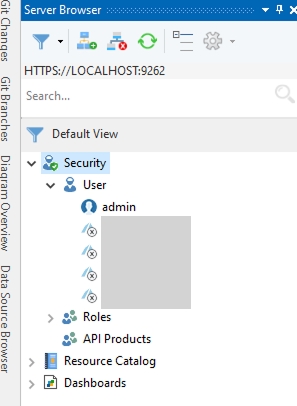

This article introduces the role-based access control mechanism in Astera. This means that administrators can grant or restrict access to various users within the organization, based on their role in the entire data management cycle.

In this article, we will look at the user lists and role management features in detail.

Username: admin

Password: Admin123

Once you have logged in, you now have the option to create new users and we recommend you to do this as a first step.

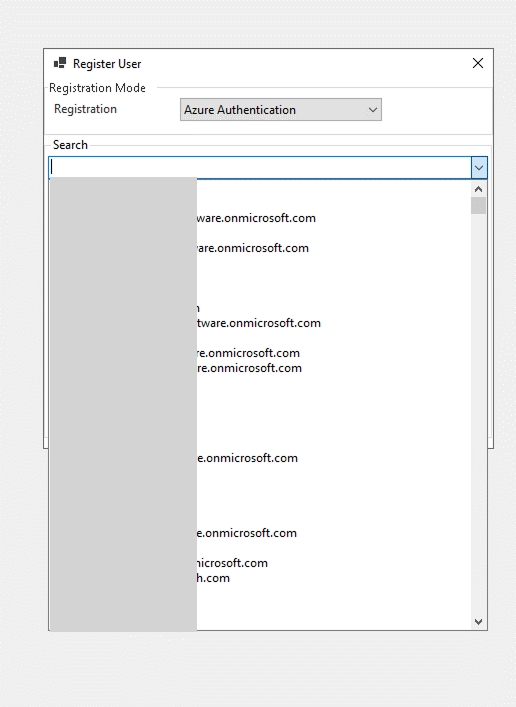

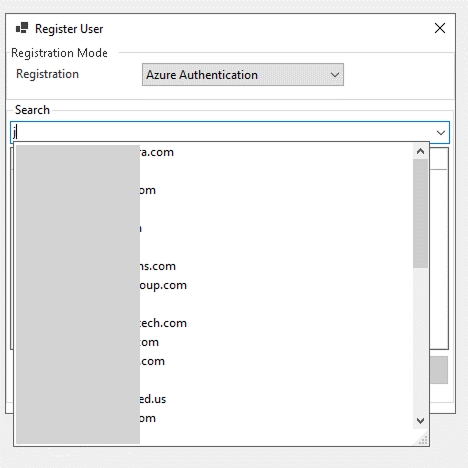

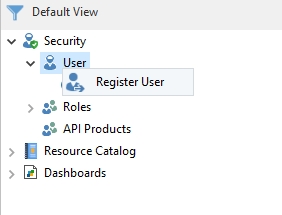

To create/register a new user, right-click on the DEFAULT server node in the Server Explorer window and select User List from the context menu.

This will open the Server Browser panel.

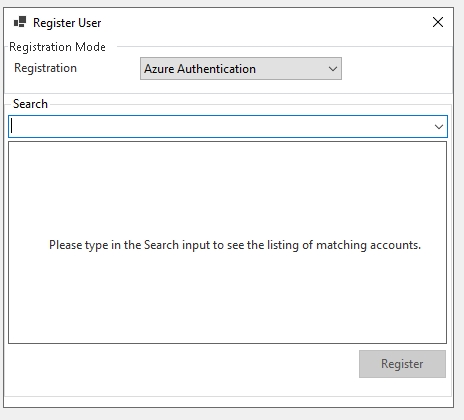

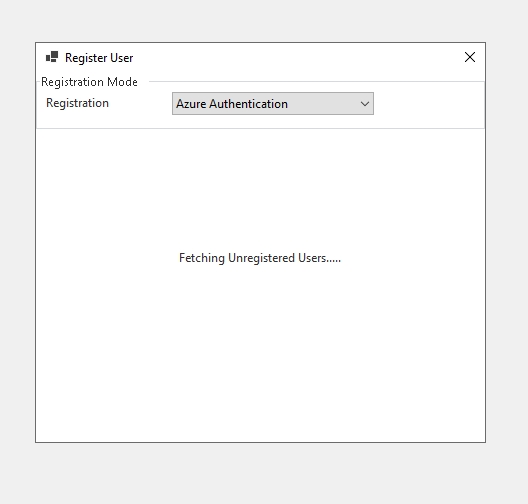

Under the Security node, right-click on the User node and select Register User from the context menu.

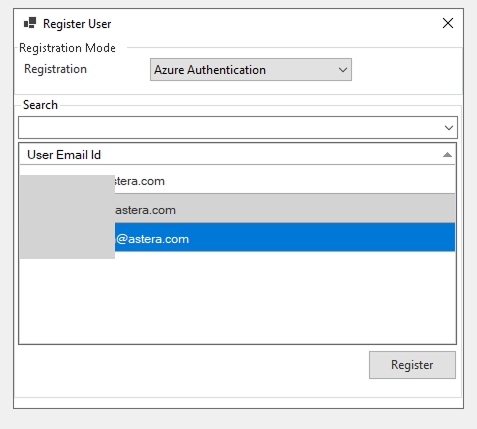

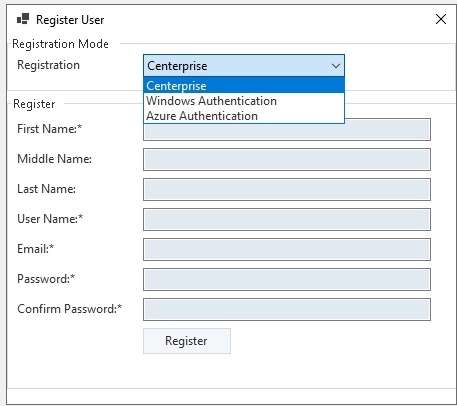

This will open a new window. You can see quite a few fields required to be filled here to register a new user.

Once the fields have been filled, click Register and a new user will be registered.

Now that a new user is registered, the next step is assign roles to the user.

Select the user you want to assign the role(s) to and right-click on it. From the context menu, select Edit User Roles.

A new window will open where you can see all roles that are there by default in Astera or are custom created. We haven’t created any custom role, so we’ll see the three default roles that are - Developer, Operator, and Root.

Select the role that you want to assign to the user and click on the arrows in the middle section of the screen. You’ll see that the selected role will get transferred from the All Roles section to the User Roles section.

After you have assigned the roles. click OK and the specific role(s) will be assigned to the user.

Astera lets the admin manage resources allowed to any user. They can assign permissions of resources or they can restrict resources.

To edit role resources, right-click on any of the roles and select Edit Role Resources from the context menu.

This will open a new window. Here, you can see four nodes on the left under which resources can be assigned,

The admin can provide a role with resources from the Url node, the Cmd node, access to deployments from the REST node and access to Catalog aritfacts from the Catalog Node.

Expanding the Url node shows us the following resources,

Expanding the Cmd node will give us the following checkboxes as resources.

If we expand the REST checkbox, we can see a list of available API resources, including endpoints you might have deployed.

Upon expanding the Catalog node, we can see the artifacts that have been added to the Catalog, along with which endpoint permissions are to be given.

This concludes User Roles and Access Control in Astera Data Stack.

In this document, you’ll learn how to use the Stack transformation in Astera Dataprep to combine multiple columns into a single column for easier analysis.

Suppose you have a dataset containing patient glucose readings across different times of the day. By applying Stack, you can merge these time-specific columns into one Glucose Level column, with an additional Category column indicating the time of measurement, making the data easier to analyze and visualize.

To apply Stack in Astera Dataprep, click on the Transform option in the toolbar and select Stack from the drop-down.

Once selected, the Recipe Configuration – Stack panel will open.

Column Name: Select the column(s) you want to stack.

Stacking Option: Defines how values and identifiers are combined when stacking columns.

Repeat: Repeats identifier or reference values for each stacked entry.

Category: Specify the category column to label each stacked group.

Once done, click Apply.

Now, in the grid, you can see that all glucose measurements are combined into a single column, with a corresponding category indicating the time of day they were taken.

In this document, you will explore how to aggregate in Astera Dataprep.

To aggregate in Astera Dataprep, you can click on the Transform option in the toolbar and select Aggregate option from the drop-down.

Once selected, the Recipe Configuration – Aggregate panel will open.

Here, you can configure the Aggregate section by selecting column names in the Name drop-down, adding any expressions in the Calculation column, and selecting an Aggregate Function from the drop-down with all the aggregate functions.

Once done, you can click on Apply

Now, in the grid, you can see that the columns have been grouped by the EmployeeID and a Count aggregate function has been applied to the OrderID field.

This concludes the document on using Aggregate in Astera Dataprep.

In this document, we will explore how to concatenate columns in Astera Dataprep.

To begin, click on the Layout option in the toolbar and select the Concatenate Columns option from the drop-down.

This will open the Recipe Configuration – Concatenate Columns panel.

In this panel, you can configure the following:

New Column Name: Enter a name for the new column that will hold the combined values.

Delimiter: Specify the character (such as a space, comma, or dash) that should separate the values.

Column Name(s): Use the drop-down to select the columns you want to concatenate.

Once you're done, click Apply . The new column will appear in the Grid, showing the combined values. For example, a new PC_Country column.

In this document, you’ll learn how to use the Find and Replace function in Astera Dataprep to search for specific values and replace them with new ones across your dataset.

To begin, click on the Cleanse option in the toolbar and select Find and Replace from the drop-down.

This will open the Recipe Configuration – Find and Replace panel.

In this panel, you’ll configure the following options:

Column Selection Properties

Apply to entire dataset: Applies the find and replace action to all columns.

Apply to specific column(s): Applies the action to selected columns only.

Find and Replace

Once you’re done, click Apply . In the Grid, you’ll see that all matching values have been replaced accordingly in the selected column(s).

Alternatively, you can right-click on any column header in the Grid and go to Cleanse > Find and Replace. The same configuration panel will appear with the column already selected. Make any changes you need and click Apply to update your data.

In this document, you’ll learn how to use the Filter transformation in Astera Dataprep to include or exclude records based on a specified condition within your Dataprep Recipe.

Suppose you have a dataset of transactions, but some rows have missing transaction amounts. These incomplete records could lead to incorrect revenue calculations. By applying a filter, you can remove rows where the transaction amount is missing, ensuring that only valid transactions are included in the analysis.

To filter in Astera Dataprep, click on the Transform option in the toolbar and select Filter from the drop-down.

Once selected, the Recipe Configuration – Filter panel will open.

Filter Condition: Here, you can configure the Filter section by entering a condition to include or exclude records.

Click on the three dots to open Expression Builder. Here you can either write your own expression or choose from the built-in functions' library. For example, to remove rows where the Amount field is missing, you can enter: Amount IS NOT null

Once done, click Apply.

Now, in the grid, you can see that only records meeting the specified condition remain in the dataset.

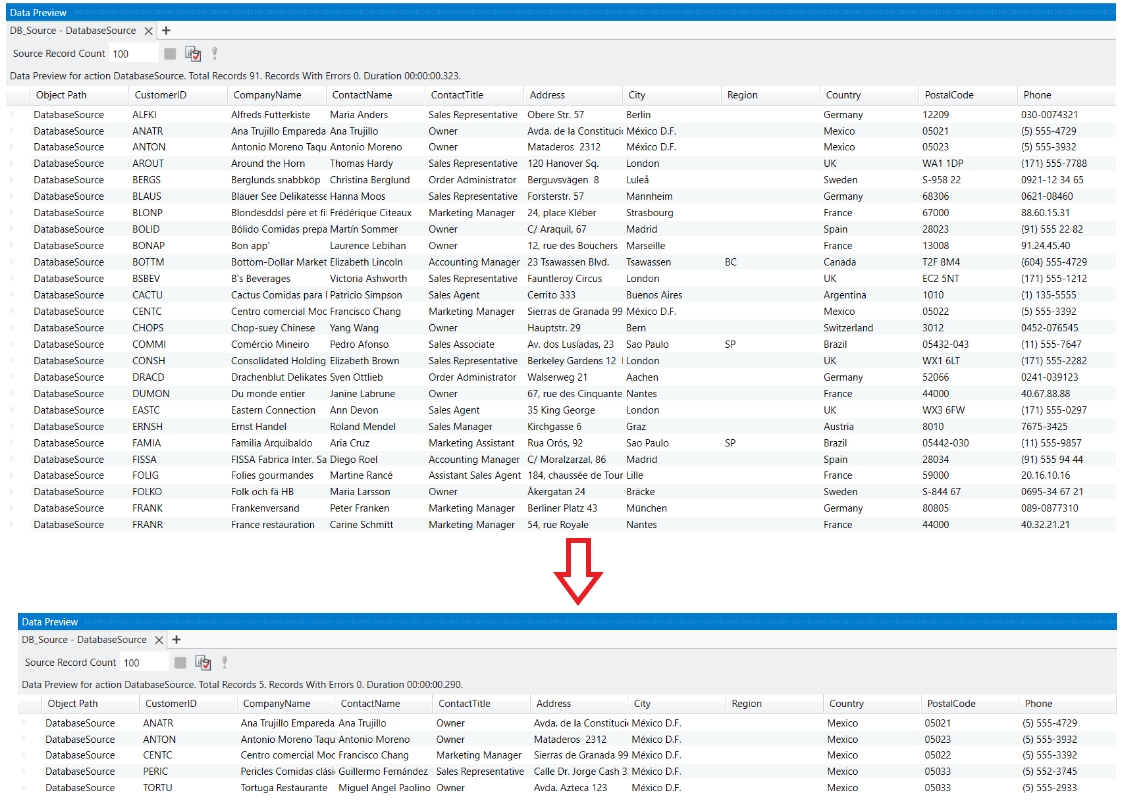

Astera Dataprep makes it easy to connect to and work with databases. You can connect, browse, and start preparing your data within a few clicks.

Start by creating a database connection as a shared action in your project. This allows you to reuse the connection throughout your project. To learn how to create a database connection, click here.

Ask in chat to read your desired table.

This approach is ideal if you prefer working through natural language and want to quickly load data from your connected databases without manual browsing.

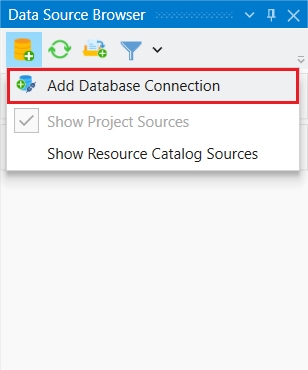

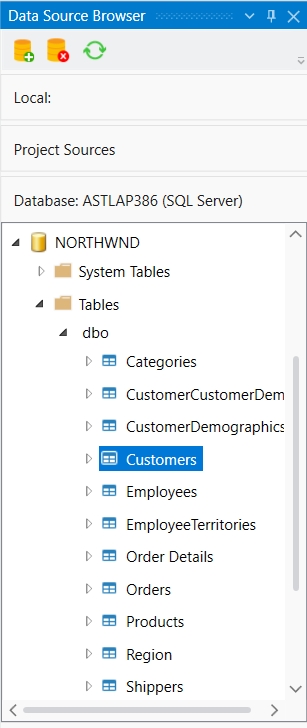

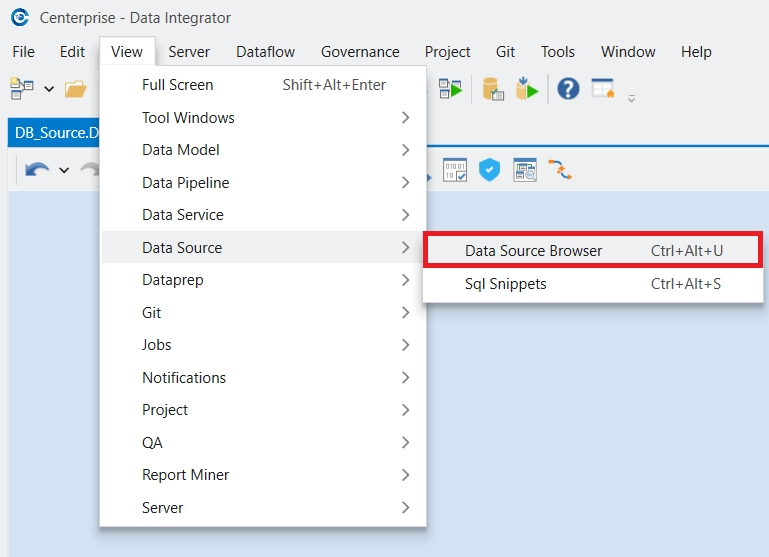

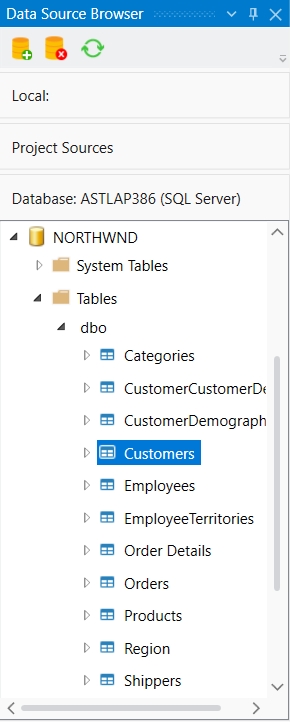

Open the Data Source Browser.

Click the Add Data Source dropdown and choose Add Database Connection.

Note: When working with Astera Cloud, local (on-premises) databases will not be accessible.

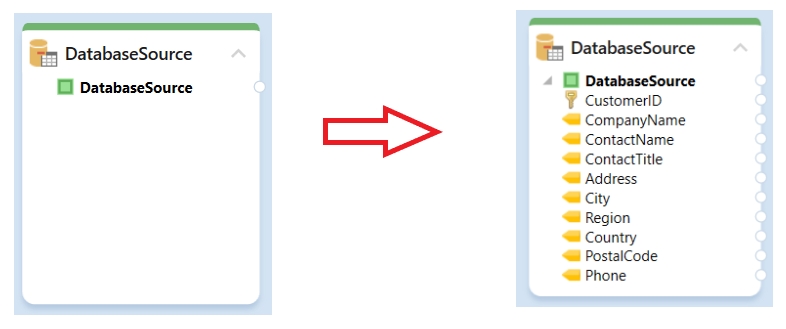

Once your connection is added, browse through the list of tables and simply drag and drop the required table into your dataflow. This creates a shared action automatically in your project ready to be used for reading.

The table is now ready for filtering, cleaning, or transforming, just like any other dataset.

In this document, you’ll learn how to use the Remove function in Astera Dataprep to clean unwanted characters from your data.

To begin, click on the Cleanse option in the toolbar and select Remove from the drop-down.

This will open the Recipe Configuration – Remove panel.

In this panel, you’ll configure the following options:

Apply to entire dataset: The changes will be applied to the entire dataset.

Apply to specific column(s): Allows you to apply changes to specific columns.

Under the Remove section, you can choose what to remove:

All whitespaces

Leading and trailing whitespaces

Tabs and line breaks

Duplicate whitespaces

Once you’re done, click Apply. For example, in our use case the Grid will show that all whitespaces and hyphens ("-") have been removed from the ShipPostalCode column.

Alternatively, you can right-click on a column in the Grid and go to Cleanse > Remove. The same configuration panel will appear with the column already selected. Make any changes you need and click Apply to clean the data.

In this document, you’ll learn how to use the Change Case function in Astera Dataprep to convert text data to lower, upper, or title case.

To begin, click on the Cleanse option in the toolbar and select Change Case from the drop-down.

This will open the Recipe Configuration – Change Case panel.

In this panel, you’ll configure the following options:

Column Selection Properties

Apply to entire dataset: Applies the case change to all columns in the dataset.

Apply to specific column(s) – Applies the change only to selected columns.

Case Type

Lower: Converts all text to lowercase.

Upper: Converts all text to uppercase.

Title: Capitalizes the first letter of each word.

Once you’re done, click Apply . In the Grid, you’ll see that the data in the StoreName column has been converted to uppercase.

Alternatively, you can right-click on a column header in the Grid and select Cleanse > Change Case. The same configuration panel will appear with the column already selected. Make any changes you need and click Apply to update your data.

In this document, you will explore how to split columns in Astera Dataprep.

To split columns in Astera Dataprep, click on the Layout option in the toolbar and select the Split Columns option from the drop-down.

This will open the Recipe Configuration – Split Columns panel.

In this panel, you can configure the following options:

Column Name: Choose the column you want to split.

Delimiter: Enter the character (like a space, comma, or dash) that separates the values.

New Column Names: Specify the names for the new columns that will hold the split values.

Once you're done, click Apply . You’ll see in the Grid that your selected column (for example, CustomerName) has been split into new columns (like FirstName and LastName) based on the delimiter you provided.

Alternatively, you can right-click on a column header directly in the Grid and select Split Columns from the menu. The configuration panel will open with the selected column pre-filled. Make any changes you need and click Apply to split the column.

In this document, you will explore how to move a column to a new position in Astera Dataprep.

To move a column in Astera Dataprep you can click on the Layout option in the toolbar and select Move Column from the drop-down.

Alternatively, you can also right-click on the column header that you want to move to a new position and select Move Column and the required position from the drop-down.

Once selected, the Recipe Configuration – Move Column panel will open. Here, you can specify the Name and Position of the column.

Position: Consists of various options which can be selected to move columns to different positions.

Start: Moves the column to the start of the dataset.

End: Moves the column to the end of the dataset.

Right Displacement:

Once the necessary configurations have been made, you can select Apply to move the column to your desired position.

You can also move a column within the Grid itself. To do this, you simply drag-and-drop the column to a new position. When dragging a column, a black line appears to indicate the drop position.

In this document, you’ll learn how to use the Compute All function in Astera Dataprep to apply an expression across all fields in your dataset.

To begin, click on the Cleanse option in the toolbar and select Compute All from the drop-down.

This will open the Recipe Configuration – Compute panel.

Click on the button to open the Expression Builder window.

In this example, we have mapped a regular expression to the “$FieldValue” parameter.

Click OK and the expression will appear in the Recipe Configuration – Compute panel.

Once you’re done, click Apply. In the Grid, you’ll see that all white spaces in every field have been replaced with single spaces.

In this document, you’ll learn how to use the Replace Null Values function in Astera Dataprep to handle missing data by replacing null strings or numerics with default values.

To begin, click on the Cleanse option in the toolbar and select Replace Null Values from the drop-down.

This will open the Recipe Configuration – Replace Null Values panel.

In this panel, you’ll configure the following options:

Column Section Properties:

Apply to entire dataset: The changes will be applied to the entire dataset.

Apply to specific column(s): Allows you to apply changes to selected columns only.

Replace Nulls:

Once you’re done, click Apply. In the Grid, you’ll see that all specified null values have been replaced accordingly.

Alternatively, you can right-click on a column in the Grid and go to Cleanse > Replace Null Values. The same configuration panel will appear with the column already selected. Make any changes you need and click Apply to update your data.

Astera Dataprep streamlines data cleansing, transformation, and preparation within the Astera platform. With an intuitive interface and data previews, it simplifies complex tasks like data ingestion, cleaning, transformation, and consolidation.

With the AI-powered chat interface, users can describe data preparation tasks in plain language, and the system automatically applies the right transformations and filters, reducing the learning curve and accelerating the process.

Astera Dataprep is essential for optimizing data processes, ensuring clean, transformed, and integrated data is ready for analysis.

To activate Astera on your machine, you need to enter the license key provided with the product. When the license key is entered, the client sends a request to the licensing server to grant the permission to connect. This action can only be performed when the client machine is connected to the internet.

However, Astera provides an alternative method to activate the license offline by providing an activation code to the users who request for it. Follow the steps given below for offline activation:

1. Go to the menu bar and click on Server > Configure > Step 4: Enter License Key

2. Click on Unlock using a key.

3. Type your Name, Organization and paste the Key provided to you. Then, click Unlock. Do the same if you are changing (instead of activating) your license offline.

The Dataprep Recipe panel is a workspace where you can visualize and manage all data preparation tasks applied to your datasets. This panel is at the core of the data preparation process, providing a clear view of each step taken.

This panel displays a flow of all operations performed on the dataset, with each task shown as a distinct step in the sequence. You can follow a step-by-step workflow, making it easier to understand the impact of each action. This approach ensures that each data preparation task is applied methodically and can be reviewed at any point.

To navigate to the Dataprep Recipe panel, go to View > Dataprep > Dataprep Recipe.

The panel is interactive, allowing you to click on any step to review or modify it. Clicking on the Expand All option allows you to get a more detailed view of a data preparation task.

This provides flexibility, enabling users to adjust their data preparation process as needed.

Maintaining high data quality is crucial for accurate analysis and decision-making. The Dataprep Profile Browser panel and active profiling provide real-time insights into data health, assisting in data cleaning and transformation while ensuring the data meets quality standards. Key features include real-time monitoring, evaluation of cleanliness, uniqueness, and completeness, helping you quickly identify and address issues to maintain data integrity and reliability.

This Dataprep Profile Browser panel, displayed as a side window, offers visual and tabular representations of data quality metrics, helping you assess data health, detect issues, and gain valuable insights.

Key Features:

Comprehensive Data View: The Profile Browser provides a holistic view of the dataset with graphs, charts, and field-level profile tables, making it easier for you to understand and analyze data quality.

Field-Level Insights: Detailed profile tables offer granular insights into each data column, helping you pinpoint specific issues and their impact.

The Dataprep Profile Browser panel toolbar provides options for different views of the data:

In this document, you will explore how to add a column in Astera Dataprep.

To add a column, click Layout in the toolbar and select Add Column.

Once done, the Recipe Configuration – Add Column panel will open.

In this document, you will explore how to route datasets in Astera Dataprep. A Route transformation routes the data to multiple datasets based on custom decision logic expressed as rules or expressions. This allows the creation of multiple sub-datasets from the main dataset with records being directed to different datasets based on specified conditions.

Open a Recipe in Astera Dataprep.

Navigate to the Toolbar and select Transform > Route.

In this document, you explore how to make changes to a column in Astera Dataprep.

To change a column, select the Layout option in the toolbar and select Change Column from the drop-down.

Alternatively, you can also right-click on the column you need to change and select the Change Column option from the drop-down.

Once selected, the Recipe Configuration – Change Column

In this document, you will explore how to remove columns in Astera Dataprep.

To begin, open your recipe in Astera Dataprep. Then, click on the Layout option in the toolbar and select Remove Columns from the drop-down.

This will open the Recipe Configuration – Remove Columns panel.

In this document, you’ll learn how to use the Unstack transformation in Astera Dataprep to convert values from a single column into multiple columns, making it easier to compare data side by side.

Suppose you have a dataset containing education-related statistics for multiple countries, with columns for Country, ISO_Code, Year, and LAYS. Each country has separate rows for different years. For example, 2017, 2018, and 2020.

To unstack data in Astera Dataprep, click on the Transform option in the toolbar and select Unstack from the drop-down.

Astera Dataprep features a preview-centric grid, an Excel-like, dynamic, and interactive grid that updates in real time. This interface, with its familiar rows and columns layout, allows for easy navigation and data manipulation, mimicking the traditional spreadsheet format. It displays transformed data immediately after each operation, providing you with an instant preview. This allows you to see the impact of their transformations right away, enabling quick verification and ensuring that changes are applied correctly.

You can directly apply transformations to columns by right clicking the column header and selecting the required transformation.

If multiple columns are selected when right clicking, different transformations are available for application. For example, Concatenate Columns.

You can rename columns by double clicking the column headers.

To change a column’s data type, you can select the data type icon next to the column name and select a different data type from the drop-down menu.

In this document, you’ll learn how to use the Join function in Astera Dataprep to combine a dataset from a database table in a shared connection with an existing dataset in your Dataprep Recipe.

In this use case, we have a Dataprep Recipe where a company’s Customers dataset has been cleansed. Now, they want to join it with their Orders dataset, which is stored in a database table accessible through a shared connection in the project.

In this document, you’ll learn how to use the Lookup transformation in Astera Dataprep to enrich a dataset by bringing in additional fields from another source.

A university maintains a student's dataset, but roll numbers are stored separately. To prepare data for reporting and transcripts, the university needs to enrich the student's dataset with roll numbers, ensuring each student is matched correctly.

This lookup source may exist as a file, a shared project source or in a database table.

In this document, you will explore how to rename a column in Astera Dataprep.

To rename a column in Astera Dataprep click on the Layout option in the toolbar and select the Rename Column option from the drop-down.

Alternatively, you can also right-click on the column header that you want to rename and select Rename Column from the drop-down.

Once selected, the

In this document, you’ll learn how to use the Join function in Astera Dataprep to combine two datasets within the same Dataprep Recipe.

For this use case, we have a Dataprep Recipe where a company’s Orders and OrderDetails dataset has been cleansed, they now want to join these datasets with each other.

In this document, you will explore how to sort columns in Astera Dataprep.

To sort columns in Astera Dataprep, click on the Layout option in the toolbar and select the Sort Columns option from the drop-down.

Once selected, the Recipe Configuration – Sort Columns panel will open.

spaCy

NLP

Java 17 is installed

Match Case: Enable this checkbox to make the search case sensitive.

Find: Enter the value you want to search for.

Replace: Enter the value you want to replace it with.

Digits

Punctuations

Specified characters – You can enter one or more characters (separated by commas) to be removed.

Left Displacement: Moves the column to the left by the specified number of Index times.

Index: Moves the column to the specified Index position.

Move Before: Moves a column before a specified column in the dataset. Once selected, you must specify the column through the Referenced Column drop-down.

Move After: Moves a column after a specified column in the dataset. Once selected, you must specify the column through the Referenced Column drop-down.

Null strings with blanks: Replaces all null strings with blank entries.

Null numerics with zeros: Replaces all null numeric values with 0.

AI-powered Chat: Interact with a smart assistant to perform data preparation tasks through natural language commands. Just type what you want, for example, "filter out records where contact title is "Sales Manager" and the AI agent will apply the required transformation instantly.

Point and Click Recipe Actions: Easily accomplish data preparation tasks through intuitive point and click operations. The Dataprep Recipe panel provides a visual representation of all the Dataprep tasks applied to a dataset.

Rich Set of Transformations: Perform a variety of transformations such as Join, Union, Lookup, Calculation, Aggregation, Filter, Sort, Distinct, and more.

Active Profiling and Profile Browser with Data Quality Rules: Real-time data health assists in data cleaning and transforming while validating data to provide a comprehensive view of its cleanliness, uniqueness, and completeness. The Profile Browser, displayed as a side window, offers a comprehensive view of the data through graphs, charts, and field-level profile tables, helping you assess data health, detect issues, and gain valuable insights.

Preview-Centric Grid and Grid View: An Excel-like, dynamic, and interactive grid updates in real time, displaying transformed data after each operation. It offers an instant preview and feedback on data quality, ensuring accuracy and integrity.

Data Source Browser: A centralized location that houses file sources, catalog sources, and project sources, providing a seamless way to import these sources into the Dataprep artifact.

Only flat (tabular) data structures are supported.

Hierarchical data formats such as nested JSON or XML with multiple levels are not supported.

2. Data Size Constraints

Large files may experience performance delays when previewing or applying multiple transformations. Extremely large datasets should be processed in smaller chunks.

3. Export Destinations

Direct exports are currently limited to CSV and Excel formats.

4. Dataprep AI Chat

The Dataprep Agent only works with metadata and cannot answer questions about the actual data values. You can paste a sample into the chat window so it can assist you.

The Agent cannot respond to queries outside the scope of Astera Dataprep.

4. Another pop-up window will give you an error about the server being unavailable because you cannot connect to the server offline. Click OK.

5. Click on Activate using a key button.

6. Now, copy the Key and the Machine Hash and email it to [email protected]. The Machine Hash is unique for every machine. Make sure you send the correct Key and Machine Hash as it is very important for generating the correct Activation Code.

7. You will receive an activation code from the support staff via e-mail. Paste this code into the Activation Code textbox and click on Activate.

8. You have successfully activated Astera on your machine offline using the activation code. Click OK.

9. A pop-up window will notify you that the client needs to restart for the new license to take effect. Click OK and restart the client.

You have successfully completed the offline activation of Astera.

You can move, edit, or remove tasks directly within the panel. This makes it simple to adjust the data preparation strategy as new insights are gained or requirements change.

The Up and Down option can be used to move recipe actions and change their order:

You can select any of the recipe actions and select the Preview option to view any changes in the dataset in real-time.

The edit and delete icons can be used to edit and/or remove tasks from a Dataprep Recipe. Recipe actions can also be edited by double clicking the cards.

By default, the field profiles are displayed through a bar chart. By selecting the To Line Chart icon, you can view the field profile through a line chart:

When in Bar Chart view, the Show Data Labels option can be used to have labels displayed on the chart:

The Reset option resets any changes made to the profile view. For example, in case the profile view is adjusted using the sliding bar below the graph.

The Expand View option opens a new window to give you a better view of the data profile.

Finally, selecting the Global View option displays the overall dataset profile.

Issue Detection: The Profile Browser highlights issues like missing values, duplicates, and outliers, allowing you to address problems proactively and maintain data integrity.

In this panel, you can specify the Name, Type, Header, Position, and Index.

Name: The name of the new column.

Type: The datatype of the new column to be added.

Header: Specifies the column name when writing to a file.

Position: Consists of various options to help specify where the new column should be added.

Index: Adds the column at the specified Index position.

Start: Adds a column to the start of the dataset.

End: Adds a column to the end of the dataset.

Move Before: Adds a column before a specified column in the dataset. Once selected, you must specify the column through the Reference drop-down.

Value: You can specify the expression that you want to be applied on the objects for the new column.

This can be done directly in the expression box, or you can open the Expression Builder by clicking next to the expression box.

Here, we have simply added 100 to our existing ‘Freight’ value.

Now, let’s select Apply to add this new column to our dataset.

Once done, you can see that the new column with your specified configurations has been added to the grid.

Configure the following options in the recipe action in the Recipe Panel:

Default Dataset Name: This dataset contains all the records not passing any rules defined in the Route transformation expressions. It is required to set a name for the Default Dataset here.

Dataset Name: The name of the dataset that will contain data for the records that pass/fulfill its specific expression's requirements.

Expression: Enter an expression (e.g., Region = ‘North’) or any condition you want to apply to your data. Each Route expression you add here will create its own Dataset based on whether the records satisfy the expression.

In the Route Properties, click on the expression text entry box to enter an expression. You can enter the expression here or click the button to open the expression builder. Click to approve and apply the expression.

In this example, we have created three datasets based on the value in the Region field: one for North_Region, one for South_Region, and a default dataset named Other_Region. Each dataset has an associated expression that routes records accordingly. Records where the Region is "North" are sent to North_Region, those with "South" go to South_Region, and any records that do not match these conditions are routed to the default dataset, Other_Region.

Click Apply to apply the changes or Cancel to discard them.

Once you click Apply, the routed datasets are created and by default, the first Route Dataset (here: “North”) is previewed on the grid.

The other datasets can then be read using the Read Dataset recipe action.

This concludes the document on using Route in Astera Dataprep.

Name: The name of the column that needs to be modified.

Operation: The modification that needs to be applied to the column. This drop-down consists of three options: DataType, Header, and Value.

DataType: Select this if the column’s data type needs to be modified. If selected, you can use the Type drop-down to choose a new data type for the selected column.

Header: Select this if a column’s header needs to be changed. If selected, you can specify a new header in the Header textbox.

Value: Select this if you need to modify the value within a column. If selected, you can use the expression box to enter an expression that you want to be applied.

Once you are done with the necessary configurations, you can select the Apply option for the changes to be applied to the selected column.

In this panel, use the Column Name(s) drop-down to select the columns you want to remove. You can select multiple columns from the list.

Once you're done, click Apply . The selected columns will be removed from the Grid.

Alternatively, you can right-click on a column header directly in the Grid and select Remove Columns from the menu. The configuration panel will open with the selected column pre-filled. You can further configure the columns to be removed and add any others if needed. Once done click Apply to remove the column(s).

Once selected, the Recipe Configuration – Unstack panel will open.

Group Count: Check this option if you manually define the number of input groups in your dataset.

Number of Input Groups: The number of times your repeating values appear for each unique Key.

Unstack Options:

Key: Columns that uniquely identify each record (e.g., Country, ISO_Code).

Input: Columns that hold the values you want to unstack (e.g., LAYS).

Example: Setting number of input groups to 3 (for years 2017, 2018, and 2020) with Country and ISO_Code as Key and LAYS as Input will unstack values into three new columns (LAYS1, LAYS2, LAYS3).

Pivot: Check this option when you want to unstack data based on a category or driver values (e.g., Year), creating separate columns for each value.

Unstack Options:

Key: Columns that uniquely identify each record (e.g., Country, ISO_Code).

Input: Columns that hold the values you want to unstack (e.g., LAYS).

Pivot: Column containing categories or drivers (e.g., Year).

Driver Values: You also need to provide the driver values (e.g., 2017, 2018, 2020). These can be entered manually or fetched using Auto Fill.

Once you are done with your configurations, click Apply.

Example: Setting Country and ISO_Code as Key, LAYS as Input, and Year as Pivot with driver values 2017, 2018, 2020 will generate separate columns named LAYS_2017, LAYS_2018, and LAYS_2020.

You can also change column order by dragging and dropping columns to a different place in the table. Small black markers help guide you when moving columns.

In case there are any errors or warnings in the dataset, they are displayed on the Grid for easier identification. For example, here we’ve created a validation rule for Null values in the Region column:

Once the validation rule has been applied successfully, it will show the errors in the preview grid like so:

To begin, click on the Join option in the toolbar and select Table from the drop-down.

This will open the Recipe Configuration – Join panel.

In this panel, you’ll configure the following options:

Connection Name: Select the shared connection you want to use. The drop-down lists all shared connections available in the project.

Table: From the drop-down, choose the database table you want to join with. In this example, we’ll select the Orders table.

Join Dataset: You can provide a custom name for the joined dataset or keep the default name. In this example, we’ll keep the default name.

Join Type: Choose the type of join you want to perform:

Inner: Keeps only the records that have matching values in both datasets.

Left Outer: Keeps all records from the current dataset and adds matching data from the table. Unmatched records from the table are filled with nulls.

Right Outer: Keeps all records from the table and adds matching data from the current dataset. Unmatched records from the current dataset are filled with nulls.

Full Outer: Keeps all records from both datasets. Unmatched values are filled with nulls.

In our example, we’ll use an Inner join to include only matching records.

Keys: Specify the key fields that the join will be based on. Astera will auto-detect matching fields, but you can modify them as needed.

Left Field: Field from the current dataset.

Right Field: Field from the table in the shared connection.

In this case, we’ll keep the default key fields selected.

Once you’re done, click Apply. The shared connection table will now be joined, and the result will appear in the grid.

This will open the Recipe Configuration – Lookup panel. In this panel you can configure the source-specific settings (see tabs below).

Browse Path: Use this to manually browse and select your source file.

Path from Variable: Use this when your file path is dynamic and parameterized. To learn more about using variables click here.

For this use case, we’ll use the Browse Path option.

Lookup Source: Choose the source you want to use for your Lookup. All available sources will be visible in the drop-down.

Connection Name: Select the shared connection you want to use. The drop-down lists all shared connections available in the project.

Table: From the drop-down, choose the database table you want to lookup from with. In this example, we’ll select the RollNo table.

Provide a name for the lookup dataset (or keep the default name).

Keys: Select the key fields to define how records will be matched. Astera will auto-detect matching fields, but you can modify them as needed.

Current Dataset Column: Field from the current dataset.

Source Column: Field from the lookup dataset.

Return Columns: Choose which columns to return from the lookup source.

Once you’re done, click Apply. The result will appear in the grid with the new column(s) added.

Once you are done with your configurations, you can select Apply for the column to be renamed.

To rename a column using the Grid itself, you can also simply double-click on the column header that you want to rename and provide a new name directly within the Grid.

This will open the Recipe Configuration – Join panel.

In this panel, you’ll configure the following options:

Dataset: From the drop-down, choose the dataset you want to join with. For example, if you're currently working with the Orders dataset, you can select OrderDetails as the joining dataset.

Join Dataset: You can enter a custom name for the joined dataset or keep the default name.

Join Type: Choose the type of join you want to perform:

Inner: Keeps only the records that have matching values in both datasets.

Left Outer: Keeps all records from the current dataset and adds matching data from the joined dataset. Unmatched records from the joined dataset are filled with nulls.

Right Outer: Keeps all records from the joined dataset and adds matching data from the current dataset. Unmatched records from the current dataset are filled with nulls.

Full Outer: Keeps all records from both datasets. Unmatched values are filled with nulls.

In our example, we’ll use an Inner join to include only matching records.

Keys: Specify the key fields that the join will be based on. Astera will auto-detect matching fields, but you can modify them as needed.

Left Field: Field from the current dataset.

Right Field: Field from the joining dataset.

Once you’re done, click Apply. The datasets will now be joined, and the result will appear in the Grid.

In this panel:

Optional checkboxes allow you to customize the sort behavior:

Treat Null as the Lowest Value: Places null values at the beginning of the sort.

Case Sensitive: Enables case-sensitive sorting.

Return distinct values only: Returns only unique rows in the sorted output.

Use the Field drop-down to select the column you want to sort.

The Sort Order options include:

Ascending: Sorts the column from A to Z or smallest to largest.

Descending: Sorts the column from Z to A or largest to smallest.

Click Apply to sort the dataset. The Grid will now reflect the specified sort order. For example, sorting the StoreName column in ascending order.

Alternatively, you can sort a column directly in the Grid by right-clicking on the column header and selecting Sort Columns from the menu. This opens the Recipe Configuration panel with the selected column pre-filled. Adjust the settings if needed and click Apply to confirm.

You can ask the Dataprep agent to create a new project for you. Provide the agent with the folder path where you want to create your project.

Alternatively, go to Project > New > Integration Project, enter a name, and select a location to save it.

Within the project, create a Dataprep document.

To create a data prep document you can simply ask the Dataprep agent, or right click the Project tab and selecting Add New Item.

On the Add New Item window select Dataprep and click the Add button.

Open the Data Source Browser.

Navigate to Cloud Storage and upload the files you want to work with.

Once the files have been uploaded, they will be visible in you project folder, ready for you to use in your Dataprep document.

Ask the Dataprep agent to load the file into your Dataprep document, or

Drag and drop the file from Cloud Storage onto the Dataprep canvas. To learn more click here.

Once the file is loaded, it will appear in the Preview Grid, where you can begin cleaning, transforming, and organizing your data.

Dataprep

Dataflows

Workflows

Subflows

Data Model

Report Model

API Flow

Project Management & Scheduling

Data Governance

Functions

Use Cases

Connectors

Miscellaneous

FAQs

Upcoming...

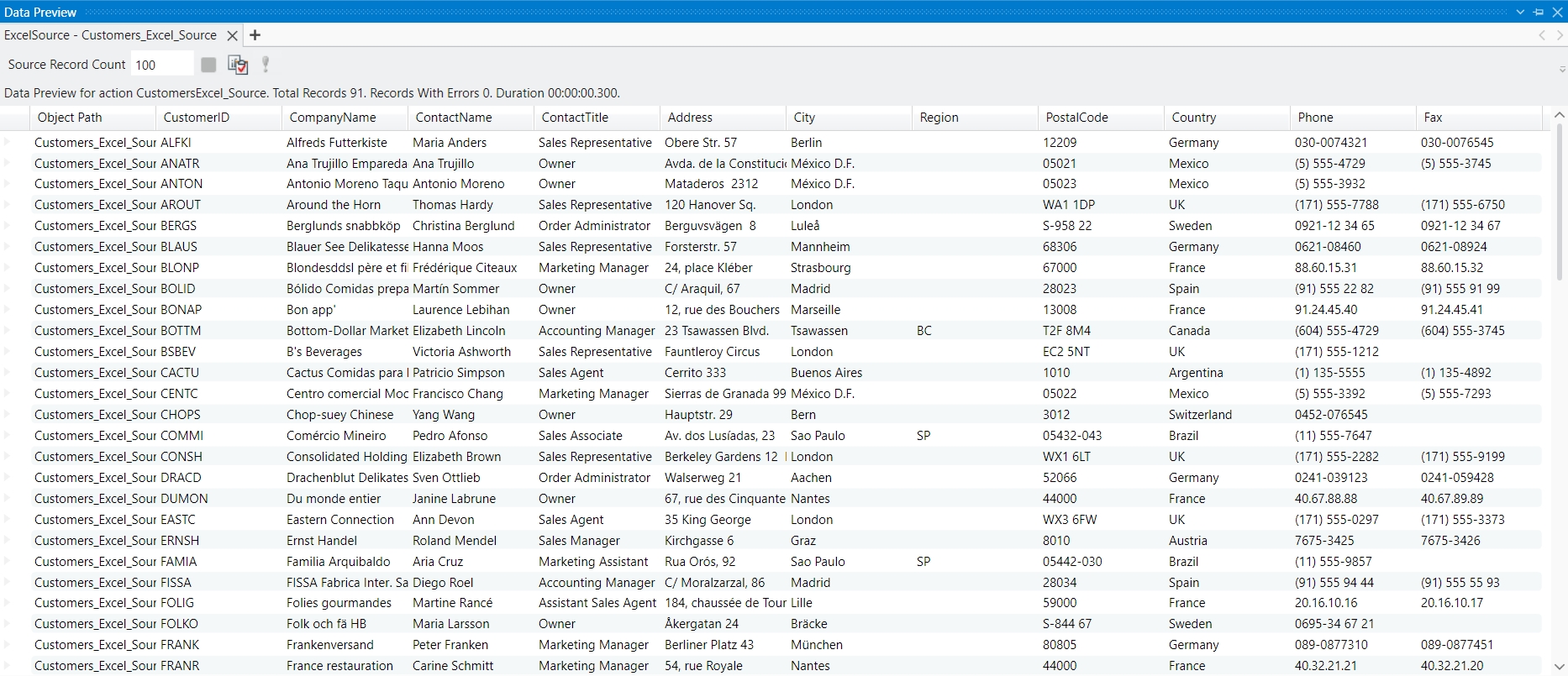

Astera Dataprep supports two ways to add sources:

By interacting with the AI-powered chat interface

Manually through Data Source Browser

You can simply ask the Dataprep chat interface to load your data by:

Providing it the name of the source:

Providing it the file path of your source:

The system will automatically load it as a dataset, making it ready for use in your data preparation workflow.

Open Astera Dataprep.

Navigate to the Toolbar and select Read > Project Source.

Once clicked, the recipe action is added to the Recipe Panel. Here, we have to configure the options to read the Project Source.

The options to be configured are:

Filter Source: This dropdown filters the type of shared sources you will be able to view and choose in the Shared Source options. Options are:

All: Shows all shared sources.

Excel Source: Shows only Excel sources.

Delimited Source

Shared Source: This dropdown shows the shared sources in the project (after filtering has been applied) that you can read.

Dataset Name: This is the name given to the dataset. You can configure this to be able to use the dataset separately elsewhere. The dataset name is auto filled and defaults to the name of the selected shared source. However, this name can be changed as needed.

Click Apply to apply the changes or Cancel to discard them.

Once you click Apply, the dataset will now be visible in Astera Dataprep, and further data processing can be applied to it.

Open the Data Source Browser and navigate to Project Sources and expand the accordion. Here you will see all the relevant supported shared sources that you can read from.

Drag and drop the desired source onto the Dataprep canvas. A 2x2 matrix will appear. Drop the source onto the Read option within the matrix.

Click Apply to apply the changes or Cancel to discard them.

Once you click Apply, the source will be loaded into the Dataprep Grid, and you can begin working with it.

This concludes the document on reading a Project Source in Astera Dataprep.

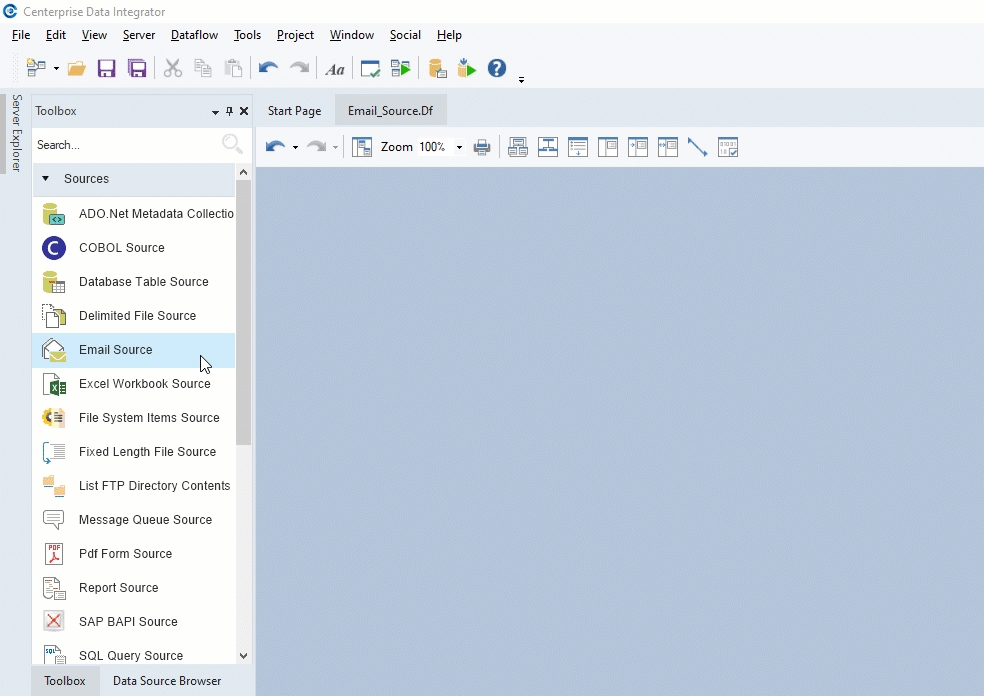

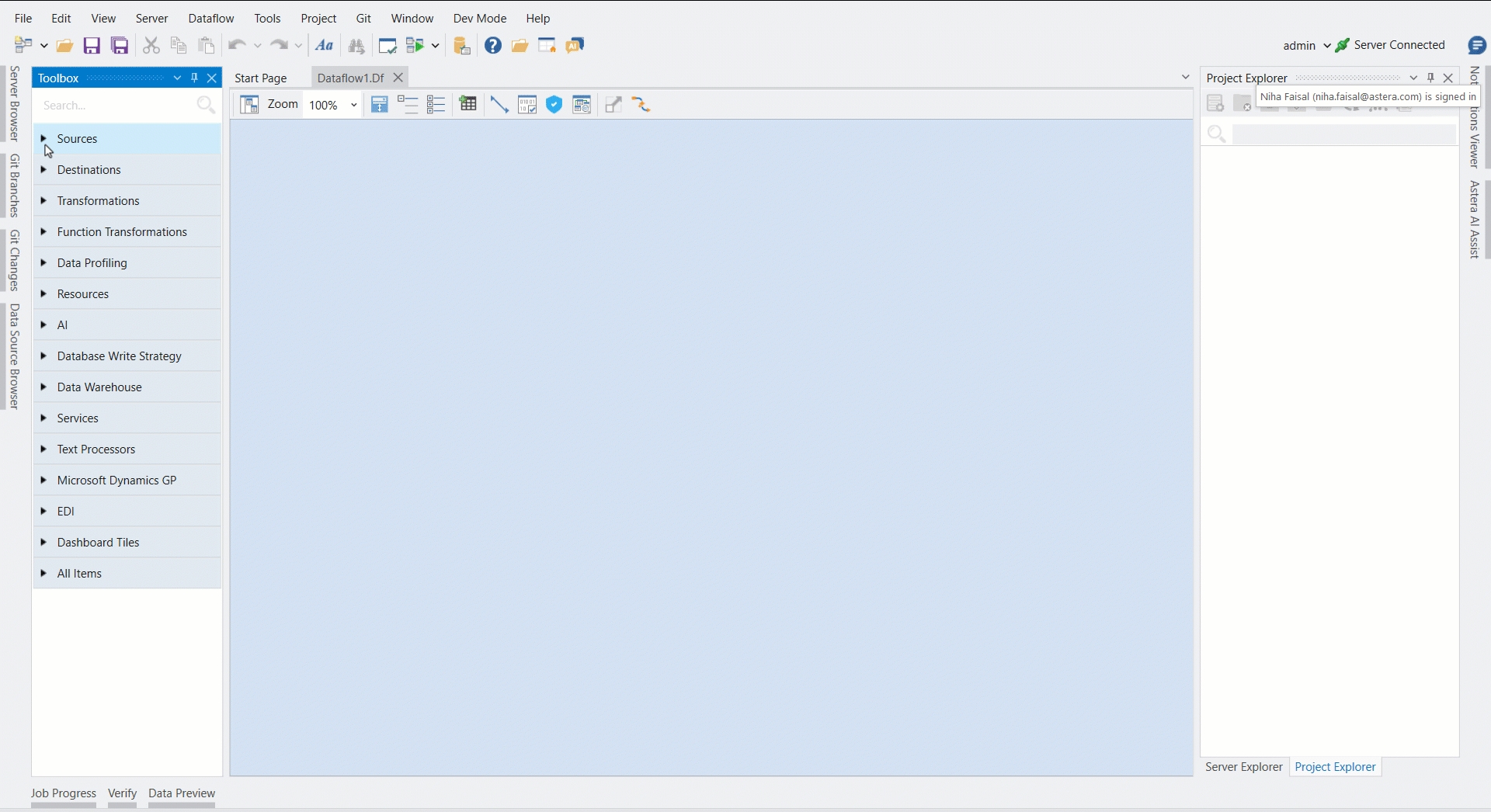

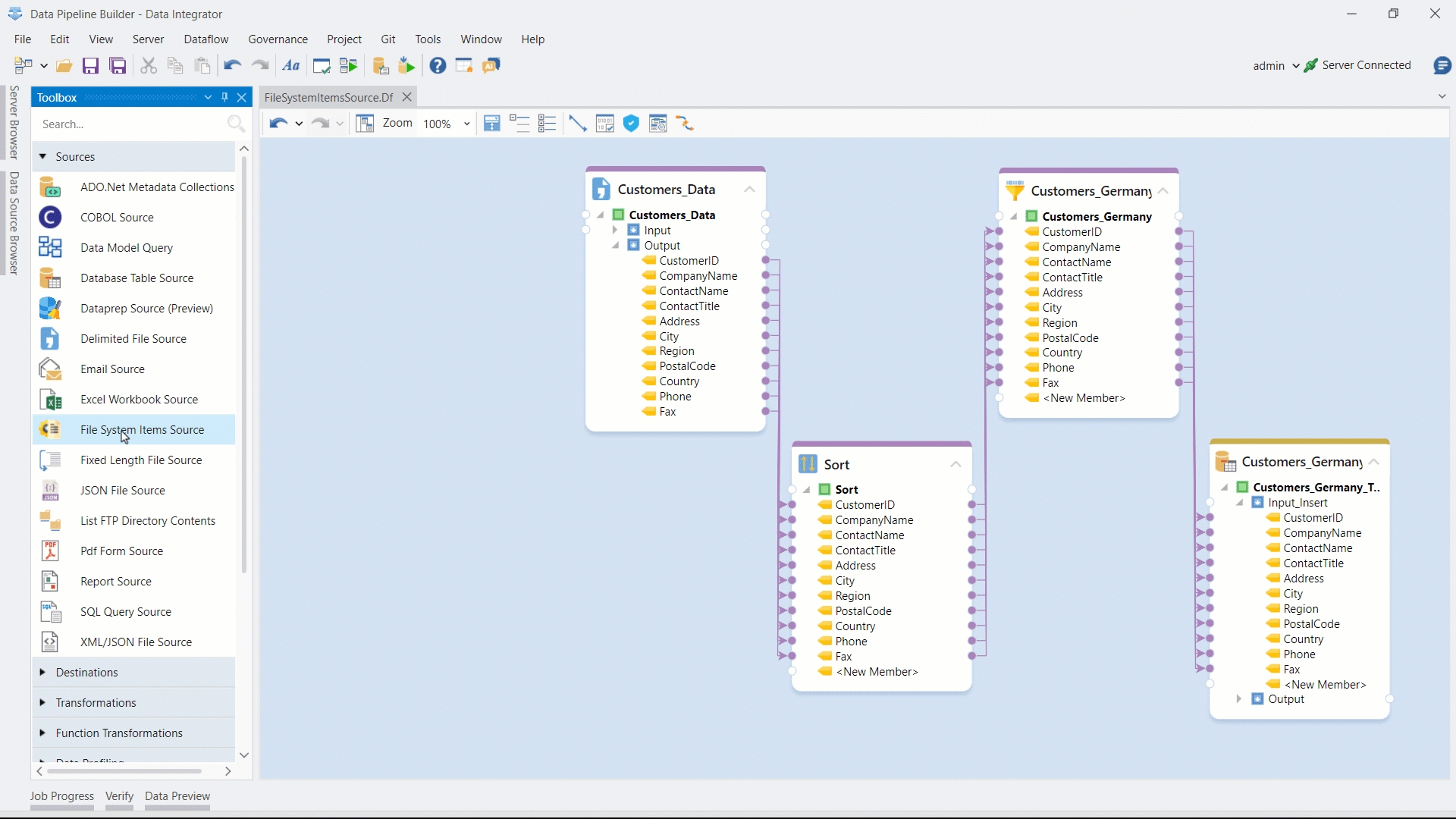

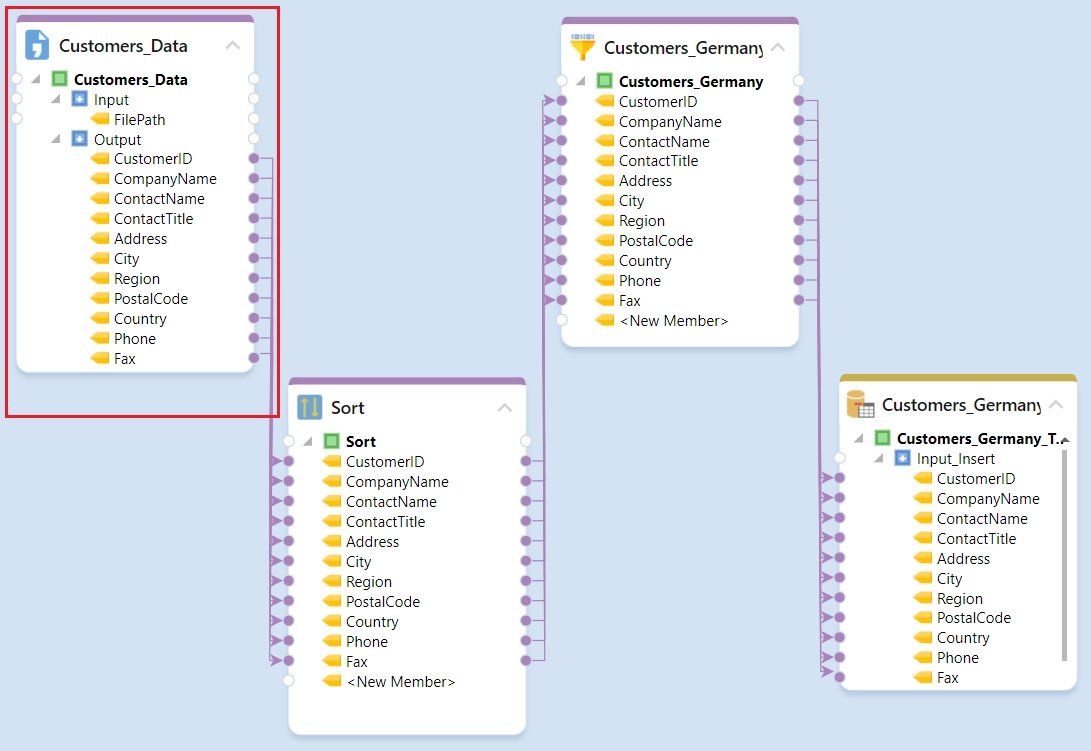

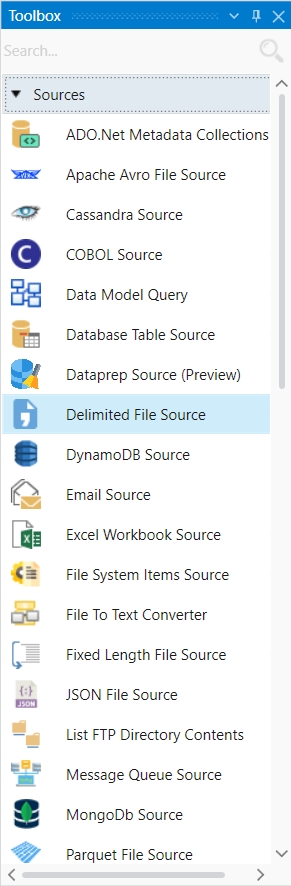

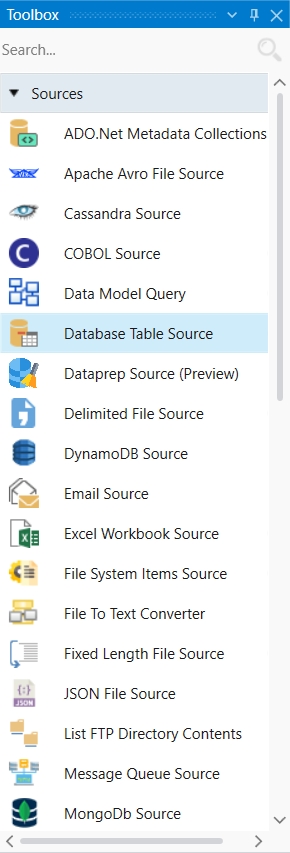

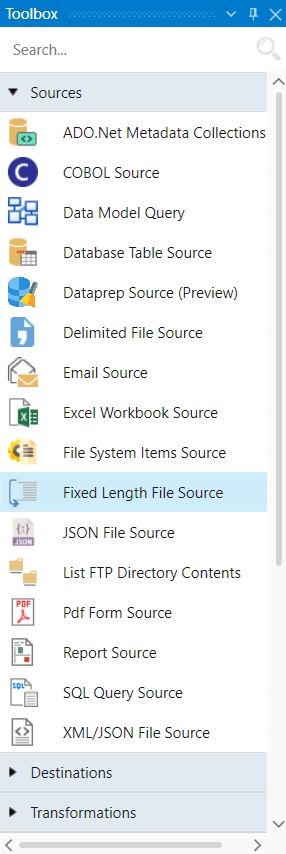

The ETL and ELT functionality of Astera Data Stack is represented by Dataflows. When you open a new Dataflow, you’re provided with an empty canvas knows as the dataflow designer. This is accompanied with a Toolbox that contains an extensive variety of objects, including Sources, Destinations, Transformations, and more.

Using the Toolbox objects and the user-friendly drag-and-drop interface, you can design ETL pipelines from scratch on the Dataflow designer.

The Dataflow Toolbar also consists of various options.

These include:

Undo/Redo: The Dataflow designer supports unlimited Undo and Redo capability. You can quickly Undo/Redo the last action done, or Undo/Redo several actions at once.

Auto Layout Diagram: The Auto Layout feature allows you to arrange objects on the designer, improving its visual representation.

Zoom (%): The Zoom feature helps you adjust the display size of the designer. Additionally, you can select a custom zoom percentage by clicking on the Zoom % input box and typing in your desired value.

In the next sections, we will go over the object-wise documentation for the various Sources, Destination, Transformations, etc., in the Dataflow Toolbox.

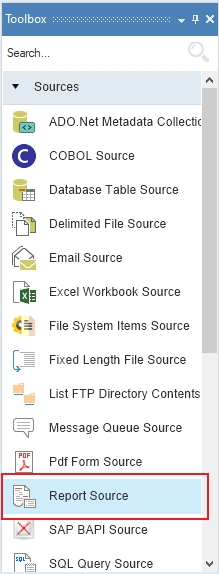

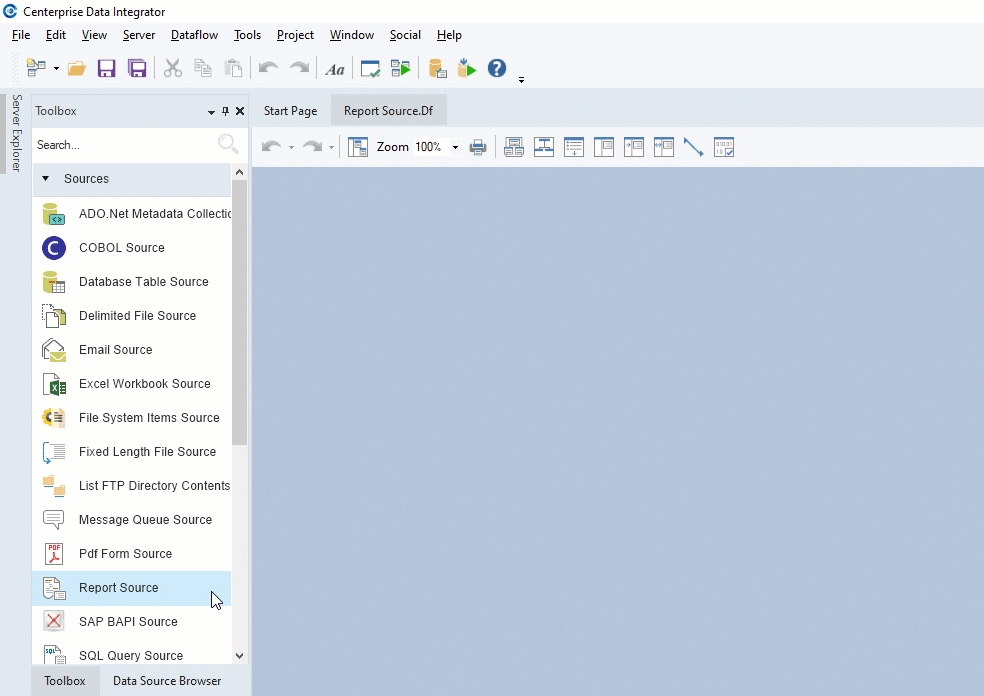

Report Model extracts data from an unstructured file into a structured file format using an extraction logic. It can be used through the Report Source object inside dataflows in order to leverage the advanced transformation features in Astera Data Stack.

In this section, we will cover how to get the Report Source object onto the dataflow designer from the Toolbox.