Connecting to Snowflake Database

Snowflake is an enterprise-ready data warehouse designed for the cloud. It uses a central data repository that makes data management easier, secure, and more accessible. Astera provides native connectivity to Snowflake databases through its database objects.

The connectivity can also be established through the Database Table Source, Database Table Destination, SQL Query Source, Database Lookup, SQL Query Lookup, and Database Write Strategies in Astera.

In this article, we will cover:

A use case where Snowflake is being used as a database in Astera.

Connecting to Snowflake databases in Astera.

Different data loading and reading options for Snowflake databases in Astera.

Use Case

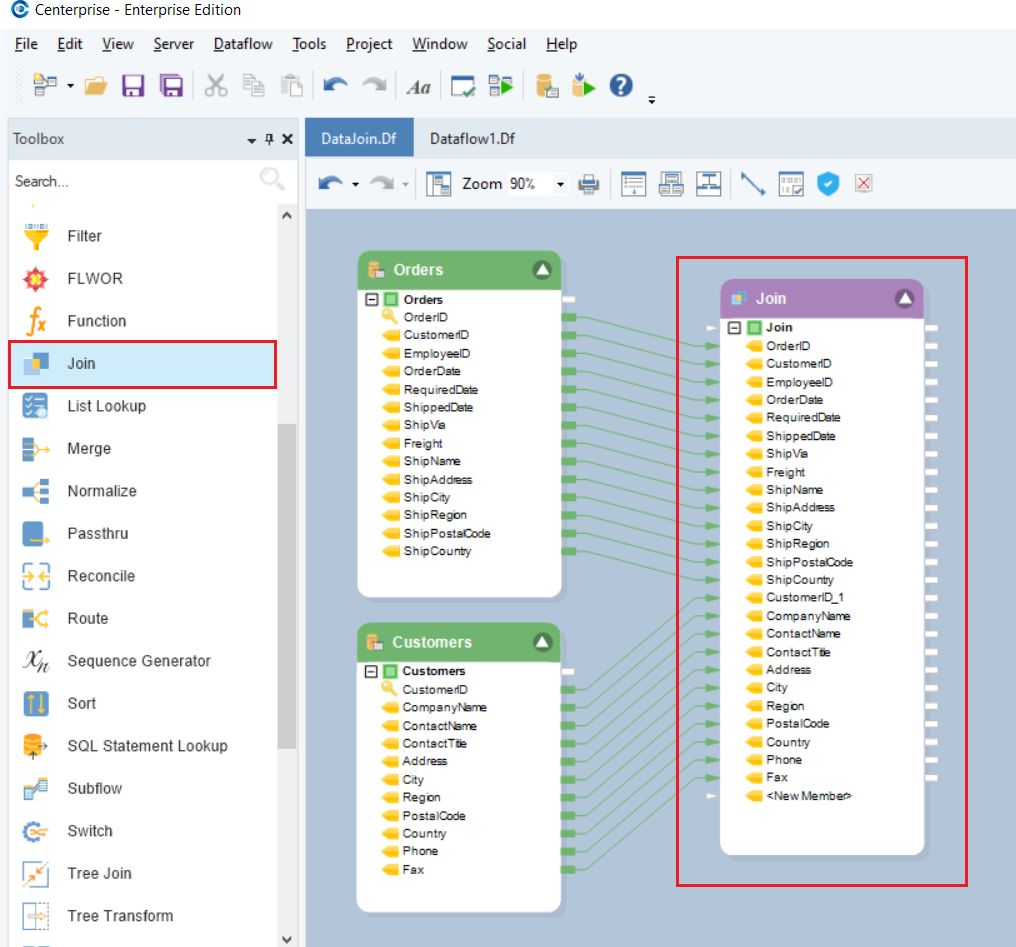

We have some sample Orders and Customer data stored in a Snowflake database. The data contains multiple fields containing orders and customer information. We want to join both the tables to have a consolidated view of this information.

We will be using the Database Table Source object to source data from the Snowflake database.

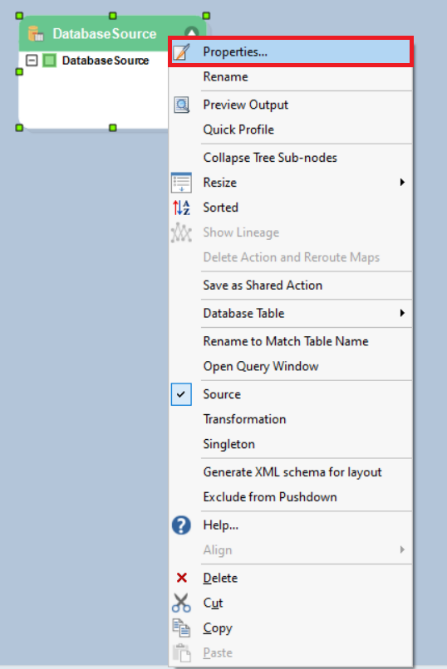

To configure the Database Table Source object, right-click on its header and select Properties from the context menu.

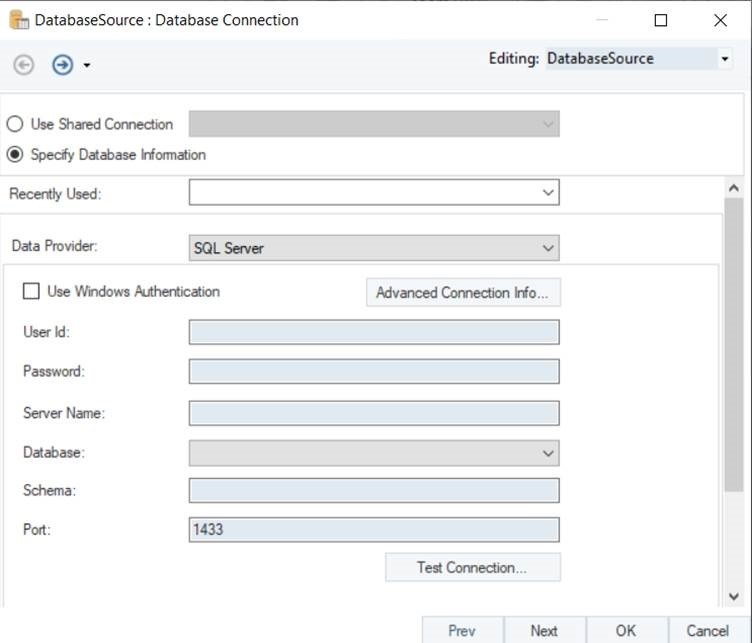

This will open a new window where you can configure a connection with the Snowflake database.

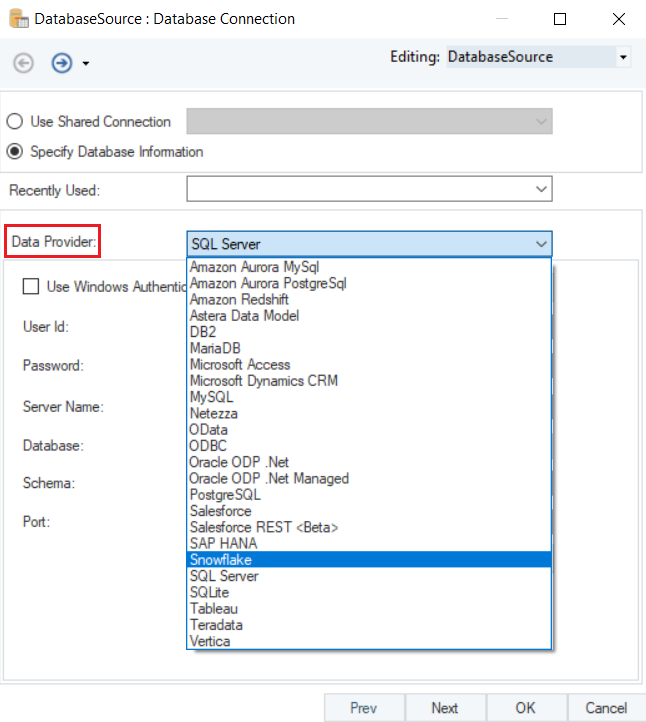

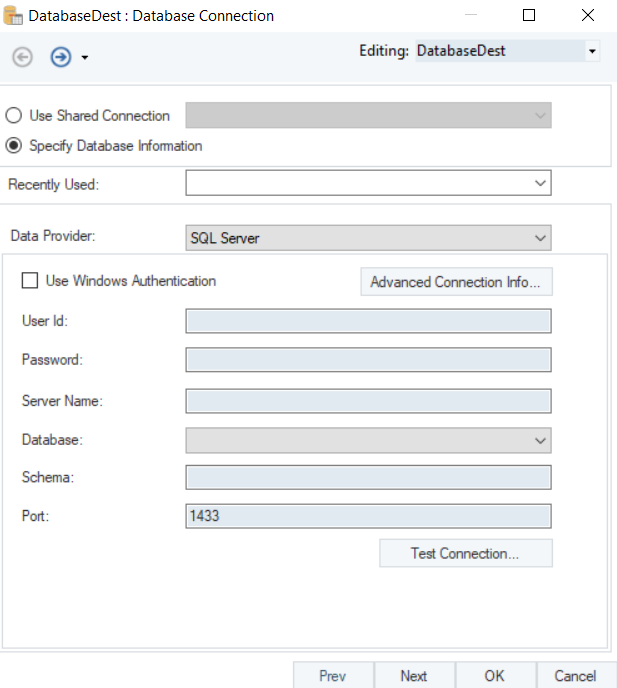

The first step is to select the Data Provider. Select Snowflake as your data provider from the drop-down list.

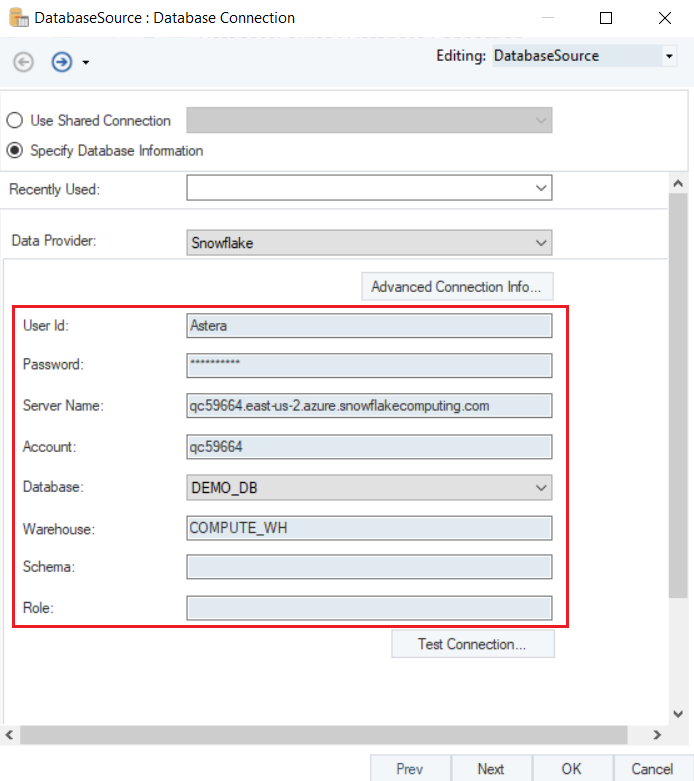

Enter the required credentials for your Snowflake account.

User ID

Password

Server Name

Account

Database

Warehouse

Schema

Role

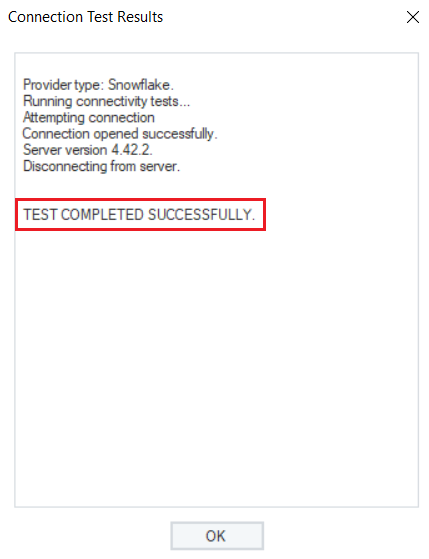

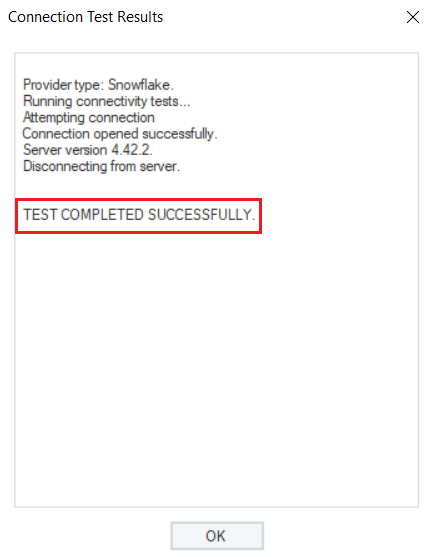

Test Connection to make sure that your database connection has been established and click Next to proceed to the next screen.

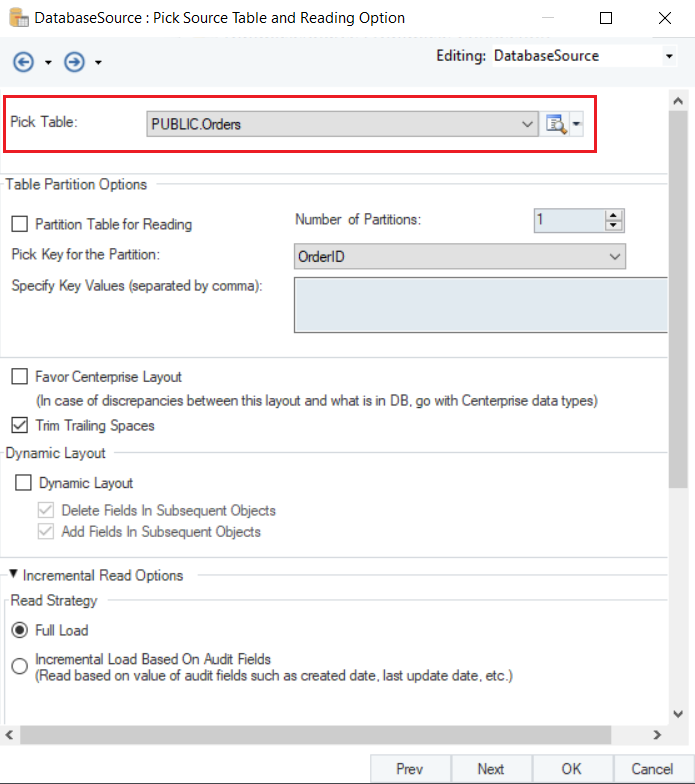

Here, you can Pick Source Table and modify Reading Options for your Snowflake database.

Note: In this case, we will select the Orders table.

To learn more about data reading options in a Database Table Source, click here.

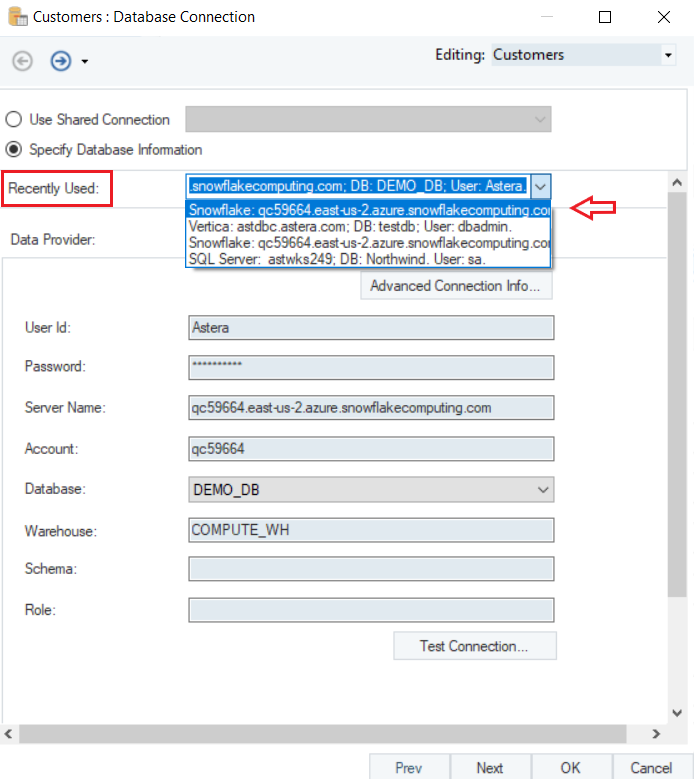

Since we want to join two tables, we will add the Customers table onto the dataflow using the same steps.

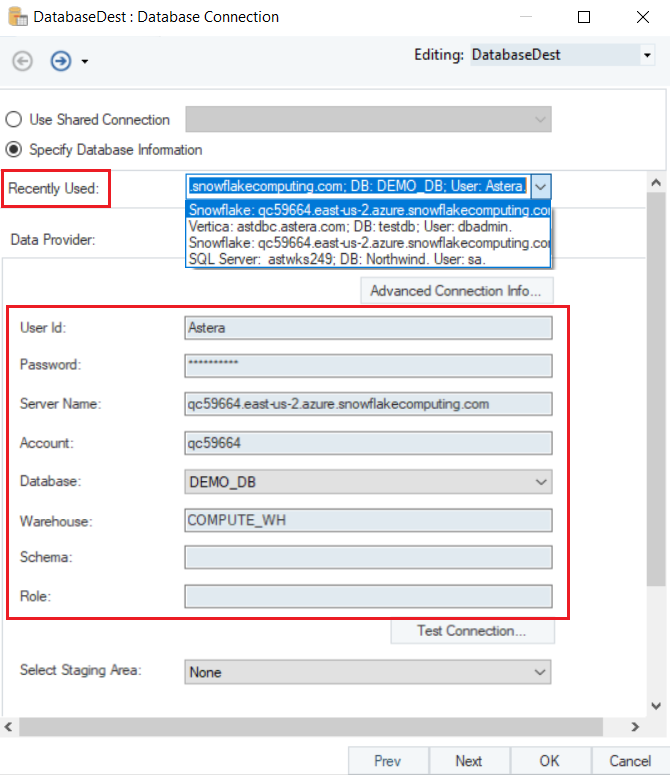

You can use the Recently Used option on the Database Connection screen to connect to the Snowflake database using the previously used credentials.

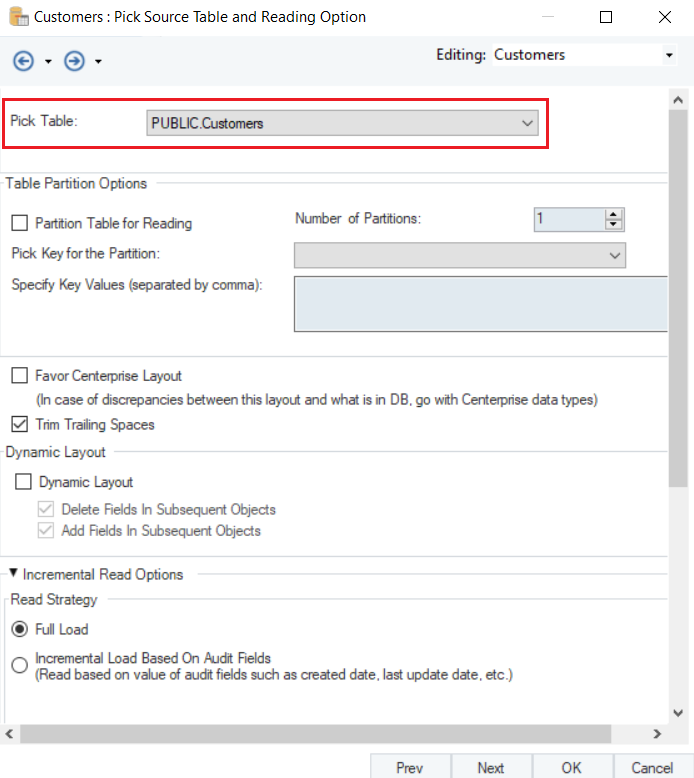

On the Pick Table screen, we will pick the Customers table from the database.

Now, we will use the Join transformation object and provide the key fields for both the tables in the dataflow.

To know more about how the Join transformation works in Astera, click here.

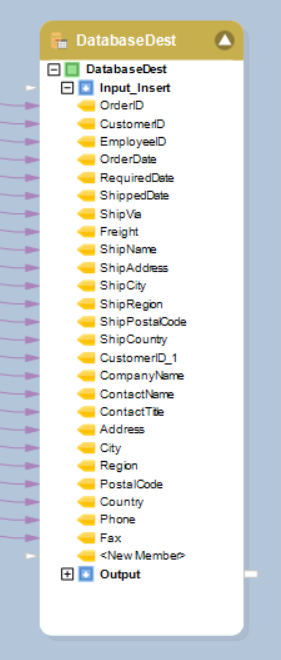

Next, we will write this data to our Snowflake database using the Database Table Destination in Astera.

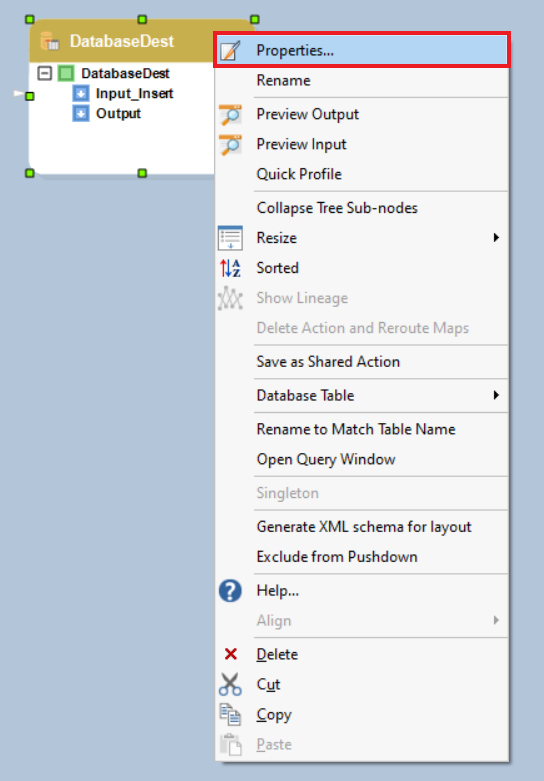

To configure the Database Table Destination object, right-click on its header and select Properties from the context menu.

This will open a new window on your screen.

Here you can configure the properties for the Database Table Destination object.

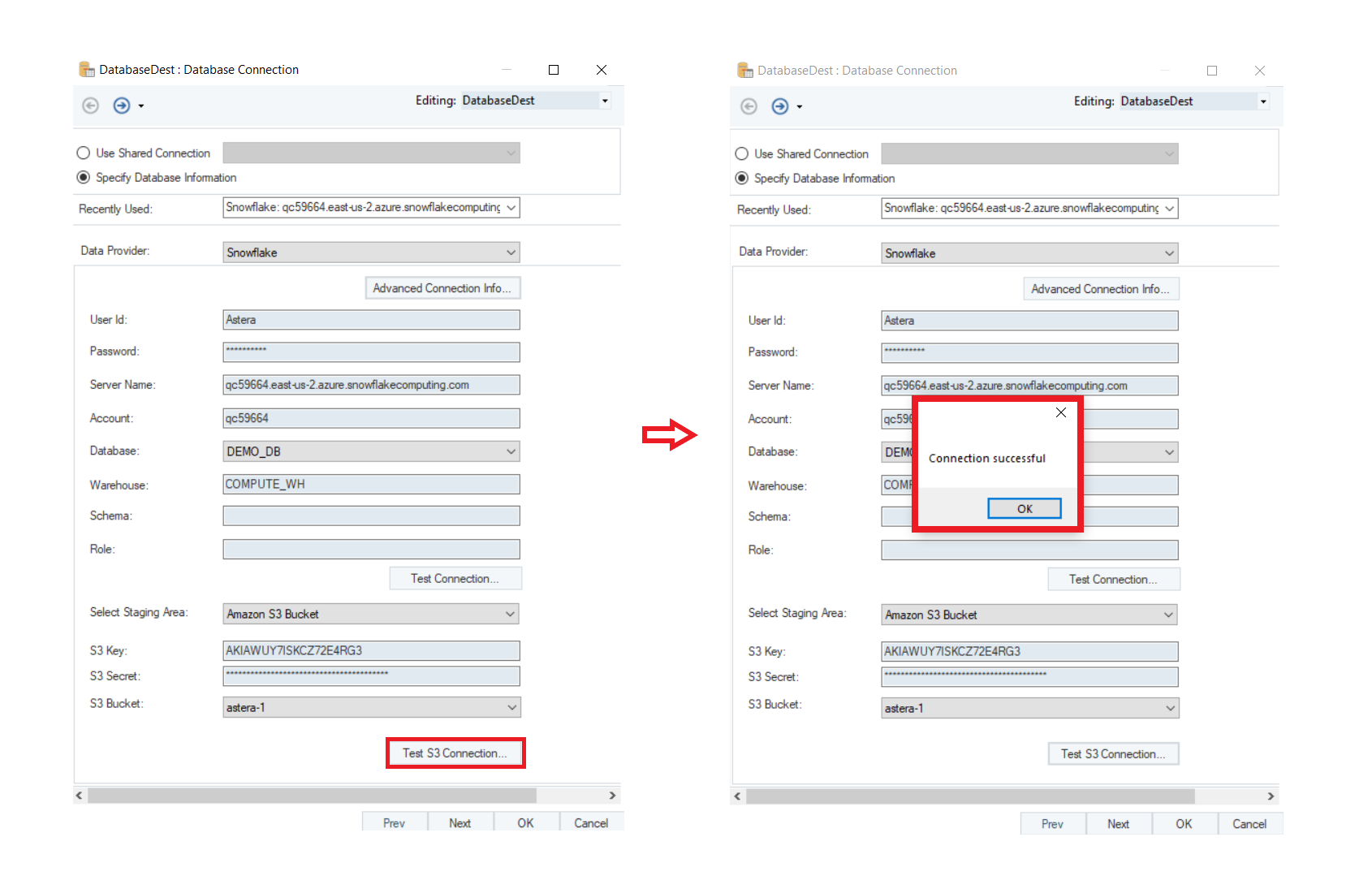

Since we have previously used Snowflake database to extract our source data, we can use the Recently Used credentials to connect to it again.

All the credentials have been filled in automatically.

Test Connection to make sure that your database connection is successful.

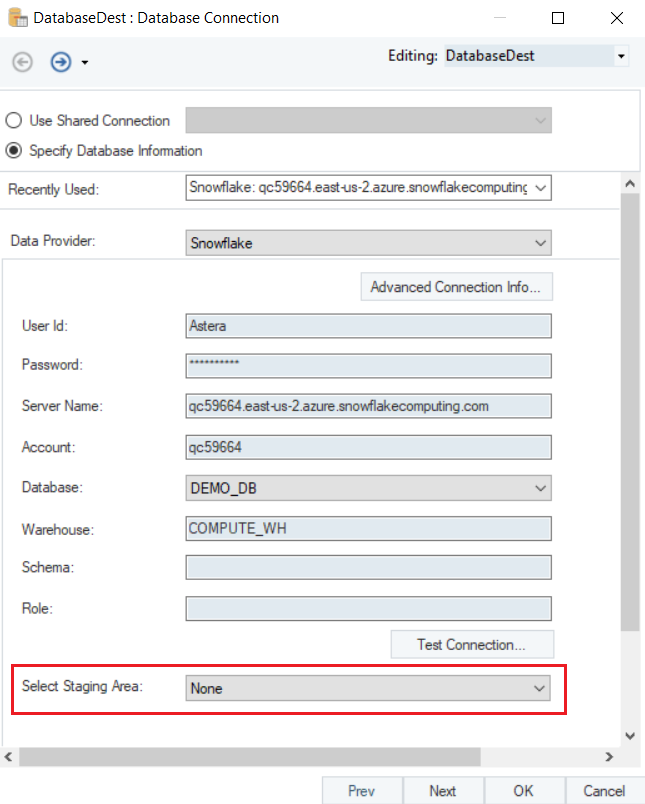

There is an option on the database connection screen for Snowflake where you can select the Staging Area.

A staging Area is a cloud storage location outside the Snowflake environment that allows greater flexibility in loading data to Snowflake. A stage specifies an area where temporary batches of data files are stored (i.e. “staged”) so that these files can be loaded into tables.

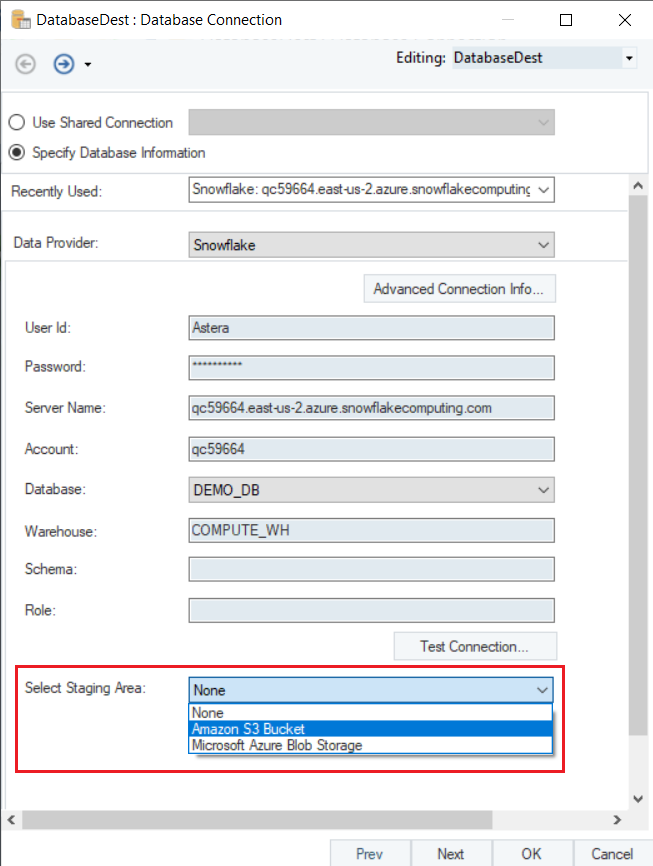

Astera provides connectivity to both staging areas for bulk insertion:

Amazon S3 storage

Microsoft Azure storage

Select the Staging Area from the drop-down list.

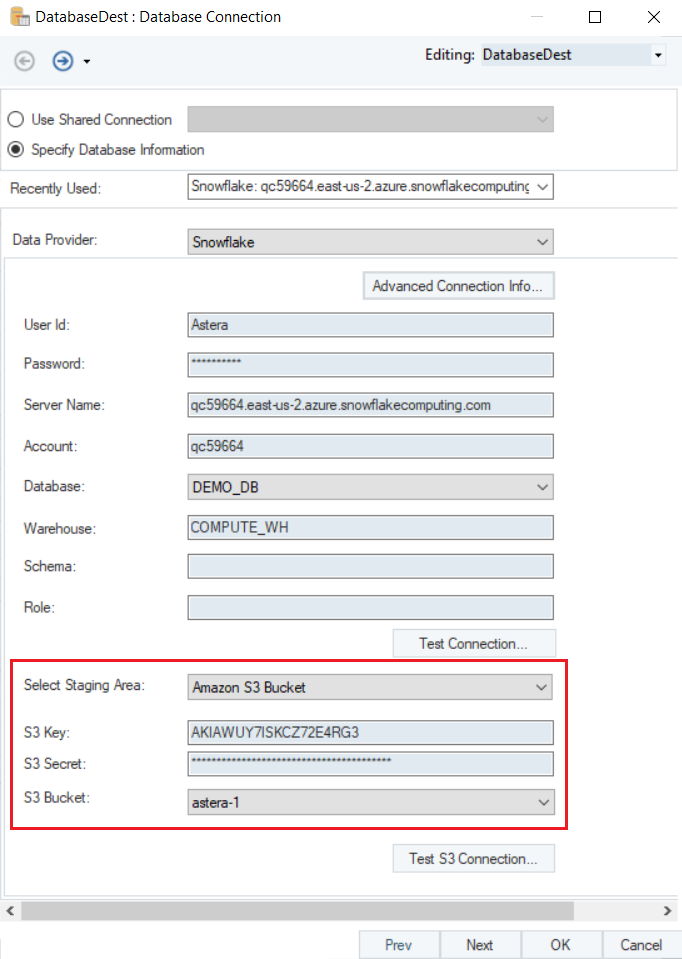

Note: In this case we will use the Amazon S3 Bucket for staging.

Once the staging area is selected, you will be required fill in the following fields:

S3 Key

S3 Secret

S3 Bucket

Now, Test S3 Connection to see if the Amazon S3 Bucket has been connected.

Once the connection is successful, click OK. Now, click Next to move to the next screen.

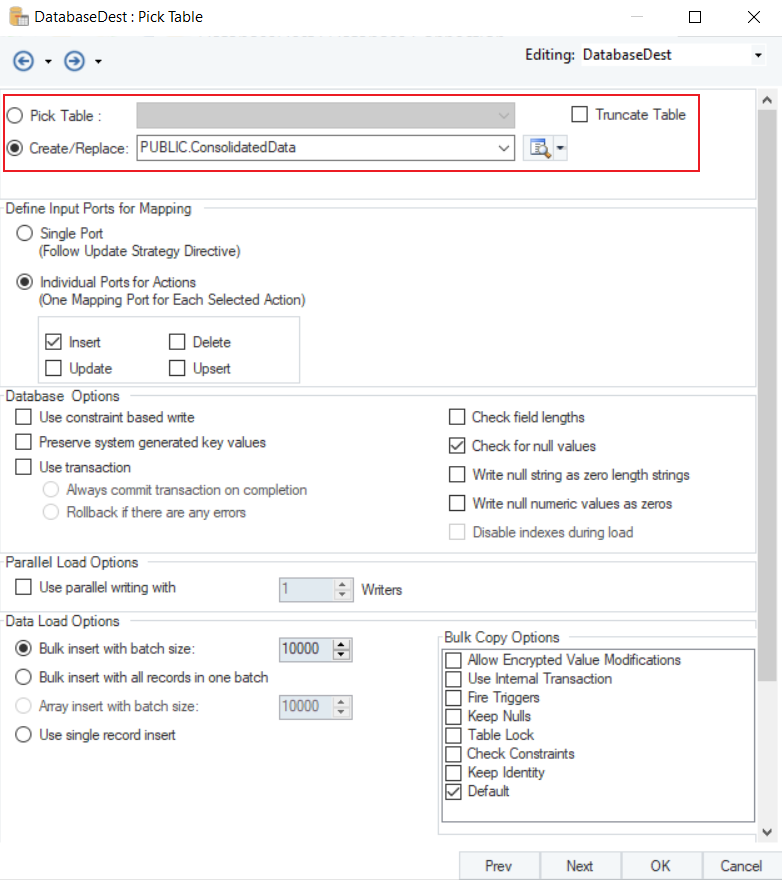

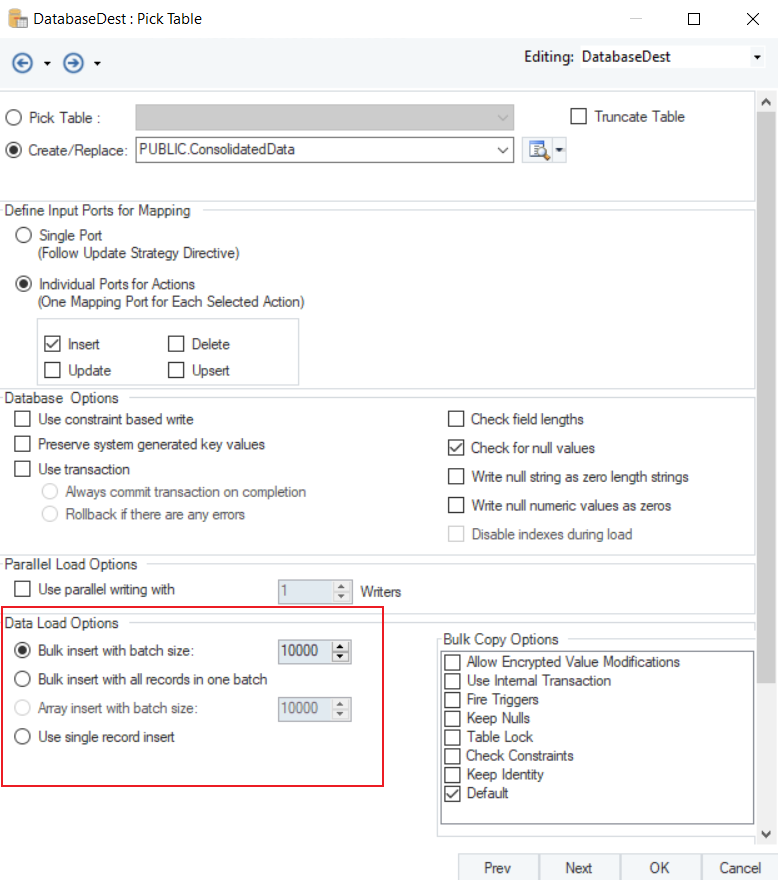

You can Pick Table or Create Table and modify Writing Options for the Snowflake Database on the Properties screen.

Note: In this case, we will create a new table and name it as PUBLIC.ConsolidatedData, where Public is the schema for the database.

Since we selected the staging area, we will set the Data Load Options as Bulk Insert.

Once you have set the data reading options, click OK.

We have successfully configured Snowflake as our database provider for the Database Table Destination object. The data will now be written to the Snowflake database once the dataflow is run.

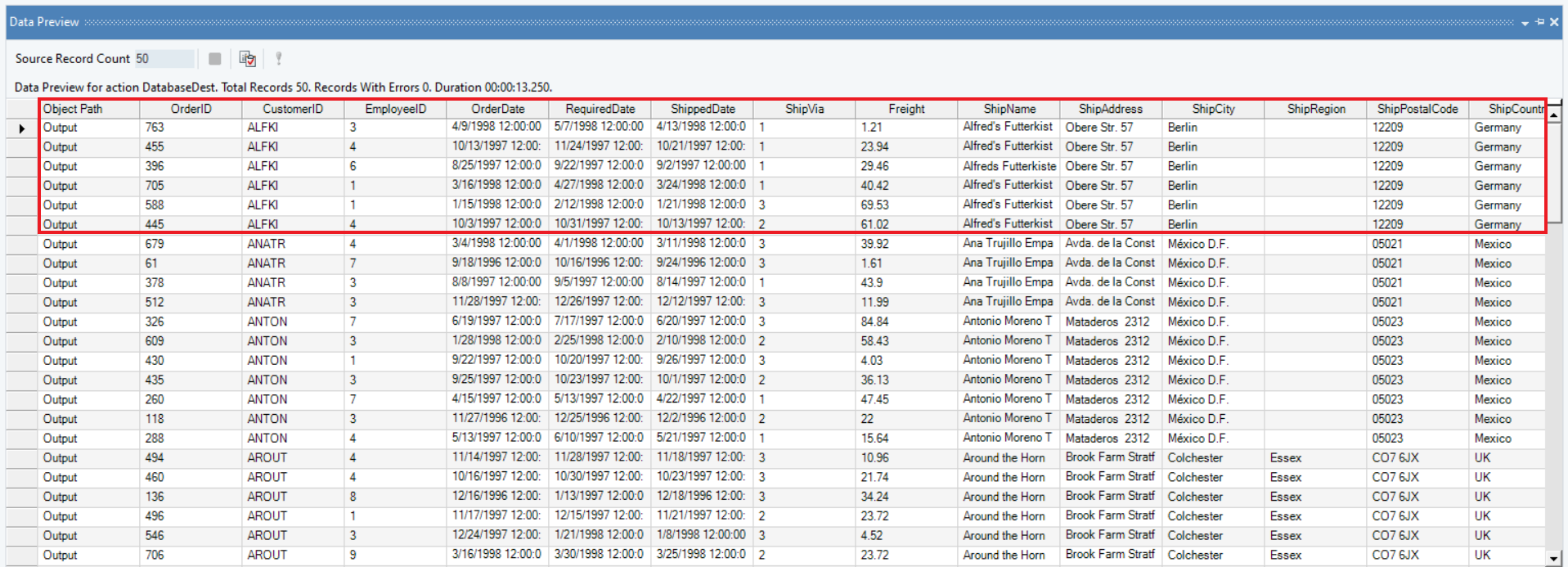

Now, if we preview the data in the database table, we can see that the details for all the orders placed by customers have been written into the destination table.

We have successfully connected to the Snowflake Database and completed our use case in Astera.

Was this helpful?