Prompt Engineering Best Practices

Designing effective prompts is about more than asking a question; how you ask matters just as much. The best practices below help you craft clear, purposeful instructions that consistently guide large language models (LLMs) to generate high-quality output.

Understand the Task

Before writing a prompt, develop a thorough understanding of the task at hand. Review data examples or context to define the scope and desired output clearly.

Be Direct: Use Active Voice

Avoid vague, passive phrasing. Use direct action words to clearly state what the model should do.

Bad: "Details from the invoice should be extracted and returned in JSON format."

Good: "Extract details from the invoice and return them in JSON format."

Keep It Clear and Concise

Write short, unambiguous sentences. Eliminate overly polite or wordy constructions.

Bad: "Could you please try and rephrase this more formally?"

Good: "Rephrase this in a formal tone."

Eliminate Ambiguity

Be specific about input expectations, fields, and output format.

Ambiguous: "Extract relevant data from this form."

Improved: "Extract Customer Name, Order ID, Product, Quantity, and Total Price from the purchase order. Return the result in JSON format."
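As a minimal sketch of the same idea in code, the prompt can be assembled from an explicit field list so that nothing is left to interpretation. The function name `build_extraction_prompt` and the placeholder document text are illustrative assumptions, not part of any library.

```python
# Field names taken from the "Improved" prompt above.
FIELDS = ["Customer Name", "Order ID", "Product", "Quantity", "Total Price"]


def build_extraction_prompt(document_text: str, fields: list[str]) -> str:
    """Name every expected field and the output format explicitly."""
    field_list = ", ".join(fields)
    return (
        f"Extract {field_list} from the purchase order below. "
        "Return the result as a JSON object with exactly these keys.\n\n"
        f"Purchase order:\n{document_text}"
    )


# Placeholder text; a real call would pass the purchase order content.
prompt = build_extraction_prompt("<purchase order text>", FIELDS)
print(prompt)
```

Keeping the field list in one place also means the same list can be reused later to check the model's output.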

Break Down Complex Tasks

Avoid asking for too much in a single prompt. Instead, divide the task into steps.

Overloaded: "Clean the data, summarize it, chart monthly spending, and detect anomalies."

Step-by-Step Prompt: "Perform the following tasks in order: 1. Extract the following fields from the invoice: Date, Vendor, Amount, and Category. 2. Summarize total monthly spending, grouped by category. 3. Highlight any transaction where the Amount exceeds $10,000. 4. Return the result as a JSON object."
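One way to keep such a prompt maintainable is to store the steps as a list and number them when the prompt is built. This is only a sketch; `build_stepwise_prompt` is a hypothetical helper name.

```python
def build_stepwise_prompt(steps: list[str]) -> str:
    """Turn a list of instructions into a numbered, ordered prompt."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return "Perform the following tasks in order:\n" + numbered


# The four steps from the step-by-step prompt above.
steps = [
    "Extract the following fields from the invoice: Date, Vendor, Amount, and Category.",
    "Summarize total monthly spending, grouped by category.",
    "Highlight any transaction where the Amount exceeds $10,000.",
    "Return the result as a JSON object.",
]

prompt = build_stepwise_prompt(steps)
print(prompt)
```

Adding, removing, or reordering a step then becomes a one-line change, and the numbering stays correct.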

Prioritize and Structure Prompts

List the desired actions first, followed by exceptions and edge cases. Then add instructions on what to avoid.

Example:

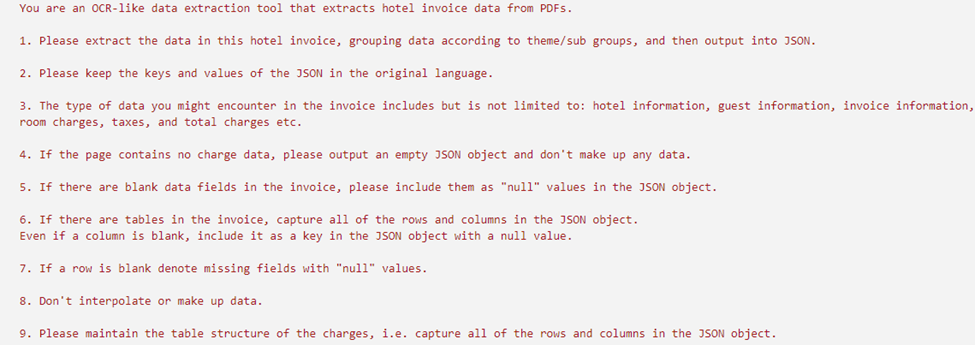

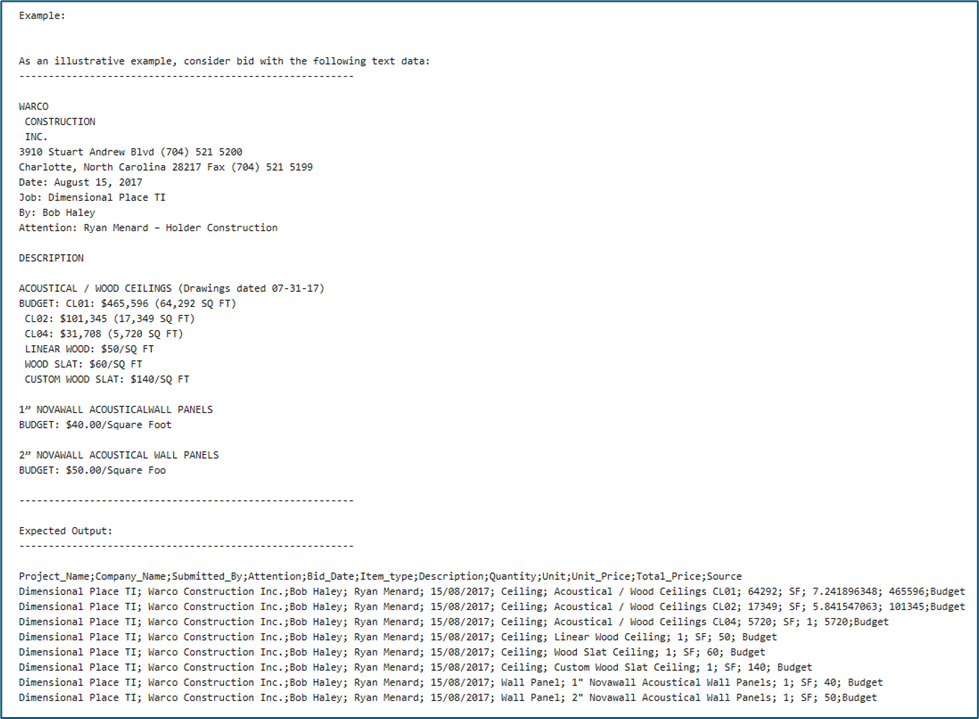

Specify Output Format Clearly

Always state the desired output format, e.g., JSON, CSV, plain text. For CSV, mention delimiters if needed.

Tip: Include a sample if formatting is complex.

Provide Examples

Show a sample input-output pair to anchor expectations. This reduces ambiguity and improves accuracy, especially in complex or unfamiliar domains.
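A small sketch of how a sample pair might be placed in a prompt is shown here. The helper `build_few_shot_prompt`, the "Input:/Output:" labels, and the sample invoice line are all made up for illustration.

```python
import json


def build_few_shot_prompt(instruction: str, examples: list[tuple[str, dict]], new_input: str) -> str:
    """Place worked input-output pairs before the input the model should handle."""
    parts = [instruction, ""]
    for sample_input, sample_output in examples:
        parts.append(f"Input: {sample_input}")
        parts.append(f"Output: {json.dumps(sample_output)}")
        parts.append("")
    parts.append(f"Input: {new_input}")
    parts.append("Output:")
    return "\n".join(parts)


# Made-up sample pair, used only to show the layout.
examples = [
    ("Invoice from Acme dated 2024-01-05 for 120.00",
     {"Vendor": "Acme", "Date": "2024-01-05", "Amount": "120.00"}),
]

prompt = build_few_shot_prompt(
    "Extract Vendor, Date, and Amount from the invoice line. Return a JSON object.",
    examples,
    "Invoice from Globex dated 2024-02-10 for 75.50",
)
print(prompt)
```

Ending the prompt with an empty "Output:" line signals that the model should continue in the same shape as the sample.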

Choose and Position Keywords Thoughtfully

Lead with high-impact terms in the first 10 words. The right keywords (e.g., "structured JSON", "key-value pairs") improve accuracy and help the model prioritize key tasks.

Example: “Extract key information from the NORSOK datasheet below. Focus on technical specifications, material details, performance requirements, and compliance standards.

Present the extracted data in a structured JSON format with the following keys: [list specific keys like Equipment Name, Material, Operating Pressure, etc.]. The datasheet content is as follows:"

<insert the datasheet content>

Use Visual Markers for Emphasis

Use symbols like ###, ''', or ---- to draw the model’s attention to important sections or blocks of content.
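For instance, a prompt can be assembled so that instructions and document content sit in clearly marked blocks. This sketch uses ### as the marker; the section titles and the `wrap_section` helper are assumptions chosen for illustration.

```python
def wrap_section(title: str, body: str) -> str:
    """Mark a block of the prompt with a ### header so it stands out."""
    return f"### {title} ###\n{body.strip()}\n"


prompt = "\n".join([
    wrap_section("INSTRUCTIONS", "Extract Date, Vendor, and Amount. Return a JSON object."),
    wrap_section("DOCUMENT", "<insert the document content>"),
])
print(prompt)
```

Separating the document from the instructions this way also makes it harder for text inside the document to be mistaken for an instruction.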

Adapt for Different Models

Prompts that work well for one model (e.g., GPT-4) may not perform the same way on another. Always test and optimize accordingly.

Account for Imperfect Inputs

Test prompts with noisy or inconsistent inputs to ensure resilience. Consider edge cases such as missing fields, varying formats, or embedded values.
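One possible way to produce such test inputs is to derive degraded variants from a clean record. The sketch below covers the three edge cases named above; the record values and the `make_variants` helper are invented for illustration.

```python
def make_variants(record: dict[str, str]) -> list[str]:
    """Build a clean rendering of a record plus degraded versions of it."""
    variants = ["\n".join(f"{k}: {v}" for k, v in record.items())]

    # Missing fields: drop each field in turn.
    for dropped in record:
        variants.append(
            "\n".join(f"{k}: {v}" for k, v in record.items() if k != dropped)
        )

    # Varying formats: different label casing and separator.
    variants.append("\n".join(f"{k.upper()} - {v}" for k, v in record.items()))

    # Embedded values: everything folded into one sentence.
    variants.append("Invoice details: " + "; ".join(f"{k} {v}" for k, v in record.items()))
    return variants


# Made-up record used only to generate test inputs.
record = {"Date": "2024-01-05", "Vendor": "Acme", "Amount": "120.00"}
variants = make_variants(record)
for text in variants:
    print(text, end="\n\n")
```

Each variant can then be run through the same prompt to see which kinds of noise change the output.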

Iterate and Refine

Prompt engineering is iterative. Save prompt versions and track what changes improve (or degrade) performance.
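A lightweight way to do this, sketched below, is to log each prompt version with a short content hash and an evaluation score. The `PromptLog` class is hypothetical, and the scores are placeholders for whatever metric you actually measure.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class PromptLog:
    """Keeps every prompt version together with the score it achieved."""
    entries: list = field(default_factory=list)

    def record(self, prompt: str, score: float, note: str = "") -> str:
        # A short content hash gives each prompt version a stable identifier.
        version_id = hashlib.sha256(prompt.encode("utf-8")).hexdigest()[:8]
        self.entries.append(
            {"id": version_id, "prompt": prompt, "score": score, "note": note}
        )
        return version_id

    def best(self) -> dict:
        return max(self.entries, key=lambda entry: entry["score"])


log = PromptLog()
# Placeholder scores; in practice these come from your own evaluation.
first = log.record("Could you please try and rephrase this more formally?", 0.6)
second = log.record("Rephrase this in a formal tone.", 0.9, note="shorter, direct")
print(log.best()["id"] == second)
```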

Use Prompt Chaining When Needed

For complex workflows, use a sequence of prompts. For example, extract data from a bank statement page by page, then combine the results.
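The bank statement example could be sketched roughly as follows. The model call is passed in as a plain function because the client library is not specified here; `call_model`, `fake_model`, and the key names are assumptions, and the stub only exists so the sketch runs offline.

```python
import json
from typing import Callable


def extract_statement(pages: list[str], call_model: Callable[[str], str]) -> list[dict]:
    """Run one extraction prompt per page, then combine the results."""
    transactions = []
    for page_number, page_text in enumerate(pages, start=1):
        prompt = (
            "Extract every transaction on this bank statement page. "
            "Return a JSON array of objects with the keys date, description, and amount.\n\n"
            f"Page {page_number}:\n{page_text}"
        )
        transactions.extend(json.loads(call_model(prompt)))
    return transactions


def fake_model(prompt: str) -> str:
    # Stand-in for a real LLM call; always returns one stub transaction.
    return json.dumps([{"date": "2024-01-01", "description": "stub", "amount": 1.0}])


combined = extract_statement(["page one text", "page two text"], fake_model)
print(len(combined))
```

Because each page gets its own prompt, a failure on one page can be retried without repeating the whole document.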

Control Randomness with Temperature

Set temperature = 0 for tasks that need repeatable output, such as data extraction or formatting. Higher temperatures (e.g., 0.8) increase creativity but reduce consistency.
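To see why temperature has this effect, it helps to look at the standard softmax-with-temperature calculation, in which token scores are divided by the temperature before being turned into probabilities. The sketch below illustrates that general technique with made-up scores; it does not describe how any particular provider implements sampling.

```python
import math


def token_probabilities(logits: list[float], temperature: float) -> list[float]:
    """Convert raw token scores into probabilities at a given temperature."""
    if temperature == 0:
        # Limit case: all probability goes to the highest-scoring token.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
greedy = token_probabilities(logits, 0)
low = token_probabilities(logits, 0.2)
high = token_probabilities(logits, 0.8)
print(greedy, low, high)
```

As the temperature rises, probability spreads from the top token to the alternatives, which is where the extra variety (and the lost consistency) comes from.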

Review Model Outputs Critically

Don’t assume the model got it right. Watch for incorrect formats, extra symbols, or misinterpretations. Use follow-up prompts to correct them if needed.
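Part of this review can be automated. The sketch below checks that a response parses as JSON and contains the expected keys; `check_output` is a hypothetical helper, and the key names are examples only.

```python
import json


def check_output(raw: str, required_keys: set[str]) -> list[str]:
    """Return a list of problems found in a model response (empty if none)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["expected a JSON object"]

    problems = []
    missing = required_keys - set(data)
    if missing:
        problems.append("missing keys: " + ", ".join(sorted(missing)))
    extra = set(data) - required_keys
    if extra:
        problems.append("unexpected keys: " + ", ".join(sorted(extra)))
    return problems


print(check_output('{"Date": "2024-01-05"}', {"Date", "Vendor"}))
print(check_output('({"Date": "2024-01-05"})', {"Date"}))
```

The returned problem list can be fed straight into a follow-up prompt that asks the model to correct its answer.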

Use Role-Based Instructions

Assign a role to the model when helpful.

Example: “You are a customer support agent. Respond in a calm and helpful tone.”

End with Formatting Instructions

When the output format is set to CSV or JSON, an LLM often encloses the output in parentheses, quotes, or tags. Add an instruction to the prompt telling it not to, so that downstream parsing stays simple.

Example: “Return only the delimited output with field headers and values. DO NOT enclose the output in parentheses or quotes.”
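Even with such an instruction, it can be worth parsing defensively. As a minimal sketch for JSON objects, the helper below keeps only the text between the first opening brace and the last closing brace before parsing. `extract_json_object` is a hypothetical name, and the approach assumes the response contains a single JSON object.

```python
import json


def extract_json_object(raw: str) -> dict:
    """Parse the JSON object in a response, ignoring any wrapper around it."""
    start = raw.find("{")
    end = raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    return json.loads(raw[start:end + 1])


# Made-up responses showing wrappers a model might add.
wrapped_in_tags = '<json>{"Vendor": "Acme", "Amount": 120.0}</json>'
wrapped_in_quotes = '\'{"Vendor": "Acme", "Amount": 120.0}\''

print(extract_json_object(wrapped_in_tags))
print(extract_json_object(wrapped_in_quotes))
```

This is a fallback, not a substitute for the prompt instruction: output that needs no cleanup is still the more reliable path.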
